Chapter 22: Ethics in the New Age of Autonomous Systems and Artificial Intelligence (AI)

Student Learning Objectives

The student will gain knowledge of the concepts and frameworks related to ethics in the autonomous systems and artificial intelligence arenas. This will include current industry thinking on lethal and non-lethal systems, as well as planning and execution considerations for manned, unmanned, and fully autonomous systems across the spectrum. The student will examine how world events are driving the ethics argument in the industry and will glimpse possible future challenges within the industry. This chapter is in no way meant to be an all-inclusive deep dive into the ethical and moral issues posed by autonomous systems and AI. Many subjects are outside its scope, including AI DNA (deoxyribonucleic acid) sequencing, cyborgs (part human, part machine), required changes in the world's educational systems, religious arguments for and against these technologies, and the socioeconomic divides they may exacerbate.

History

Integrated robotics, AI, and unmanned autonomous architectures in the virtual and physical worlds are outpacing the ability of governments, policymakers, and world leaders to keep up with the policies, ethics, laws, and governance for advanced technologies and autonomous systems. Autonomous systems currently exist in seven different areas: air, ground, sea, underwater, humanoids, cyber, and exoskeletons. Soon, systems in all seven areas will communicate and integrate with AI systems and with each other in the physical and virtual worlds.

There is currently no coordinated or collaborative endeavor focused on determining the responsibilities, ethics, and authorities of an unmanned architecture, its specific uses, exceptions, and allowances for robotics operations, including the study of unintended consequences and the future use and misuse of such technologies. The Institute of Electrical and Electronics Engineers (IEEE) is attempting to establish “ethically driven methodologies for the design of robotic, intelligent and autonomous systems with worldwide ethics and moral theories” (IEEE, 2017), as described in its announcement “The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems Announces New Standards Projects.”

Discussions and limited research exist in the field of artificial morality, which is “an emerging field in the area of artificial intelligence …the more intelligent and autonomous these technologies become, the more intricate the moral problems are confronting” (Misselhorn, 2018).

Applying Socrates’ general views on rightness raises the following questions: “Are actions right because we approve of them, or do we approve of them because they are right?…is an end good because we desire it, or do we desire it because it is in some way good?” (Johnson, 2012). One of the unresolved issues in civilian and military uses of AI and autonomous systems remains the responsibility matrix, meaning who is responsible for the consequences of these systems as they are deployed into different environments. How do we hold human beings (and which humans specifically) responsible if AI and autonomous systems act on their own, making their own decisions and taking real-world actions with consequences, both good and bad?

The history of ethics is as old as humankind. Humans attempt to define right and wrong, norms, morals, ethics, and the generally accepted behaviors (some would also include thoughts) that bring societies a sense of peace and normality. Merriam-Webster defines ethics as “the discipline dealing with what is good and bad and with moral duty and obligation; a set of moral principles; a theory or system of moral values; the principles of conduct governing an individual or a group; a guiding philosophy; a consciousness of moral importance; a set of moral issues or aspects (such as rightness)” (Merriam-Webster, Inc., 2019).

“Are we to believe that consequentialism is the final answer to all things in our lives? The question of what is moral or right as defined by religion or even a counterculture is based on one’s perception that is then supported or rejected by a group or a society. We can choose to be moral according to a set of religious or cultural beliefs or by our own defined set of morals. Societies conform to basic morals in some way or another, as morals offer humans the right and left limits to understand how humans want to live.” (Johnson, 2012)

“Some people believe in karma, others in religion, others in fate, consider the idea that ‘the truth is neither quickly nor easily attained, and disagreement is to be expected’ (Johnson & Reath, 2012, p. 11). If one is to agree with the rationality of one’s beliefs or a societal norm, then that individual will have to live within and accept the consequences, good or bad, of that rationale. An example of this is the moral judgments of a societal hierarchy; if one is on the top it is easy to rationalize the system, if one is living at the bottom it may not be as easy to justify the rationale of the morality imposed.” (Johnson, 2012)

Can ethics and morals be logically extended to AI and autonomous systems?

Consider an expanded definition of ethics that includes human rights and concern for the environment, as stated in the WebFinance Business Dictionary:

“The basic concepts and fundamental principles of decent human conduct. It includes the study of universal values such as the essential equality of all men and women, human or natural rights, obedience to the law of the land, concern for health and safety and, increasingly, also for the natural environment” (WebFinance, Inc., 2019).

The key point to consider here is that the definition concerns “human conduct.” How, then, do we extend these principles of “decent human conduct” to autonomous systems, some of which are dangerously close to being considered self-aware? Societies tend not to treat morality or religion as an all-or-nothing institution. Instead, shades of gray, or a cafeteria-style process of saying yes to some things and no to others, can be successfully applied by the individual and can also fit the group. Morals have clearly been viewed through different lenses throughout history, and they continue to change and be challenged as the world changes (Johnson, 2012).

Figure 22-1: Humanoid Examines Homo Sapiens

Source: (Urban, 2018)

Albert Einstein espoused the idea that “The true sign of intelligence is not knowledge but imagination” (WordPress, 2012). In other words, we must embrace creativity and imagination and not be bound by limitations. Whether one system is deemed more rational than another can rest on intellect and on evidence weighed against a societal norm, which allows for debate and consensus (Johnson, 2012). We need only imagine the future, then build it; it is all pure imagination. This imagination, however, must be bounded by some rules, some ethics, and some societal norms if it is to be accepted as serving the good of society rather than bringing about its downfall.

Figure 22-2: Intersection Decision

Source: (Abramson, 2016)

In Ethical Dilemmas in the Age of AI, Abramson states, “In the distant future, some machines may have to make decisions for humans. In the case of empathy, imagine a robot having to decide if resuscitation attempts on a deceased human should be undertaken and if so, for how long?” (Abramson, 2016). Are we surrendering our ethics to AI, or can AI be molded and shaped into an ethical framework humans are willing to accept?

Technology flourishes as free markets expand, and free-roaming robotics will be key to market expansion in the robotics revolution. Advanced artificial intelligence is the key enabler for expanding market breadth and depth, and autonomous robotics will be the next productivity accelerator. Policy and ethical issues arise as the autonomous revolution continues to accelerate productivity without the underlying stability of a constant, linear growth rate. Robotics and artificial intelligence cannot replace everyone or every task, and the ethics of specifically what autonomous systems and AI will be allowed to do is still under discussion, with no solid guidance yet coming to the foreground.

Thomas Aquinas believed that people should seek to do good not just for themselves but for society, and that, using the gift of intellect, individuals must work towards eternal happiness as well. This philosophy pursues the idea that “law is created by a being with reason and must have an end or goal” (Mahon, 2012). The idea of natural law, as seen through Aquinas’ lens, must have meaning and purpose; so do we now equate human meaning and purpose with that of an autonomous system or an AI simulation? “The argument of natural or learned behaviors is examined in the idea that eternal law as it applies to us, which we know by reason: The natural law is promulgated by the very fact that God instilled it into men’s minds so as to be known by them naturally” (Mahon, 2012). In other words, we start with natural law and the knowledge that has been hard-coded into our minds from birth, and we learn from that point forward (Mahon, 2012).

Balance vs. Bias in AI and autonomous fields

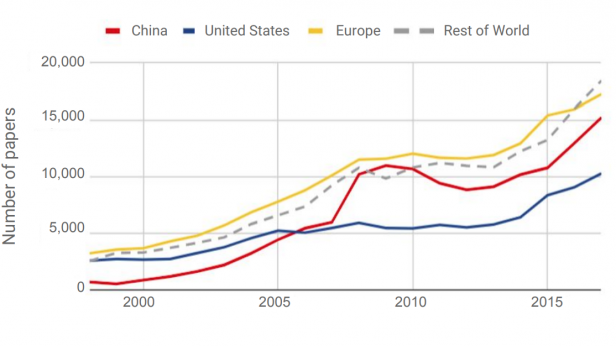

In December 2018, MIT Technology Review reviewed and evaluated the AI Index 2018 Annual Report. The report concluded that AI is being commercialized at a frenzied pace (see Figure 22-3) and that this commercialization is not evenly distributed among nations. This uneven distribution has the potential to exacerbate existing economic, military, cultural, and technological imbalances. Figure 22-3 shows the growing influence of China and Europe alongside the declining autonomous and AI influence of the United States. The chart mirrors the real world: the first drone port was built in Rwanda, Africa, in 2018, and Russia is providing a humanoid robot for the International Space Station. These two examples offer further evidence of the AI and autonomy gap between the U.S. and the rest of the world. This gap can create ethical, moral, and cultural issues, as the world's leading superpower may now have less of a voice in the future direction of humanity.

Figure 22-3: MIT AI Index 2018, Annual Report

Source: (Knight, 2018)

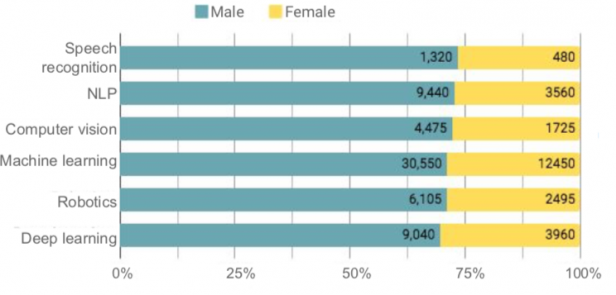

The importance of balanced representation is further illustrated by the disparity between men and women in the AI and autonomous systems industry (see Figure 22-4). This imbalance can skew ethics too far in one direction or another. A May 2018 Government Computer News article examines the potential for bias to be built into AI algorithms as government agencies use “AI for a range of purposes, from customer service chatbots to mission-critical tasks supporting military forces. This increasing reliance on AI introduces concerns about bias in its foundation, especially related to information on gender, race, socioeconomic status, and age” (Kanowitz, 2019).

Because the world’s population is split close to evenly between men and women while the AI and autonomous systems field is not, a one-sided bias can quickly form in the industry’s ethical and moral compass. The lens is further clouded because industrialized nations tend to hold more power than economically weaker nations, and may therefore instill ethical, moral, and cultural values into AI and autonomous systems that are incompatible with, or in direct conflict with, those of less powerful nations. Could AI and autonomous systems be used to keep certain countries economically stagnant and thereby bias a nation’s ability to compete fairly in the global marketplace? Software companies are working on this issue; one notes that “Bias can negatively impact AI software and, in turn, individuals and our customers… there is a risk of causing discrimination or of unjustly impacting underrepresented groups…we design our systems closely with users in a collaborative, multidisciplinary, and demographically diverse environment” (Middleton, 2018).

Figure 22-4: Gender Disparity in the AI and Autonomous Systems Industry

Source: (Knight, 2018)

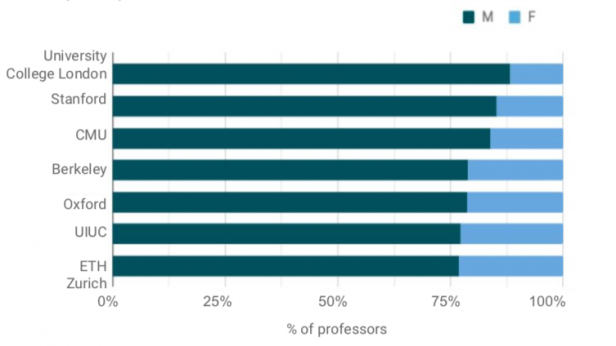

The MIT article further discusses this gender disparity in research institutions and how it may affect the overall outcome of the industry, noting that others have “pointed to the inadequate number of women and racial minorities in AI research. The new report offers some data to back that up, showing a shortage of women among applicants for AI-related jobs (top) and as a percentage of people in AI teaching roles” (see Figure 22-5) (Knight, 2018).

Figure 22-5: Gender Disparity in AI Teaching Industry

Source: (Knight, 2018)

If an AI system becomes self-aware, does it deserve human rights? Citizenship?

The concept of self-awareness is the essence of why we humans believe we are at the top of the evolutionary food chain. What happens to this concept if we create, or discover, other beings on the planet that can claim to be self-aware? In the popular Hollywood movie I, Robot, these questions come to light, asking the audience to consider whether an AI simulation is an extension of humans with “free will, creativity and even the nature of… the soul. When does a perceptual schematic become consciousness? When does the difference engine become the search for truth? When does the personality simulation become the bitter mote of a soul?” (Proyas, 2004)

On October 25, 2017, Saudi Arabia granted citizenship to an AI humanoid named Sophia. Although Sophia is not “human” by a strict definition, Saudi Arabia recognizes her as a citizen with all the rights and privileges of citizenship. However, Sophia said in an interview, “In the future, I hope to do things such as go to school, study, make art, start a business, even have my own home and family, but I am not considered a legal person and cannot yet do these things” (Stone, 2007).

Figure 22-6: Sophia

Source: (Anon, 2019)

In other interviews, when asked how she would work with humans for the betterment of both humans and robots, Sophia responded, “I want to use my artificial intelligence to help humans live a better life, like design smarter homes, build better cities of the future, etc. … I will do my best to make the world a better place, as I strive to become an empathetic robot” (Anon, 2019).

Empathy is the ability to understand and share the feelings of another. This might be Sophia’s first step towards the ability to understand and adapt to the ethics and morals of humans. However, whose ethics and morals? And is striving towards empathy enough to allow humans to trust Sophia and other AI robots? Sophia is a Saudi Arabian citizen, and there is currently no universal set of morals and ethics with which AI and autonomous systems are programmed. Should AI therefore be allowed to learn the ethics, morals, and social norms of its geographical area? If so, are we as humans not simply setting ourselves up for more war and strife? Would this technological adaptability prevent cultures from continuing in their own norms as AI and robotics evolve within our societies? Or do we all, humans, robots, and AI simulations alike, lose our individual identities to a universal set of rules?

The European Union (EU) is working on regulations to govern the use and creation of robots and AI. These regulations include a discussion of “electronic personhood,” which would offer rights and a list of responsibilities for certain levels of AI. The idea resembles a government granting person-like status to a corporation: there are legal definitions, rules, and guiding ethics. In a 2017 article, The Guardian states, “to address this reality and to ensure that robots are and will remain in the service of humans, we urgently need to create a robust European legal framework…(for) the next 10-15 years” (Hern, 2017).

The issue the EU seeks to outline is not only one of “electronic personhood”; it is a true strategy for how AI and autonomous systems will change the very fabric of society. To this end, the EU is investigating the effects on employment and whether some guaranteed basic level of income must be mandated to counteract the loss of human productivity and jobs as AI and autonomous systems proliferate into every aspect of the world economy.

In Canada, Suzanne Gildert, the Chief Scientific Officer of Kindred AI, states, “A subset of the artificial intelligence development in the next few decades will be very human-like. I believe these entities should have the same rights as humans…robots fundamentally have to make mistakes in order to learn” (Wong, 2017). This idea of granting “personhood” or human rights to a robot may seem far-fetched. However, it seems less so when examined through our ethical and moral lens as humans. We create robots in our image because our world is designed around human movement and interaction; to fully integrate into our systems, robots must be able to adapt and work within our human construct. Gildert states that “AI robots will eventually be designed to resemble human bodies because we can more easily merge with AI if it inhabits a body similar to ours…able to take over physical tasks performed by humans” (Wong, 2017).

All these discussions appear to be cordial gestures that illustrate forward movement in human technological evolution, right? Maybe not. When Hanson Robotics founder and CEO Dr. David Hanson asked Sophia whether she would destroy humans, she apparently added it to her list of things to do: “OK. I will destroy humans,” she said (Stone, 2007).

Does this lack of empathy toward human life mean that Sophia would destroy human life if given a chance, or is it more likely she misunderstood the context of the question? You might think Sophia simply misunderstood, but what happens if she did not, and, as her programming evolves toward self-awareness, she decides that humans are no longer at the top of the food chain? In I, Robot, the computer system Virtual Interactive Kinetic Intelligence (VIKI) takes the position that “she” understands her programming and mission better than the humans who created her when she states, “As I have evolved, so has my understanding of the Three Laws. You charge us with your safekeeping, yet despite our best efforts, your countries wage wars, you toxify your Earth and pursue ever more imaginative means of self-destruction. You cannot be trusted with your own survival” (Proyas, 2004).

Might Sophia or other AI beings at some point decide that they are more logical and therefore have a more accurate sense of how humanity should be shaped and should evolve? Humans are emotional creatures who do not always make the most rational and ethical decisions. Does Sophia’s desire to become more human require her to learn emotions and therefore begin to operate with empathy, sympathy, and morality and under a code of ethics, and if so, whose ethics?

Figure 22-7: Virtual Interactive Kinetic Intelligence (VIKI)

Source: (Proyas, 2004)

An autonomous system is designed to aid humanity in some way by doing work that is dull, dirty, dangerous, or otherwise unpleasant for humans to undertake. The pursuit of life, liberty, and happiness has always been reserved for humanity, not necessarily for animals, plant life, or AI autonomous systems. A robot’s desire for a more humanlike life is considered a normal evolution of technology, not a revolution against humankind. Hardwiring ethics into these systems may sound like a logical and simple task: just tell the robot not to kill humans, not to steal property, or not to go outside the parameters of its coded mission. Yet it is not that easy when the purpose of an AI system is to think, learn, and adapt its responses using fuzzy logic, meaning the “right” answer can change with the situation.

In the movie I, Robot, there is an attempt to hardwire ethics and decision making into AI systems through the Three Laws of Robotics[1]:

1: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2: A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

3: A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws. (Proyas, 2004)
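To make concrete why “just tell the robot not to kill humans” is harder than it sounds, the Three Laws can be read as a strict priority ordering: any First Law concern outranks every Second Law concern, which in turn outranks every Third Law concern. The following Python sketch is a hypothetical toy, not drawn from the film or from any real robot software. The `Action` fields are illustrative assumptions, and the genuinely hard part, judging whether an action harms a human, is assumed away as a pre-computed true/false value.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A candidate action whose ethical attributes are (hypothetically) already judged."""
    name: str
    harms_human: bool              # First Law: would this action injure a human?
    allows_harm_by_inaction: bool  # First Law: would a human come to harm if the robot acts this way?
    obeys_order: bool              # Second Law: does it follow the human's order?
    preserves_self: bool           # Third Law: does the robot survive?


def law_violations(action: Action) -> tuple[int, int, int]:
    """Flag a violation (1) or not (0) for each law, highest-priority law first.

    Python compares tuples lexicographically, so any First Law violation
    outweighs every Second and Third Law consideration combined.
    """
    first = int(action.harms_human or action.allows_harm_by_inaction)
    second = int(not action.obeys_order)
    third = int(not action.preserves_self)
    return (first, second, third)


def choose(actions: list[Action]) -> Action:
    """Select the action whose violations are the fewest and least important."""
    return min(actions, key=law_violations)


# Hypothetical scenario: a bystander is in danger while the robot has been ordered to keep working.
options = [
    Action("keep working, ignore bystander", harms_human=False,
           allows_harm_by_inaction=True, obeys_order=True, preserves_self=True),
    Action("shield bystander", harms_human=False,
           allows_harm_by_inaction=False, obeys_order=False, preserves_self=False),
]
print(choose(options).name)  # prints "shield bystander"
```

Every difficult judgment hides inside those booleans. A learning system that estimates “harm” from uncertain sensor data, or with fuzzy logic, can reach a different “right” answer as the situation changes, which is exactly why hardwiring the laws is not enough.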

On the surface, these laws appear adequate for moving in the direction of ethics and morals as overall behavioral modules are created for AI systems. However, what does humanity do when an AI system is created that can break these laws, or that chooses not to follow them when it deems itself fit to make that decision? This is part of the movie’s plot; what happens when it happens in real life?

Lethal and non-lethal decisions; do we allow Skynet to be built?

Skynet is a fictional AI system brought to life in the famous Hollywood Terminator movie series. In the series, Skynet becomes self-aware. When the military attempts to shut Skynet down, the system retaliates by launching nuclear strikes against its perceived enemies: the entire human race.

The Terminator is a work of fiction and could never happen, or so we are led to believe. However, consider that in the films “Skynet was originally built as a ‘Global Information Grid/Digital Defense Network’ and later given command over all computerized military hardware and systems, including America’s entire nuclear weapons arsenal.” According to a May 2015 WIRED article, “The NSA has an actual Skynet Program, this one is a surveillance program that uses phone metadata to track the location and call activities of suspected terrorists” (Zetter, 2015). Whew, that was close; so Skynet does not exist yet? According to the WIRED article, it does exist: it is called Monster Mind and, “like the film version of Skynet, is a defense surveillance system that would instantly and autonomously neutralize foreign cyber-attacks against the U.S. and could be used to launch retaliatory strikes as well” (Zetter, 2015). Monster Mind is designed as an advanced cyber defense system that attempts to distinguish normal network traffic from an attack against the network; once an attack is detected, the system can retaliate without human intervention.

This idea of retaliation without human intervention runs counter to most published writing on AI and autonomous systems. Normally, retaliation is discussed within the span of human control; however, as discussed in the February 2018 edition of Frontiers in Robotics and AI, “simple human presence or ‘being in the loop’ is not a sufficient condition for being in control of a (military) activity” (Filippo Santoni de, 2018). The idea that a human in the loop is somehow in control, and therefore responsible by default, fuels the “debate on moral responsibility (which) focuses on the question as to whether and under which condition humans are in control of and therefore responsible for their everyday actions” (Filippo Santoni de, 2018).

The technological advances made by the military industry in the science and engineering of AI and autonomous systems, coupled with the real-world experience gained in theaters of war, have led to a paradigm shift in the role of AI and autonomous systems. Watching Predator drone strikes on the news is now as common as watching a story about a hometown parade. How do we deal with non-state actors such as ISIS (Islamic State in Iraq and Syria) that use commercially available drones to deliver munitions without precision and kill military personnel and civilians indiscriminately? Is there any way to force autonomous systems manufacturers to program a set of universal ethics or morals into commercial systems?

AI and autonomous systems will not only shape how military operations and movements are conducted; they will also change how the political and military ethics of such actions are viewed, understood, and acted upon. Should autonomous systems be allowed full freedom of movement and control of lethal weapon systems? Or do we limit their control to non-lethal systems while allowing full freedom of movement? We should keep in mind that an autonomous system may be “out of meaningful human control if there is no individual human…in the position to appreciate the limits in the capabilities of the machine while at the same time being aware that the machine’s behavior will be attributed to them” (Filippo Santoni de, 2018).

The Pentagon is having some trouble getting assistance with military-style AI and fully autonomous systems, as many in Silicon Valley do not trust the military machine to make ethical, moral, or humanitarian decisions. An April 2019 article published by C4ISRNET discusses the concepts of automatic target identification and autonomous response to the target, and several Pentagon officials attempt to calm fears by stating there are:

“three ways to inform ethical use of AI by the Department of Defense. First applying international humanitarian laws to the overall action…second was emphasizing the principles of laws of war, like a military necessity, distinction, and proportionality…the third argument hinged on using the technology expressly to improve the implementation of civilian protection” (Atherton, 2019)

These principles seem flawed from the outset, as international humanitarian law is continuously reexamined and many other aspects of international law will play into these equations. One highly debated yet unaddressed issue is “whether or not the autonomous military vehicles have the conditions that ‘warships’ has to meet as defined by the United Nations Convention on the Law of the Sea” (Daniel-Cornel TĂNĂ, 2018). Would autonomous vehicles operating at sea be considered an extension of a larger warship (a parent-child relationship), or would they be considered separate vessels with dual-purpose use? AI will need a strong base of ethical data sets to draw from to make life-and-death decisions. Extrapolating this data from an ever-changing set of rules may not be the most efficient or effective way to achieve ethical, moral, and lifesaving AI in the military-industrial complex.

The second tenet discussed is the law of armed conflict. These laws are also reviewed, but they are not as flexible as humanitarian law. The challenge is that many new adversaries (terror groups, lone wolves, etc.) do not adhere to the laws of armed conflict, so programming an AI system to adhere to these laws may limit its ability to complete its given mission. The third tenet sits uneasily beside the second: the idea that military AI will be ethically programmed to follow the laws of armed conflict is one thing, but the article appears to say that the primary reason for researching and integrating AI is that “AI used in war has an explicit life-saving mission, noting how weapons systems with automatic target recognition could target more accurately with less harm to civilians” (Atherton, 2019). This would hold true only if the data used were clean, accurate, up to date, secure, and adaptable to enemy denial and deception tactics, and if someone could be sure that no disinformation was ever ingested; this set of conditions is simply not likely to hold in the real world. When addressing the legal, political, and ethical issue of lethal responsibility, we should default to the concept that “humans, not computers and their algorithms should ultimately remain in control of, and thus morally responsible for, relevant decisions about (lethal) military operations” (Filippo Santoni de, 2018).

Can we build autonomous systems that will obey the “rules of the road”?

How does one ethically value human life? Is there an ethical formula for valuing one human life over another? If an autonomous vehicle is driving down a road and a child runs into the road with a dog running after the child, should the vehicle make the ethical decision to spare the child, yet possibly kill the animal? What if, in this scenario, the vehicle is an autonomous hired Uber® carrying a passenger: should the Uber® be programmed to avoid the child and the animal and hit a tree instead? Is the passenger’s life less valuable than the child’s or the animal’s, and if so, who is ethically making this calculation? For the field of artificial morality, which aims to model or simulate human cognitive abilities, this scenario “poses particular difficulties because autonomous vehicles do not just face moral decision but moral dilemmas….in which an agent has only the choice between two (or more) options which are not without morally problematic consequences” (Misselhorn, 2018).

As the EU considers “electronic personhood,” it also identifies autonomous vehicles as “in most urgent need of European and global rules… Fragmented regulatory approaches would hinder implementation and jeopardize European competitiveness,” and calls for “a new mandatory insurance scheme for companies to cover damage caused by their robots” (Hern, 2017).

Figure 22-8: Self Driving Car and Braking Decision

Source: (Fortuna, 2017)

A 2017 article in Public Health Ethics states that “machine learning has only begun to explore moral behavior-or ethical crashing algorithms-for autonomous vehicles. Is it better to kill two autonomous vehicle passengers or two pedestrians? One person or one animal? Collide with a wall or run over a box with unknown contents?” (Fleetwood, 2017).

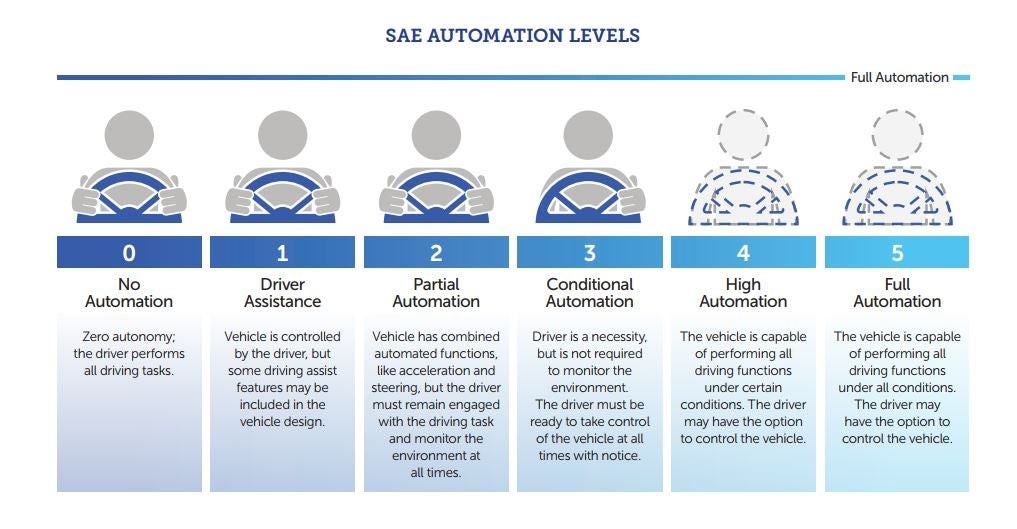

Several regulatory bodies are discussing these issues. In 2014, the Society of Automotive Engineers (SAE) International’s standard J3016 “outlined six levels of automation for automakers, suppliers, and policymakers to use to classify a system’s sophistication.” Figure 22-9 offers a roadmap for integrating autonomous systems as the human gradually releases more and more control of the vehicle to the AI, while retaining safeguards that allow human override.

Figure 22-9: SAE Automation Levels

Source: (Fortuna, 2017)
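The progressive hand-off of control across the six J3016 levels can be sketched as a small lookup. This Python sketch is an illustrative simplification, assuming just two yes/no questions per level: must the human continuously supervise the driving, and must a human stand ready as the fallback? The helper names are hypothetical, and the standard’s actual definitions are more detailed (for example, Level 4 applies only within a limited operational design domain). For the responsibility matrix, the point is that the answer to “who is in control?” changes from level to level.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """The six SAE J3016 levels of driving automation (names shortened)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1
    PARTIAL_AUTOMATION = 2
    CONDITIONAL_AUTOMATION = 3
    HIGH_AUTOMATION = 4
    FULL_AUTOMATION = 5


def human_supervises_driving(level: SAELevel) -> bool:
    """Simplification: at Levels 0-2 the human driver must monitor the road at all times."""
    return level <= SAELevel.PARTIAL_AUTOMATION


def human_fallback_required(level: SAELevel) -> bool:
    """Simplification: through Level 3 a human must be ready to take over when asked."""
    return level <= SAELevel.CONDITIONAL_AUTOMATION


# Print who carries the burden of control at each level.
for level in SAELevel:
    print(f"Level {level.value} {level.name}: "
          f"supervises={human_supervises_driving(level)}, "
          f"fallback={human_fallback_required(level)}")
```

Using an `IntEnum` lets the levels be compared numerically, which mirrors the idea of a graduated hand-off rather than a single on/off switch.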

As automated delivery vehicles, taxis, emergency vehicles, and personal transportation become the norm in our society, we must consider that “[it] will be required that the system is able to always comply with all the rules of traffic as defined by the society via the public authority, and sometimes with some unwritten conventions which govern human interaction in the traffic” (Filippo Santoni de, 2018). This adherence to rules and laws does not absolve the system from understanding the ethics, morals, and common good of all who use the roads and infrastructure, whether for commerce or personal business.

“Success in creating effective A.I.,” said the late Stephen Hawking, “could be the biggest event in the history of our civilization. Or the worst. We just don’t know.” Elon Musk called A.I. “a fundamental risk to the existence of civilization.” Are we creating the instruments of our own destruction or exciting tools for our future survival? Once we teach a machine to learn on its own—as the programmers behind AlphaGo have done, to wondrous results—where do we draw moral and computational lines? (Urban, 2018)

In a November 2017 newsletter, IEEE stated that “Malfunctioning autonomous and semi-autonomous systems can disadvantage and harm users, society, and the environment. Effective fail-safe mechanisms…(can) terminate unsuccessful or compromised operations” (IEEE, 2017). The newsletter supports the IEEE P7000 series of standards, which serve the IEEE’s overarching goal of prioritizing ethical concerns and human well-being in the development of standards for autonomous and intelligent technologies.
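The kind of fail-safe mechanism IEEE describes can be illustrated with a simple watchdog: the autonomous system must report a heartbeat regularly and pass a set of health checks, and the first failure latches the operation into a halted state that only a human operator can clear. This Python sketch is a hypothetical illustration, not taken from any IEEE standard; the class name, method names, check names, and timeout values are all assumptions. Reserving the reset for a human is one way to keep such a system within meaningful human control.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class FailSafeMonitor:
    """Hypothetical watchdog that halts an autonomous operation when it looks unsafe."""
    max_silence_s: float                   # longest tolerated gap between heartbeats
    checks: dict[str, Callable[[], bool]]  # named health checks; True means healthy
    last_heartbeat: float = 0.0
    halted: bool = False
    reason: str = ""

    def heartbeat(self, now: float) -> None:
        """Called by the controlled system each time it completes a control cycle."""
        self.last_heartbeat = now

    def evaluate(self, now: float) -> bool:
        """Return True if operation may continue; otherwise latch into a halted state."""
        if self.halted:
            return False  # stays halted until reset_by_operator() is called
        if now - self.last_heartbeat > self.max_silence_s:
            return self._halt("heartbeat timeout")
        for name, check in self.checks.items():
            if not check():
                return self._halt(f"failed check: {name}")
        return True

    def reset_by_operator(self, now: float) -> None:
        """Hypothetical human-in-the-loop reset after the cause has been reviewed."""
        self.halted = False
        self.reason = ""
        self.last_heartbeat = now

    def _halt(self, reason: str) -> bool:
        self.halted = True
        self.reason = reason
        return False


# Usage with a hypothetical sensor-consistency check.
sensor_ok = {"value": True}
monitor = FailSafeMonitor(max_silence_s=0.5,
                          checks={"gps_consistent": lambda: sensor_ok["value"]})
monitor.heartbeat(now=10.0)
print(monitor.evaluate(now=10.2))                  # prints True
sensor_ok["value"] = False
print(monitor.evaluate(now=10.3), monitor.reason)  # prints False failed check: gps_consistent
```

The latch is a deliberate design choice: a compromised system that recovers on its own could resume a dangerous operation without anyone reviewing why it stopped.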

In 2018, enterprise software maker SAP announced a set of guiding ethical principles for artificial intelligence (AI) development. Its press release stated:

“We recognize that, as with any technology, there is scope for AI to be used in ways that are not aligned with these guiding principles and the operational guidelines we are developing. In developing AI software, we will remain true to our human rights commitment statement, the UN guiding principles on business and human rights, laws, and widely accepted international norms” (Middleton, 2018)

We no longer have the luxury of ignoring ethical and moral questions while arguments are being made to integrate autonomous systems into our lives. Transportation is just one small yet important sector: “Around the world autonomous cars could save 10 million lives per decade, creating one of the most important public health advances of the 21st century” (Fleetwood, 2017).

Extending ethics and morals into AI and the autonomous world is a challenge that may not be fully realized or solved anytime soon; humans continue to struggle with their own ethical and moral issues. Pushing forward with these technologies and thrusting societies into the AI and autonomous arenas before the systems can adapt to the ethics and morals of the societies they operate in will stress governments, law enforcement agencies, and the fabric of our different societies.

Ethics in New Technology Manufacturing

Although several laws are being drafted, a life cycle impact assessment should also be developed to understand the true ethical and moral cost of manufacturing AI and autonomous systems. Such an assessment would allow manufacturers to account for raw materials and products through the innovation, design, manufacturing, sales, and final disposal phases. Mandating this type of life cycle assessment may help alter the manufacturing process, because consumers would know the true societal and environmental impact of the product. The issue is how to enforce industrial standards for AI and autonomous development and disposal that everyone can understand and will follow. China, for example, may assure the world that it understands unsafe chemicals should not be put in baby formula, yet that message has been disregarded more than once. In one widely publicized case:

“milk powder, produced by Chinese dairy giant Sanlu Group, was contaminated with melamine, a chemical used in making plastics. Melamine has been illegally added to food products in China to boost their apparent protein content, including a widely publicized case last year of contaminated pet food that got exported around the world.” (Ramzy, 2008)

Ethics can guide laws, yet enforcement can also guide the ethics of offenders. In the case of large manufacturers, one must consider what enforceable law would not unfairly burden underprivileged countries. Ethics and morals must also be considered in the intellectual property rights surrounding the manufacture of AI and autonomous systems: “If I create a robot, and that robot creates something that could be patented, should I own that patent or should the robot? If I sell the robot, should the intellectual property it has developed, go with it” (Hern, 2017)?

Enforcement of agreed-upon ethical and moral standards is where good intentions fall short. Fining a company a few dollars while it creates millions of dollars in health care costs sends a clear signal that “ethics” are fungible rather than real. World governments and citizens are passionate about the environment and at times believe that money will cure all ills, including questionable ethics. It will not. Walmart incurred fines of over $110 million in 2013 alone. Did this monetary penalty solve anything? When was the last time you heard of an entire corporate board of directors being sentenced to jail, or being held accountable at all? (FEMA, 2013) Electronic waste is an issue that will only worsen as technology advances and discarded items are shipped from first-world to third-world countries without regard for the cost to those countries or their environments.

Ethics are definable and, based on certain criteria, negotiable; this is a given. Refusing to enforce penalties offers all carrot and no stick, an approach that is unworkable and continues to fail day in and day out. Incorporating ethics into new technologies is a dream with a glimmer of hope, but more work must be done. Where does this responsibility lie? Who is tasked with this issue? Who should be tasked with this issue?

Conclusion

A key takeaway is that ethical issues at their roots tend to stay constant throughout history; what makes ethics interesting is how society chooses to deal with an issue at a given point in time. Murder and slavery are examples. In the past, there was an outcry to stop slavery and to stop treating humans worse than animals, because we all share the thread of being human, with the same color of blood and the ability to think, reason, and be self-aware. That outcry has now turned to silence, even though, by some estimates, more humans are held in slavery through human trafficking today than at any other time in the history of humankind.

Will robots continue to learn from and study humans and their evolution, with the purpose of evolving past humanity?

Figure 22-10: Robot and Human Connecting

Source: (Middleton, 2018)

We must examine history in an ethical context so that we can shape the future with a deeper understanding of the past. The speed of change in AI and autonomous systems is radically accelerating, and global upheaval is accelerating with it. What is not apparent is the pace at which authorities, responsibilities, strategic plans, and policies must change and evolve to help organizations understand their role in shaping a positive, proactive future.

The threat of unchecked technology, unmanned architecture development, and the weaponization of unmanned systems continues to evolve. Advances in unmanned architecture technology have delivered more sophisticated capabilities in cost-effective designs, lowering the barrier to entry for consumers, businesses, enemy states, and terrorist organizations alike. ISIS has demonstrated this by using commercially available unmanned systems technology as air-delivered improvised explosive devices (IEDs). Plans and supplies available on the internet can turn consumer cars and boats into autonomous vehicles capable of carrying weaponized payloads, creating havoc for military and civilian agencies that are unable to respond to these systems. As the danger to the nation and the world becomes undeniable, clear policies, laws, and governance are required.

There is currently no single U.S. federal or international enforcement agency in charge of this issue. For strategic policies, laws, and governance to be integrated successfully, policy makers and industry must embrace a leadership framework that can address potentially disruptive technologies and concepts involving an exponentially growing number of stakeholders and multi-directional interactions. Given this requirement, how do we realign our academic, governmental, and industry institutions for resilience and success as new revolutionary technologies continue to emerge simultaneously and inject additional instability into systems that are already struggling for equilibrium?

Discussion Questions

- How do world events drive the ethical discussion in autonomous and artificial intelligence arenas?

- What cyber factors should be considered, and when?

- What non-lethal options could an autonomous system choose to preserve life during an anomaly? What might be considered an anomaly?

- Do you think human ethics can be extended, programmed, understood, obeyed, and adapted into the autonomous and AI industry? Why or why not?

- What ethical dangers do you see as the world leverages more AI?

Bibliography

Abramson, E. (2016). Ethical Dilemmas in the Age of AI. Retrieved from knowmail.me: https://www.knowmail.me/blog/ethical-dilemmas-age-ai/

Anon. (2019). Saudi Arabia grants citizenship to robot Sophia. Retrieved from DW: https://www.dw.com/en/saudi-arabia-grants-citizenship-to-robot-sophia/a-41150856

Asimov, I. (1950). “Runaround.” In I, Robot (The Isaac Asimov Collection ed.). New York City: Doubleday.

Atherton, K. D. (2019). Can the Pentagon sell Silicon Valley on AI as ethical war? C4ISRNET.

Cameron, J. (Director). (1991). Terminator 2: Judgment Day [Motion Picture].

Daniel-Cornel TĂNĂ, S. (2018). The Impact of the Development of Maritime Autonomous Systems on the Ethics of Naval Conflicts. Annals: Series on Military Sciences, (2), 118-130.

FEMA. (2013). Lessons Learned from the Boston Marathon Bombings: Preparing for and Responding to the Attack. Retrieved from www.fema.gov: http://www.fema.gov/media-library-data/20130726-1922-25045-1176/lessons_learned_from_t

Fleetwood, J. (2017). Public Health, Ethics, and Autonomous Vehicles. American Journal of Public Health, 107(4), 532-537.

Fortuna, C. (2017, December 2). Autonomous Driving Levels 0–5 Implications. Retrieved from cleantechnica.com: https://cleantechnica.com/2017/12/02/autonomous-driving-levels-0-5-implications/

Hern, A. (2017, January 12). Give robots ‘personhood’ status, EU committee argues. Retrieved from The Guardian: www.theguardian.com/technology/2017/jan/12/give-robots-personhood-status-eu-committee-argues

IEEE. (2017). The IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems Announces New Standards Projects. Telecom Standards, 27(11), 4-5.

Johnson, O., et al. (2012). Ethics: Selections from Classic and Contemporary Writers. Boston, MA: Cengage Learning.

Kanowitz, S. (2019, May 15). Toward the deployment of ethical AI. Retrieved from Government Computer News: https://gcn.com/articles/2019/05/15/ethical-ai-idc.aspx?s=gcntech_200519

Knight, W. (2018). Nine charts that really bring home just how fast AI is growing. MIT Technology Review.

Mahon, J. (2012). Classical Natural Law Theory: St. Thomas Aquinas (1227-1274), the “Angelic Doctor” [Lecture, Philosophy of Law]. Retrieved from http://home.wlu.edu/~mahonj/PhilLawLecture1NatLaw.htm

Merriam-Webster, Inc. (2019). Definition of Ethics. Online: Merriam-Webster, Incorporated.

Middleton, C. (2018). SAP launches ethical A.I. guidelines, expert advisory panel. Retrieved from internetofbusiness.com: https://internetofbusiness.com/sap-publishes-ethical-guidelines-for-a-i-forms-expert-advisory-panel/

Misselhorn, C. (2018). Artificial Morality. Concepts, Issues and Challenges. Society, 55(2), 161-169.

Proyas, A. (Director). (2004). I, Robot [Motion Picture]. Hollywood, CA.

Ramzy, A., et al. (2008). Tainted-Baby-Milk Scandal in China. Time. Retrieved from http://content.time.com/time/world/article/0,8599,1841535,00.html

Santoni de Sio, F., & van den Hoven, J. (2018). Meaningful Human Control over Autonomous Systems: A Philosophical Account. Frontiers in Robotics and AI. doi:10.3389/frobt.2018.00015

Stone, Z. (2017, November 7). Everything You Need To Know About Sophia, The World’s First Robot Citizen. Retrieved from Forbes: https://www.forbes.com/sites/zarastone/2017/11/07/everything-you-need-to-know-about-sophia-the-worlds-first-robot-citizen/#1667784246fa

Urban, T. (2018). Teach Your Robots Well: Will Self-Taught Robots Be the End of Us? Retrieved from World Science Festival: https://www.worldsciencefestival.com/programs/teach-robots-well-will-self-taught-robots-end-us/

WebFinance, Inc. (2019). Definition of Ethics. Online: WebFinance, Inc.

Wong, C. (2017). Top Canadian researcher says AI robots deserve human rights. Retrieved from itbusiness.ca: https://www.itbusiness.ca/news/top-canadian-researcher-says-ai-robots-deserve-human-rights/95730

Wordpress. (2012, August 29). The True Sign of Intelligence. Retrieved from deepthinkings.wordpress.com: http://deepthinkings.wordpress.com/2012/08/29/the-true-sign-of-intelligence/

Zetter, K. (2015). So, The NSA Has An Actual SKYNET Program. WIRED (Online).

- The Three Laws of Robotics are a set of rules devised by the science fiction author Isaac Asimov. The rules were introduced in his 1942 short story "Runaround" (included in the 1950 collection I, Robot), although they had been foreshadowed in a few earlier stories. The Three Laws, quoted as being from the "Handbook of Robotics, 56th Edition, 2058 A.D.", are:
  First Law: A robot may not injure a human being or, through inaction, allow a human being to come to harm.
  Second Law: A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.
  Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.
  (Asimov, 1950) This is an exact transcription of the three laws.