7 Deception

By Professor Randall K. Nichols, Kansas State University

STUDENT OBJECTIVES[1]

Students will:

Understand the various deception methods and technologies employed against critical infrastructure assets and computer targets;

Recognize that unmanned aircraft systems (UAS) are plausible deployment agents against critical infrastructure and CBRN assets. UAS are expendable, quiet, and hard to detect, and they can act in numbers (SWARMS) across many deception domains; and

Understand that unmanned systems present a lethal deception risk that should be accounted for in security plans.

INTRODUCTION

Picture for a moment that you are watching your child play high school football in a state championship game. About 22,000 people are in attendance. Roughly 10 minutes into the second half, the crowd is yelling, cheering, and screaming for their teams, and hardly anyone notices the three medium-sized drones flying over the stands. The drones drop hundreds of small pieces of paper over the crowd and then leave the area. The notes say: “You have 15 minutes to evacuate – the next drones carry fentanyl and Semtex explosives. You are not safe. Evacuate!” Fifteen minutes later, nearly 50 drones SWARM over the stadium, dropping talcum powder and powerful firecrackers on the crowd. This terrorist scenario relies on DECEPTION to cause Panic and DISRUPTION in a crowd.[2] Its goal is to make people afraid and to make them lose faith in their local government’s ability to protect them. Drones can be the precursor!

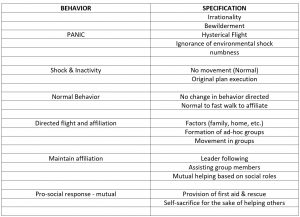

Terrorists rely on how crowds behave in a panic situation. Research on crowd behavior in panic situations (Bade, 2009) offers insight. As Table 7.1 shows, crowds in a panic do not act as crowds but as groups of individuals, each resorting to individual behavior at the expense of all others. Their behavior reflects both Panic Theory and Urgency Theory.

Panic Theory embraces four principles:

- It deals primarily with the factors that may trigger panic during emergencies;

- Its basic premise is that when people perceive danger, their usual conscious personalities are often replaced by unconscious personalities, which leads them to act irrationally;

- Hysterical flight; and

- Ignorance of the environment. (Bade, 2009)

Urgency Theory embraces two main principles:

- Human blockages of exit space depend on people’s level of urgency to exit; and

- Three crucial factors could lead to this situation:

- the severity of the penalty and consequence for not exiting quickly,[3]

- the time available to exit, and

- the group size.

A problem arises when the distribution of urgency levels includes a large number of people with a high urgency to leave, for example, when too many people try to exit quickly at the same time through limited exits. (Bade, 2009)
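Urgency Theory’s central claim, that blockages appear when too many people share a high urgency to leave through limited exits, can be illustrated with a deliberately simple Python sketch. It is not Bade’s model. The urgency scores, the rush threshold, and the per-interval exit capacity are all hypothetical parameters, and the sketch ignores the time available and the group size.

```python
from dataclasses import dataclass


@dataclass
class ExitScenario:
    # All three fields are hypothetical inputs for illustration only.
    urgencies: list[float]  # per-person urgency score, 0.0 (calm) to 1.0 (frantic)
    threshold: float        # score above which a person rushes the exits at once
    exit_capacity: int      # people the exits can pass in one time interval


def excess_demand(s: ExitScenario) -> int:
    """Count high-urgency people the exits cannot pass in the first interval.

    A positive result is this toy model's proxy for a human blockage:
    more people try to leave at the same moment than the exits can handle.
    """
    rushing = sum(1 for u in s.urgencies if u > s.threshold)
    return max(0, rushing - s.exit_capacity)


calm = ExitScenario([0.2] * 900 + [0.9] * 100, threshold=0.7, exit_capacity=300)
panicked = ExitScenario([0.2] * 100 + [0.9] * 900, threshold=0.7, exit_capacity=300)
```

Take a crowd of 1,000 people facing an exit capacity of 300. If 100 of them score above the 0.7 threshold, there is no excess. If 900 of them are high-urgency, the excess is 600, which is this model’s stand-in for a blockage.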

Table 7.1 Characteristics of Emergency Behavior

Source: (Bade, 2009) Photo by Author from original manuscript to meet PB guidelines.

Two well-known examples of Panic Theory and Urgency Theory in action are 1) the Station nightclub fire in Rhode Island in 2003, which killed about 100 people and injured 200 more (CBS News, 2021 updated),[4] and 2) the Accra Sports Stadium disaster of May 9, 2001[5] (Chrockett, 2014).

The author has written on panic attack responses, describing how to respond before full-blown panic arrives. (Nichols R. K., short-circuiting-simple-panic-attacks-quick-guide-out, 2018)

VULNERABILITIES OF MODERN SOCIETIES TO UAS ATTACK

According to (Dorn, 2021), the federal government has yet to acknowledge the threats posed by UAS, and it has barely noticed the capabilities of USS and UUS or the threat they pose as platforms. Present-day unmanned systems face a trade-off: their small size lowers the likelihood of detection, but it also limits the lethality of any single unmanned system. If an unmanned system successfully attacks a congested target, such as a ballgame, it is unlikely to kill more than a few fans. Even so, the attack creates fear among citizens. Terrorism has been brought to their doorsteps, and they become uncertain of the government’s ability to prevent such attacks and protect them. (Dorn, 2021) A more capable course of action combines manned and unmanned systems operating as a team (MUM-T), or unmanned systems in SWARM mode, to deliver payloads. (Nichols & Mumm, Unmanned Aircraft Systems in the Cyber Domain, 2nd Edition., 2019) If terrorists gain access to CBRNE agents or weapons, this approach will present a significant long-term threat to the U.S.

(Kallenborn & Bleck, 2018) showed that UAS could potentially substitute for CBRN agents in an attack. A UAS SWARM could carry explosives. If CBRN agents are used in the attack, a SWARM would also be an ideal platform to deliver those agents to a specific target or to disperse them widely.

(Nichols & al., 2020) (Nichols & Sincavage, Disruptive Technologies with Applications in Airline, Marine, and Defense Industries, 2021) suggest that UAS can be agents of deception and that their payloads can be used to create Panic.

BASIC TERMINOLOGY

The study of deception has many roots, and its use has been well researched and published. The best text on deception and counter-deception principles is (Bennett & Waltz, 2007). The idea of using UAS as a deployment vehicle for deception is new and is credited to the authors. (R. K. Nichols & et al., 2022) The art and science of deception has its own terminology to distinguish the principles, means, and effects of deception activities. The basic DoD-accepted terms are as follows (Daniel & Herbig, 1982):

Denial includes those measures designed to hinder or deny the enemy the knowledge of an object by hiding or disrupting the means of observation of the object. The basis for Denial is dissimulation, the concealing of truth. (Daniel & Herbig, 1982)

Deception includes measures designed to mislead the enemy by manipulation, distortion, or falsification of evidence to induce him to react in a manner prejudicial to his interests. The goal of deception is to make an enemy more vulnerable to the effects of weapons, maneuvers, and operations of friendly forces. The basis for deception is simulation, the presence of that which is false. (Daniel & Herbig, 1982)

Denial and Deception (D&D) integrates both processes to mislead an enemy’s intelligence capability. The acronym C3D2 is synonymous with D&D; it refers to cover, concealment, camouflage, denial, and deception. (Daniel & Herbig, 1982)

Deception means are those methods, resources, and techniques that can convey information to the deception target. DoD characterizes means as:

Physical means: activities and resources used to convey or deny selected information to a foreign power. (Examples are military operations, reconnaissance, force movements, dummy equipment, logistical actions, and test and evaluation activities.)

Technical means: resources and operating techniques used to convey or deny selected information through the deliberate radiation, alteration, absorption, or reflection of energy; the emission or suppression of chemical or biological odors; and the emission or suppression of nuclear particles.

Administrative means: resources, methods, and techniques used to convey or deny oral, pictorial, documentary, or other physical evidence to a foreign power.

The deception target is the adversary decision-maker with the authority to make the decision that will achieve the deception objective, which is the desired result of the deception operation.

Channels of deception are the information paths by which deception means are conveyed to their targets. (Daniel & Herbig, 1982)

PERSPECTIVES OF DECEPTION

(Bennett & Waltz, 2007) present some 60 pages of deception research. Two models of deception apply to the UAS environment. (Gerwehr & Glenn, 2002) present three perspectives on deception, summarized in Table 7.2.

Table 7.2 Three Perspectives on Deception

| Level of Sophistication | Effect Sought | Means of Deception |
|---|---|---|
| Static: Deceptions that remain static regardless of the state, activities, or histories of either the deceiver or the target. | Masking: Concealing a signal. Ex: camouflage, concealment, and signature reduction. | Morphological: The deception is primarily a matter of substance, shape, coloration, or temperature. |
| Dynamic: Deceptions that become active under specific circumstances. The ruse itself and its associated trigger do not change over time or vary significantly by circumstance or activity. | Misdirecting: Transmitting an unambiguous false signal. Ex: feints, demonstrations, decoys, dummies, disguises, and disinformation.[6] | |
| Adaptive: Deceptions that are dynamic and whose trigger or ruse can be modified with experience; they improve with trial and error. | | Behavioral: The deception is primarily a matter of implementation or function, such as timing, location, or patterns of events or behavior. |
| Premeditative: Deceptions designed and implemented based on experience, knowledge of the deceiver’s capabilities, and the target’s sensors and search strategies. | Confusing: Raising the noise level to create uncertainty or paralyze the target’s perceptual capabilities. Ex: voluminous communication traffic, conditioning, and random signals or behavior. | |

Source: (Gerwehr & Glenn, 2002)

DECEPTION MAXIMS

One important result of the CIA’s ORD Deception Research Program was a paper on deception maxims (MathTech, Inc, 1980). Table 7.3 summarizes all ten maxims, which are covered in detail in (Bennett & Waltz, 2007).

Table 7.3 Deception Maxims

| MAXIM | RESULT |
|---|---|
| #1 Magruder’s Principle | It is easier for the target to maintain a preexisting belief, even when presented with information that would be expected to change that belief. |
| #2 Limitations to human information processing | Limitations to human information processing can be exploited in designing deception schemes; examples include the law of small numbers and susceptibility to conditioning. |
| #3 The multiple forms of surprise | Surprise can be achieved in different forms: location, strength, intention, style, and timing.[7] |
| #4 Jones’ Lemma | Deception becomes more difficult as the number of channels available to the target increases. However, the greater the number of those channels the deceiver controls, the greater the likelihood that the deception will be achieved![8] |
| #5 A choice among types of deception | The objective of the deception planner should be to reduce the ambiguity in the mind of the target, making the target more certain of a particular falsehood rather than less certain of the truth. |
| #6 Axelrod’s contribution: the husbanding of assets | There are circumstances in which deception assets should be husbanded until they can be put to fruitful use, despite the costs of maintaining them and the risk of exposure. |
| #7 A sequencing rule | Deception activities should occur in a sequence that prolongs the target’s false perceptions of the situation for as long as possible. |
| #8 Importance of feedback | Accurate feedback from the target increases the deception’s likelihood of success. |
| #9 The Monkey’s Paw | The deception may produce subtle and unwanted side effects. Deception planners should be sensitive to this possibility and attempt to minimize them. |
| #10 Care in designing the planned placement of deceptive material | Great care must be taken when designing schemes to leak notional plans. Apparent windfalls are subject to close scrutiny and are often disbelieved. |

Source: (MathTech, Inc, 1980)

Note Maxims #1, #4, and #9 in particular. They are the key to deploying UAS for deception objectives.[9]
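Maxims #1 and #4 can be made concrete with two small calculations. The Python sketch below is an illustrative assumption and is not part of the MathTech paper.

- Magruder’s Principle is modeled as a Bayesian update in odds form. Under this model, a strong prior barely moves when contrary evidence arrives.
- Jones’ Lemma is modeled by treating each channel the deceiver does not control as an independent, hypothetical chance of exposing the truth.

```python
def bayes_update(prior: float, likelihood_ratio: float) -> float:
    """Posterior P(H|E) from prior P(H) and LR = P(E|H) / P(E|not H).

    Requires 0 < prior < 1. An LR below 1 is evidence against H.
    """
    prior_odds = prior / (1.0 - prior)
    posterior_odds = prior_odds * likelihood_ratio
    return posterior_odds / (1.0 + posterior_odds)


def deception_survives(p_reveal: list[float]) -> float:
    """Probability that no uncontrolled channel exposes the truth.

    p_reveal holds one hypothetical exposure probability per channel the
    deceiver does NOT control; channels are assumed to be independent.
    """
    survive = 1.0
    for p in p_reveal:
        survive *= 1.0 - p
    return survive
```

Consider a target who holds a false belief at 0.95 and then sees evidence that halves the odds (LR = 0.5). That target still holds the belief at about 0.90. An undecided target at 0.5 drops to about 0.33. This is Magruder’s Principle in miniature.

For Jones’ Lemma, take ten uncontrolled channels, each with a 10% chance of exposure. The deception then stays intact only about 35% of the time. If the deceiver controls eight of those channels, the figure rises to 81%. Read from the defender’s side, every independent channel the deceiver cannot control adds another chance of exposure.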

SURPRISE

Jock Haswell, Michael Dewar, and Jon Latimer (all former British officers) have written about the purpose, principles, and techniques of deception. All three emphasize that the goal of deception in warfare is surprise. Five principles are common to their writings:

- Preparation: Successful deception operations require careful intelligence preparation to develop detailed knowledge of the target and the target’s likely reaction to each part of the deception.

- Centralized control and coordination: Uncoordinated deception operations can confuse friendly forces (or terrorists, depending on POV) and reduce or negate the effectiveness of the deception.

- Credibility: The deception should present a mix of false and real information, and a pattern of events, that aligns with the target’s preconceptions and expectations.

- Multiple information channels: False information must be presented to the target through as many channels as possible without arousing the target’s suspicion that the information is too good to be true. Corroboration across channels plays on the target’s confirmation bias.

- Security: Access to the deception plan must be carefully restricted. Information released to the target must be revealed in such a way that the absence of normal security precautions does not arouse the target’s suspicions. (Haswell, 1985)(Dewar, 1989)(Latimer, 2001)

Four Fundamental Principles

Four Fundamental Principles form the foundation of deception theory in general. These principles relate to how the target of deception acquires, registers, processes, and ultimately perceives data and information about their world. They are Truth, Denial, Deceit, and Misdirection. (Bennett & Waltz, 2007) See Table 7.4.

- Truth: All deception works within the context of what is true.

- Denial: Denying the target access to the truth is the prerequisite to all deception.

- Deceit: All deception requires deceit.

- Misdirection: Deception depends on manipulating what the target registers. See Table 7.4.

Table 7.4 Deception

| | Truth | Denial | Deceit | Misdirection |
|---|---|---|---|---|
| Principle | All deception works within the context of what is true. | Denying the target access to the truth is the prerequisite to all deception. | All deception requires deceit. | Deception depends on manipulating what the target registers. |
| Function | Provides the target with real data and accurate information. | Blocks the target’s access to real data and accurate information. | Provides the target with false data and wrong or misleading information. | Determines where and when the target’s attention is focused: what registers. |

Each of the four principles influences how the target registers, processes, and perceives data and information and, ultimately, what the target believes and does.

Deception is the deliberate attempt to manipulate the perceptions of the target. If deception is to work, there must be a foundation of accurate perceptions that can be manipulated. All deception works within the context of what is true (or honest). (Mitchell, 1986, p358)

Denial and deception (D&D) is the universal description for strategic deception. Denial blocks the target’s access to real data and accurate information. It can be considered a standalone concept and is the linchpin of deception. Other terms for denial include security, secrecy, cover, dissimulation, masking, and passive deception. Denial protects the deceiver’s real capabilities and intentions. (Bennett & Waltz, 2007)

All deception requires deceit. The methods of denial (secrecy, concealment, and signal reduction) reduce or eliminate the real signals that the target needs to form accurate perceptions of a situation. Deceit supplies the false data and misleading information that take their place. (Dewar, 1989)

Misdirection is the most fundamental principle for all practitioners of magic. In magic, misdirection directs the audience’s attention toward the effect and away from the method that produces it.

Three Examples of UAS Attacks: Destruction, Disruption, or Deception (D/D/D)

(Dorn, 2021) presents three attacks on critical infrastructure that could be developed for Destruction, Disruption, or Deception (D/D/D). Depending on the terrorists’ objectives, the UAS payloads could be structured to deliver weapons for any of the three Ds.

- The easiest objective, and the best return on the terrorists’ investment, would be deception, which would test defenses for ISR purposes.
- The moderate case would be disruption of services and personnel.
- The worst case, and the best defended, would be the use of actual CBRN agents. Here the chances of lethal success would be lowest and the attackers’ exposure highest.

It all depends on the attackers’ objectives and how lethal their plans are.

Attack 1: Ronald Reagan National Airport (RRNA). DHS designated the area around RRNA a defend-at-all-costs asset in metropolitan Washington, DC. Multiple large UAS (called motherships) appear, each carrying multiple smaller UAS capable of independent action against multiple targets. The motherships follow well-established low-level transit routes (LLTR) to blend in with aircraft traffic in and out of RRNA and Joint Base Andrews. The confusion and inaction of FAA controllers would last long enough for the motherships to divert and drop their loads of smaller UAS/drones. What distinguishes the D/D/D cases is the payload: CBRNE agents, talcum powder and firecrackers, or paper leaflets for a PSYOPS deception. In all three, the target will suffer Panic, and media coverage will guarantee a victory for the terrorists. (Dorn, 2021)

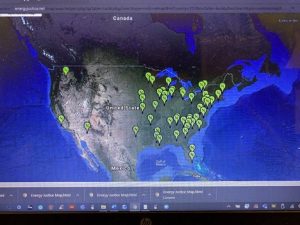

Attack 2: Multiple UAS attack multiple nuclear power plants on the East Coast.

By launching multiple UAS in a SWARM formation, terrorists would conduct overflights of essential plants within a given region and overwhelm first responder and LEO assets. The dispersal of powders or liquids would cause Panic only if plant workers observed it. Assume a fixed dispersal unit and a CBRN agent payload. Plant workers would then walk or run through the contaminated area (parking lot, facility grounds) and carry the agents into the plant. Under standard operating procedure (SOP), the plant would be shut down once a radiation leak alarm was triggered, and all personnel would be evacuated.

Figure 7.1 shows the approximate locations of 99 operating nuclear power plants within the U.S. These 99 nuclear plants provide 19.7% of the U.S. daily electrical requirements. The two prime targets would be the dome-shaped structures and the outside cooling towers. It is probable that a SWARM attack on multiple nuclear power plants would succeed. (Dorn, 2021) The nuclear reactor itself is safe, guarded by ten-foot-thick steel-reinforced concrete walls and a dome. However, the plant’s turbines, generators, condensers, spent fuel rod facilities, and cooling towers are not built to the same standard. (Dorn, 2021) suggests “that there are no tactics, techniques, and technologies to deter, deny, disrupt, or destroy the threat that UAS poses to the nuclear explosive ordinance and mitigating the effects of a UAS overflight in which CBRN agents were released on the employees and compound.”

The authors disagree. Classified systems are not covered in this book but certainly exist. Non-classified defenses for critical infrastructure attacks of this sort are covered in detail in (Nichols R. K., 2020). New C-UAS systems are coming on stream every day from multiple vendors and interests as we write this chapter. The scenario is interesting but not so bleak. (Nichols & Sincavage, Disruptive Technologies with Applications in Airline, Marine, and Defense Industries, 2021) (Nichols R. K., 2020)

Attack 3: An expansion of the author’s introductory stadium attack. Multiple UAS or a SWARM equipped with several grams of explosives, CBRN agents, fentanyl, trichloroisocyanuric acid, or carfentanil (extremely lethal in small amounts) could disperse them from above an open stadium filled with spectators during a sporting event. The UAS could be launched from multiple briefcases, backpacks, large purses, or vehicles outside the stadium; a safe distance is a plus for the terrorists. The same agents could be launched from land, or from a USS or UUV on the water, while passing outside the stadium. (Dorn, 2021)

All three of the above attacks would involve D & D operations to misdirect the defending forces.

Taxonomy of Technical Methods of Deception

There are three basic toolsets to implement deception objectives:

- Methods to influence sensing channels, either human senses directly or remote sensors employed to extend the human senses;

- Methods employed to deceive signal and computer processes; and

- Deceptive methods employed in the human intelligence (HUMINT) trade to exploit intelligence organizations’ information channels. (Bennett & Waltz, 2007)

See Table 7.5 for more detail.

Figure 7.1 Operating Nuclear Power Plants within the U.S.

Source: (EnergyJustice.net, 2022)

Table 7.5 Categories of Deception Channels and Methods

| Tool Set | Channels Manipulated | True / False | Reveal (in deception matrix) | Conceal (in deception matrix) |
|---|---|---|---|---|
| Technical sensor CC&D (camouflage, concealment, deception). Governing principles: physics; manipulate physical phenomena and the electromagnetic spectrum. | Physical sensors (technical and human senses) | True | Reveal limited real units and activities to show strength and to influence enemy sensor coverage and sensitivities. Reveal the true commercial capability of dual-use facilities to provide true “cover” that misdirects from noncommercial weapons use. | Camouflage paint and nets; radar nets; thermal, audio, and radar signature suppression; activities in underground facilities to avoid surveillance; hidden dual-use facilities. |
| | | False | Thermal, radar, and audio signature simulation; physical vehicle and facility decoys. | Maintain OPSEC on existing methods and the extent of CC&D capabilities (equipment, nets, decoys, ECM support). |
| Signal and IS D&D. Governing principles: logic and game theory; manipulate information and the timing of information. | Abstract symbolic representations of information | True | Reveal limited alluring information on honeypots (deceptive network servers) to lure attackers and conduct sting operations. | Cryptographic and steganographic hiding of messages; polymorphic (dynamic disguise) worm code or cyber weapons (Nichols & Ryan, 2000). |
| | | False | Communication traffic simulation; reveal false flag/feed information on honeypots; decoy software agents and traffic, which also apply to decoy UAS (Nichols & Sincavage, Disruptive Technologies with Applications in Airline, Marine, and Defense Industries, 2021). | Maintain OPSEC on known opponent vulnerabilities and penetration capabilities. |
| Human and media channels. Governing principles: psychology; manipulate human trust, perception, cognition, emotion, and volition. | Human interpersonal interaction, individual and public | True | Reveal valid sources of classified (but non-damaging) information to provide bona fides to double agents. | Agent channel cover stories conceal the existence of agent operations; covert (black) propaganda organizations and media channels hide the true source and funding of operations. |
| | | False | False reports, feeds, papers, plans, and codes; false agent channels to distract counterintelligence. | Maintain OPSEC on agent operations; monitor assets to validate productivity, reliability, and accuracy. |

Source: Modified from Table 4.1, p. 114 of (Bennett & Waltz, 2007)

Table 7.5 lays out a wide variety of channels and methods for deception. UAS can interface with much of the matrix to support active deployment logistics. Consider how UAS might be used with each deception category. UAS are best suited to two of them: CC&D sensory channels and D&D signal channels.
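The honeypot entries in Table 7.5 are the deception method in the matrix that defenders most often turn against intruders. A lightweight variant is the honeytoken: a decoy credential that no legitimate user should ever present. Any attempt to use it is therefore a high-confidence alert.

The Python sketch below is a minimal illustration under that assumption. The account names and the alert record are hypothetical and are not drawn from any particular product.

```python
from dataclasses import dataclass, field


@dataclass
class HoneytokenMonitor:
    # Hypothetical decoy usernames planted where only an intruder would find them
    # (for example, in a fake configuration file on a decoy server).
    decoys: set[str]
    alerts: list[tuple[str, str]] = field(default_factory=list)

    def check_login(self, username: str, source: str) -> bool:
        """Return True and record (username, source) if a decoy credential is used."""
        if username in self.decoys:
            self.alerts.append((username, source))
            return True
        return False


monitor = HoneytokenMonitor({"svc_backup_old", "admin_test"})
```

Legitimate users never touch the decoys, so a monitor like this should produce very few false positives. That property is what makes revealing alluring but fictitious information useful to the defender.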

Technical Sensor Camouflage, Concealment, and Deception (CC&D)

UAS fit well with the first category of manipulated channels. (Nichols R. K., Chapter 18: Cybersecurity Counter Unmanned Aircraft Systems (C-UAS) and Artificial Intelligence, 2022) Technical camouflage, concealment, and deception (CC&D) have a long history in warfare, hiding military personnel and equipment from long-range human observation within the visible spectrum. Modern CC&D addresses a variety of electro-optical, infrared, and radar sensors that span the electromagnetic spectrum. (Adamy D. L., EW 104: EW against a new generation of threats, 2015) (Adamy D. L., EW 103: Tactical Battlefield Communications Electronic Warfare, 2009) (Adamy D. L., Space Electronic Warfare, 2021) From a military POV, UAS may be used to prevent detection by surveillance sensors, then to deny or disrupt targeting by weapons systems, and ultimately to disrupt precision-guided munitions. Remote sensors detect a target by discriminating its signature from the natural background. The focus of CC&D is to use physical laws to suppress the physical phenomena and observable signatures that make this possible. (Bennett & Waltz, 2007)

(Bennett & Waltz, 2007) define the elements of CC&D as follows:

Camouflage uses natural or artificial material on personnel, objects, or tactical positions to confuse, mislead, or evade the enemy. The primary phenomena suppressed by camouflage include:

- A spectral signature, which distinguishes a target by its contrast from background spectra or shadows,

- A spatial signature, which distinguishes the spatial extent, shape, and texture of the object from the natural background objects,

- Spatial location relative to the background context, which can identify a target; and

- Movement, which distinguishes an object from the natural background and allows detection by moving target indicator (MTI) sensors that discriminate the phase shift of reflected radar or laser energy.

Concealment is the protection from observation or surveillance. It can include blending, where parts of the scene are combined to render the parts indistinguishable. It may also include cover measures to protect a person, plan, operation, formation, or installation from enemy ISR and information leakage.

Deception performs the function of misdirection in two ways. It modifies signatures to prevent recognition of the true identity or character of an asset or activity. It also provides spoofed (false) or decoy signatures to draw sensors’ attention away from the real assets or activities.
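The spectral-signature contrast that camouflage suppresses can be expressed with a common detection metric, the contrast-to-noise ratio (CNR). CNR is the difference between mean target intensity and mean background intensity, divided by the background’s variability.

The Python sketch below assumes intensities sampled in a single sensor band and a hypothetical detection threshold of 3. Real sensors use more elaborate detectors, so treat this only as an illustration of the quantity camouflage tries to drive down.

```python
import statistics


def contrast_to_noise(target: list[float], background: list[float]) -> float:
    """|mean(target) - mean(background)| / sample stdev(background)."""
    noise = statistics.stdev(background)  # needs at least two background samples
    return abs(statistics.mean(target) - statistics.mean(background)) / noise


def detectable(target: list[float], background: list[float], threshold: float = 3.0) -> bool:
    """Hypothetical detector: declare a target when CNR exceeds the threshold."""
    return contrast_to_noise(target, background) > threshold


background = [10.0, 12.0, 11.0, 9.0, 13.0]  # illustrative background samples
exposed = [20.0, 21.0, 19.0]                # uncamouflaged target
camouflaged = [11.5, 12.0, 11.0]            # target blended toward the background
```

With these sample values, the exposed target’s CNR is about 5.7 and the camouflaged target’s is about 0.3, so only the first crosses the threshold. Camouflage succeeds by shrinking the numerator, bringing the target’s mean toward the background’s. Clutter that inflates the denominator works toward the same end.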

UAS can approach from above at night without lights, making little sound, flying in waypoint navigation mode, and carrying lethal payloads. Used this way, they are a significant penetration element with built-in CC&D. (Nichols & Mumm, Unmanned Aircraft Systems in the Cyber Domain, 2nd Edition., 2019)

UAS play an interesting part in sensor deception, in suppressing the signals of ground targets, and in blending their signatures into background clutter. UAS can use active CC&D to deceive radar and electro-optical sensors in the air. At sea, UAS can deceive sonar operations or inject cyber weapons into ship navigation systems to spoof location fixes. (Nichols & Sincavage, Disruptive Technologies with Applications in Airline, Marine, and Defense Industries, 2021) (Bennett & Waltz, 2007)

Before we detail the Signal and IS D&D relationships, we review the Cyber Electromagnetic Activities (CEA) environment.

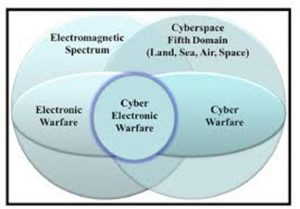

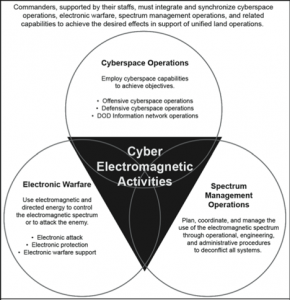

CEA

Cyber is a closely related discipline that intersects with electronic warfare (EW). There are distinct parallels and intersections between Cyber and EW; for instance, the sister of signal spreading techniques is encryption. Figure 7.2 shows the intersection of Cyber, EW, and Spectrum Warfare, designated as Cyber Electromagnetic Activities (CEA) (Army, FM 3-38 Cyber Electromagnetic Activities, 2014). Figure 7.3 puts CEA in the perspective of total war (Askin, 2015). Note that CEA is characterized by signals and communications. Strategic sensory deception protects large-scale military activities and large-scale, long-term, high-value national assets (Bennett & Waltz, 2007). Examples of such assets include WMDD programs, advanced research and production facilities, related construction and testing activities, and proliferation activities.
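The parallel between signal spreading and encryption is easiest to see in direct-sequence spread spectrum (DSSS). The transmitter multiplies each data bit by a pseudo-noise chip sequence. Only a receiver that knows the same sequence can correlate the spread energy back into bits, much as only a key holder can decrypt.

The Python sketch below is a toy under stated assumptions. The 7-chip sequence is arbitrary rather than a real PN code, and carrier modulation, synchronization, and realistic noise are all omitted.

```python
CHIPS = [1, -1, 1, 1, -1, -1, 1]  # arbitrary illustrative ±1 chip sequence


def spread(bits: list[int], chips: list[int] = CHIPS) -> list[int]:
    """DSSS spreading: map each bit to ±1 and multiply it by every chip."""
    out: list[int] = []
    for b in bits:
        symbol = 1 if b else -1
        out.extend(symbol * c for c in chips)
    return out


def despread(signal: list[float], chips: list[int] = CHIPS) -> list[int]:
    """Correlate each chip-length block with the sequence; decide each bit by sign."""
    n = len(chips)
    bits = []
    for i in range(0, len(signal), n):
        corr = sum(s * c for s, c in zip(signal[i:i + n], chips))
        bits.append(1 if corr > 0 else 0)
    return bits


data = [1, 0, 1, 1]
tx = spread(data)
noisy = [-tx[0]] + tx[1:]  # flip one chip to mimic a channel error
```

Flipping a single chip still leaves every bit recoverable, because that block’s correlation drops only from 7 to 5. This margin is the processing gain. In real systems with long codes, it makes spread signals hard to jam and, for an observer without the sequence, harder to detect or interpret.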

Information Operations (IO)

There are two sides to the coin when discussing IO and deception with UAS/UUV as the deployment mechanisms. On one side, UAS have many cyber vulnerabilities that can be exploited. These are covered in detail in (Nichols R. K., Chapter 18: Cybersecurity Counter Unmanned Aircraft Systems (C-UAS) and Artificial Intelligence, 2022). On the other side, UAS can be considered the deployment agent for deception’s broad network information channel, and most of the methods and attack tools can exploit the network channels. Deception in IO includes the following (DoD-02, 2018):

Communications electronic warfare (CEW). CEW protects and attacks communication networks. Signal deception is employed to intercept, capture, and manipulate the signal envelopes and internal contents of free-space communications. (Poisel, 2002)

Computer network exploitation (CNE). CNE employs intelligence operations to:

- Obtain information resident in files of threat automated information systems (AIS);

- Gain information about potential vulnerabilities; and

- Access critical information resident within foreign AIS that benefits friendly forces.

CNE operations employ deceit to survey, penetrate, and access targeted networks and systems. (CJCSI, 2022)

Computer network attack (CNA). CNA employs operations using information systems to disrupt, deny, degrade, or destroy information resident in computers and computer networks, or the computers and networks themselves. (USAF, January 4, 2002)

CNA also broadly covers SCADA attacks on UAS, GPS, and GNSS systems (Nichols & Mumm, Unmanned Aircraft Systems in the Cyber Domain, 2nd Edition., 2019). CNA is also used in spoofing attacks on vessels at sea. (Humphreys & al., 2008) (Nichols & al., 2020)
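On the defensive side, one simple layer against the GNSS spoofing of vessels cited above is a kinematic plausibility check. Successive position fixes that imply a speed the platform cannot reach are flagged as suspect.

The Python sketch below assumes that fixes arrive as (time in seconds, latitude, longitude) tuples, and it uses a hypothetical maximum speed. It is an illustration, not a fielded detector. A spoofer that shifts the reported position gradually would stay under the speed gate, so this check complements signal-level defenses rather than replacing them.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def suspicious_fixes(fixes: list[tuple[float, float, float]], max_speed_mps: float) -> list[int]:
    """Return indices of fixes whose implied speed from the previous fix is implausible.

    fixes: (time_s, lat_deg, lon_deg) tuples in time order.
    A non-increasing timestamp is also flagged. After one spoofed jump,
    the jump back to the true track is flagged as well.
    """
    flagged = []
    for i in range(1, len(fixes)):
        t0, lat0, lon0 = fixes[i - 1]
        t1, lat1, lon1 = fixes[i]
        dt = t1 - t0
        if dt <= 0 or haversine_m(lat0, lon0, lat1, lon1) / dt > max_speed_mps:
            flagged.append(i)
    return flagged


track = [(0, 40.0, -70.0), (60, 40.001, -70.0), (120, 40.5, -70.0)]  # illustrative fixes
MAX_SPEED_MPS = 15.0  # hypothetical ceiling, roughly 29 knots for a surface vessel
```

In the sample track, the second fix implies about 1.9 m/s and passes. The third fix implies a jump of roughly 55 km in one minute and is flagged.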

In the CEW, CNE, and CNA domains, deception is applied to exploit an existing vulnerability, for example to:

- 1) Spoof an IP address by directly exploiting the protocol’s lack of source authentication; or

- 2) Exploit a buffer overflow vulnerability to insert code that enables subsequent access.

Deception can also induce a vulnerability in a system. Examples include causing a network firewall misconfiguration that enables access, or escalating privileges from unauthorized to root access. CNA attackers use deceit against both human and computer assets; the essential elements are invading trust or destroying integrity. (Bennett & Waltz, 2007)
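The first example works because IP does not authenticate source addresses. A standard network-side countermeasure is ingress filtering, as described in IETF BCP 38 (RFC 2827). An edge router drops any packet whose source address does not belong to the prefixes legitimately assigned behind the interface on which the packet arrived.

The Python sketch below shows only that decision, using the standard-library `ipaddress` module. The prefixes are documentation ranges chosen for illustration.

```python
import ipaddress


def passes_ingress_filter(src_ip: str, allowed_prefixes: list[str]) -> bool:
    """BCP 38-style check: accept a packet only if its source address falls
    inside a prefix assigned to the network behind the arrival interface.

    An IPv4 address tested against an IPv6 prefix (or vice versa) simply
    does not match that prefix.
    """
    addr = ipaddress.ip_address(src_ip)
    return any(addr in ipaddress.ip_network(p) for p in allowed_prefixes)


CUSTOMER_PREFIXES = ["198.51.100.0/24", "2001:db8:42::/48"]  # illustrative documentation prefixes
```

Filtering at the edge helps only where the operators nearest the attacker deploy it, so it does not stop every forged packet. It does, however, remove the simplest form of address spoofing.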

UAS are often used as the deployment vehicle for cyberweapons and CNA. (Nichols & al., 2020) Table 7.6 shows computer network operations (CNO) deception methods, organized by deception matrix quadrant, using the terminology adopted by the intelligence community.

Table 7.6 Representative CNO Deceptive Operations

| Deception Matrix Quadrant | Deceptive Mechanism | Description / Example |
|---|---|---|
| Conceal facts (dissimulation) | Cryptography | Openly hide A by an encryption process protected by a public or private key (Nichols R. K., ICSA Guide to Cryptography, 1999) |
| | Steganography | Secretly hide A within open material, protected by a secret hiding process and private key (Wayner, 2008) |
| | Trojan or backdoor concealment | Hide C within A: conceal malicious code within a valid process; dynamically encrypt code (polymorphic) or wrap code while it is not running in memory to avoid static signature detection; reduce trace logs (Skoudis, 2004) |
| Reveal fiction (simulation) | Masquerade (decoy) | Present C as B to A: spoof an IP address or replay captured authentication information (Skoudis, 2004) |
| | Buffer overflow | Present C as B to A: cause a service A to execute code C while appearing to request B, by exploiting a vulnerability in the service (Skoudis, 2004) |
| | Session hijack | Capture session information or credentials from B and present A as B (Skoudis, 2004) |
| | Session co-intercept | Intercept and replay security-relevant information to gain control of the session, channel, or process; co-opt a browser before a user can access it (Schneier, 1995) |
| | Man-in-the-Middle (MIM) | Present C to B as A, then C to A as B; establish trusted links and control the information exchange (Schneier, 1995) |
| | Honeypot / Honeynet | Present C as a valid service to lure unauthorized users, then track and monitor their activity (Rowe, 2004) |
| | Denial of Service (DoS) | Request excessive services from A, or issue false requests from distributed hosts, to clog the system (R.K. Nichols & Lekkas, 2002) |
| | Reroute | Route traffic intended for A to B: control routing information to intercept, disrupt, or deny traffic requests (Skoudis, 2004) |
| Conceal fiction | Withhold operational deception capabilities | Maintain COMSEC, OPSEC, and TRANSEC to protect CNA and CND capabilities |
| Reveal fact (selective) | Selective disclosure and conditioning | Publish limited network capabilities to reduce attacker sensitivity |

Note: The table gives general descriptions of actions on computer hosts, services, or servers A, B, and C. Each method cites one reference; there are many more in each category, and they will certainly be updated as the field moves forward.

Source: Modified from Table 4.5 p 124 of (Bennett & Waltz, 2007)
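The honeypot row of Table 7.6 rests on a simple idea: a decoy has no legitimate users, so any interaction with it is suspicious by construction. A lightweight variant is the honeytoken, a decoy credential planted where only an intruder would find it. The sketch below, using hypothetical account names, flags any login that touches a decoy account. It illustrates the detection logic only, not a full honeypot or honeynet.

```python
from dataclasses import dataclass, field

@dataclass
class HoneytokenMonitor:
    # Decoy accounts that no legitimate user or service ever uses (hypothetical).
    decoys: set
    alerts: list = field(default_factory=list)

    def check_login(self, username: str, source_ip: str) -> bool:
        """Record an alert and return True if the login touches a decoy."""
        if username in self.decoys:
            self.alerts.append((username, source_ip))
            return True
        return False

monitor = HoneytokenMonitor(decoys={"svc_backup_old", "admin2"})
monitor.check_login("alice", "10.0.0.5")            # ordinary user: no alert
monitor.check_login("svc_backup_old", "10.0.0.99")  # decoy touched: alert
print(monitor.alerts)  # [('svc_backup_old', '10.0.0.99')]
```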

Figure 7.2 Cyber Electromagnetic Activities

Source: (Army, FM 3-38 Cyber Electromagnetic Activities, 2014)

Figure 7.3 CEA / CEW in the view of Total War

Source: (Askin, 2015)

Sensor deception activities in the CEW sphere are designed to deny and deceive (D&D) national technical means such as space reconnaissance and surveillance, global fixed sensor detection networks, and clandestine sensors, thwarting intelligence discovery and analysis.

Signal and Information Systems (IS) Denial and Deception

UAS are exceptionally well suited to Signal and Information Systems Denial & Deception (D&D) operations. This category of technical methods seeks to deceive the information channel provided by electronic systems. These methods issue deceptive signals and processes that influence automated electronic systems rather than the sensors of physical processes. (Bennett & Waltz, 2007) UAS/UUV are the new linchpins for electronic warfare (EW) and cyberwarfare (CW) in the air and at sea. (Nichols & Mumm, Unmanned Aircraft Systems in the Cyber Domain, 2nd Edition., 2019) [10]

This is the second category of technical methods (Table 7.5). Here, deception involves manipulating signals and symbols to defy logical processing. (Bennett & Waltz, 2007)

Electronic Warfare (EW)

The subject of EW is covered in depth by one of my most revered mentors, David Adamy, in his EW series. (Adamy D., EW 101: A First Course in Electronic Warfare, 2001) (Adamy D. L., EW 102: A Second Course in Electronic Warfare, 2004) (Adamy D. L., EW 103: Tactical Battlefield Communications Electronic Warfare, 2009) (Adamy D. L., EW 104: EW against a new generation of threats, 2015) (Adamy D. L., Space Electronic Warfare, 2021) Our series also examines EW (Nichols & Mumm, Unmanned Aircraft Systems in the Cyber Domain, 2nd Edition., 2019) (Nichols & Ryan, 2000) (R.K. Nichols, 2020), and (R.K. Nichols & Lekkas, 2002) explores the wireless security field and its interrelationships with satellite telemetry, EW, and cybersecurity.

EW Generalities

Electronic warfare (EW) is defined as the art and science of preserving the use of the electromagnetic spectrum (EMS) for friendly use while denying its use by the enemy. (Adamy D., EW 101 A First Course in Electronic Warfare, 2001) The EMS extends from DC to light and beyond.

Legacy EW definitions

EW was classically divided into three areas (Adamy D., EW 101 A First Course in Electronic Warfare, 2001):

- ESM – Electronic Support Measures – the receiving part of EW;

- ECM – Electronic Countermeasures – jamming, chaff, and flares used to interfere with the operation of radars, military communications, and heat-seeking weapons;

- ECCM – Electronic Counter-Countermeasures – measures taken in the design or operation of radars or communications systems to counter the effects of ECM.[11]

Anti-radiation weapons (ARW) and directed energy weapons (DEW) were not included in these EW definitions.

The United States and NATO have since updated these categories:

- ES – Electronic Warfare Support (formerly ESM) – monitoring the RF environment;

- EA – Electronic Attack (formerly ECM, now including ARW and DE weapons) – denying, disrupting, deceiving, exploiting, and destroying adversary electronic systems;

- EP – Electronic Protection (formerly ECCM) – guarding friendly systems from hostile attack. (Adamy D., EW 101 A First Course in Electronic Warfare, 2001)[12]

ES is different from Signals Intelligence (SIGINT), which comprises Communications Intelligence (COMINT) and Electronic Intelligence (ELINT). All of these fields involve receiving enemy transmissions. (Adamy D., EW 101 A First Course in Electronic Warfare, 2001)

COMINT receives enemy communications signals to extract intelligence.

ELINT uses enemy non-communications signals to determine the enemy’s EMS signature so that countermeasures can be developed. ELINT systems collect substantial data over long periods to support detailed analysis.

ES/ESM collects enemy signals, either communication or non-communication, with the object of doing something immediately about those signals or the weapons associated with them. The received signals might be jammed, or their information passed to a lethal responder. Received signals can also be used to identify the type and location of the enemy’s transmitter and to locate enemy forces, weapons, their distribution, and electronic capabilities. (Adamy D., EW 101 A First Course in Electronic Warfare, 2001) [13]
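One common way ES turns intercepted signals into a location is triangulation: two or more direction-finding (DF) sites at known positions each measure a bearing to the emitter, and the lines of bearing intersect at the estimated transmitter location. The sketch below intersects two lines of bearing on a flat local grid in kilometers. The flat-earth geometry, noise-free bearings, and site coordinates are simplifying assumptions; fielded systems typically combine more sites with least-squares fixes and error ellipses.

```python
import math

def bearing_vector(bearing_deg):
    """Unit vector for a bearing measured clockwise from north (+y axis)."""
    rad = math.radians(bearing_deg)
    return (math.sin(rad), math.cos(rad))

def cross(a, b):
    """2-D cross product (z component)."""
    return a[0] * b[1] - a[1] * b[0]

def triangulate(site1, brg1, site2, brg2):
    """Intersect two lines of bearing; return (x, y), or None if parallel.

    Solves site1 + t1*d1 = site2 + t2*d2, giving t1 = cross(w, d2) / cross(d1, d2)
    where w = site2 - site1.
    """
    d1, d2 = bearing_vector(brg1), bearing_vector(brg2)
    denom = cross(d1, d2)
    if abs(denom) < 1e-9:
        return None  # (nearly) parallel bearings: no usable fix
    w = (site2[0] - site1[0], site2[1] - site1[1])
    t1 = cross(w, d2) / denom
    return (site1[0] + t1 * d1[0], site1[1] + t1 * d1[1])

# Hypothetical DF sites 10 km apart; emitter seen at 45 and 315 degrees.
fix = triangulate((0.0, 0.0), 45.0, (10.0, 0.0), 315.0)
print(f"Estimated emitter position: ({fix[0]:.2f}, {fix[1]:.2f}) km")  # (5.00, 5.00) km
```

Accuracy degrades quickly as the two bearings approach parallel, which is why DF sites are spread out to give wide crossing angles.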

The information channels of EW include radar and data link systems, satellite links, navigation systems, and electro-optical (EO) systems (e.g., laser radar and EO missile seekers). (Nichols & Mumm, Unmanned Aircraft Systems in the Cyber Domain, 2nd Edition., 2019)

EA methods include jamming techniques, which degrade the detection and discrimination performance of signal-processing systems, and complementary deception techniques. (Bennett & Waltz, 2007)

Electromagnetic deception (EMD) is defined as the deliberate radiation, re-radiation, alteration, suppression, absorption, denial, enhancement, or reflection of electromagnetic energy in a manner intended to convey misleading information to an enemy or to enemy electromagnetic-dependent weapons, thereby degrading or neutralizing the enemy’s combat capability. (Army, Joint Doctrine for Electronic Warfare – Joint Pub 3-51, April 7, 2000) These deceptive actions include exploiting processing vulnerabilities, overloading the detection buffer with too many signatures, and spoofing. Table 7.7 presents a taxonomy of EMD techniques within the format of the deception matrix. (Adamy D., EW 101: A First Course in Electronic Warfare, 2001) (Adamy D. L., EW 104: EW against a new generation of threats, 2015)

Spoofing – GPS Spoofing

Spoofing is a cyberweapon attack that generates false signals to replace valid ones. GPS spoofing provides false information to GPS receivers, either by broadcasting counterfeit signals similar to the original GPS signal or by retransmitting an original GPS signal that was recorded somewhere else at some other time. The spoofing attack causes GPS receivers to report the wrong position and time. (T.E. Humphreys, 2008) (Tippenhauer & et.al, 2011)

Spoofing Techniques

According to Haider and Khalid (2016), there are three common GPS spoofing techniques of increasing sophistication: simplistic, intermediate, and sophisticated. (Humphreys & al., 2008)

The simplistic spoofing attack is the most commonly used technique to spoof GPS receivers. It requires only a COTS GPS signal simulator, an amplifier, and an antenna to broadcast signals toward the GPS receiver. Los Alamos National Laboratory performed it successfully in 2002. (Warner & Johnson, 2002) Simplistic spoofing attacks can be expensive because the GPS simulator can cost $400K, and it is heavy (not mobile). Because the simulator signals are not synchronized with the live GPS signals, detection is easy.

In the intermediate spoofing attack, the spoofing component consists of a GPS receiver that receives the genuine GPS signal and a spoofing device that transmits a fake GPS signal. The idea is to estimate the target receiver antenna’s position and velocity and then broadcast a fake signal aligned relative to the genuine GPS signal. This type of spoofing attack is difficult to detect and can be partially prevented by using an inertial measurement unit (IMU). (Humphreys & al., 2008)
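An IMU helps because an intermediate spoofer must gradually pull the receiver’s solution away from the truth, while an inertial unit integrates the vehicle’s own accelerations and cannot be spoofed over RF. A simple consistency monitor compares each GPS fix with the IMU dead-reckoned position and raises an alarm when they diverge. The sketch below assumes a flat 2-D frame in meters, ideal accelerometer data, and a hypothetical 25 m threshold. Real integrations use a Kalman filter and must budget for IMU drift, which is one reason the protection is only partial: a slow enough pull-off can stay under the threshold.

```python
import math

def monitor_gps_vs_imu(gps_fixes, imu_accels, dt, start_pos, start_vel,
                       threshold_m=25.0):
    """Dead-reckon with IMU accelerations and flag GPS fixes that disagree.

    gps_fixes: list of (x, y) GPS positions in meters, one per time step.
    imu_accels: list of (ax, ay) accelerations in m/s^2, one per time step.
    threshold_m: hypothetical alarm threshold on GPS/IMU disagreement.
    Returns the indices of the steps whose GPS fix exceeds the threshold.
    """
    x, y = start_pos
    vx, vy = start_vel
    alarms = []
    for k, ((gx, gy), (ax, ay)) in enumerate(zip(gps_fixes, imu_accels)):
        # Simple Euler integration of the inertial solution.
        vx += ax * dt
        vy += ay * dt
        x += vx * dt
        y += vy * dt
        if math.hypot(gx - x, gy - y) > threshold_m:
            alarms.append(k)
    return alarms

# Vehicle moving east at 10 m/s; from step 5 a spoofer adds 10 m of offset per step.
truth = [(10.0 * (k + 1), 0.0) for k in range(10)]
spoofed = [(x + max(0, k - 4) * 10.0, y) for k, (x, y) in enumerate(truth)]
accels = [(0.0, 0.0)] * 10
print(monitor_gps_vs_imu(spoofed, accels, 1.0, (0.0, 0.0), (10.0, 0.0)))  # [7, 8, 9]
```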

In sophisticated spoofing attacks, multiple receiver-spoofer devices target the GPS receiver from different angles and directions. The angle-of-arrival defense, in which the angle of reception is monitored to detect spoofing, fails in this scenario. The only known successful defense against such an attack is cryptographic authentication. (Humphreys & al., 2008) [14]
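Cryptographic authentication works because a spoofer can copy the form of a signal but cannot forge data bound to a secret key. The sketch below illustrates only that principle: a navigation message carries an HMAC tag, and a receiver holding the key verifies the tag before trusting the message. This is a simplified, hypothetical scheme. Legacy civilian GPS L1 C/A messages carry no such tag, and fielded designs such as Galileo’s OSNMA use delayed-key-disclosure (TESLA) protocols so that receivers need no shared secret. Authenticating data alone also does not stop replay of a recorded authentic signal; that requires freshness and timing checks as well.

```python
import hashlib
import hmac

# Hypothetical shared key, for illustration only.
KEY = b"demo-navigation-key"
TAG_LEN = 32  # bytes in an HMAC-SHA256 tag

def sign_nav_message(msg: bytes, key: bytes = KEY) -> bytes:
    """Append an HMAC-SHA256 tag to a navigation message."""
    return msg + hmac.new(key, msg, hashlib.sha256).digest()

def verify_nav_message(frame: bytes, key: bytes = KEY):
    """Return the message if its tag verifies, else None (reject the frame)."""
    msg, tag = frame[:-TAG_LEN], frame[-TAG_LEN:]
    expected = hmac.new(key, msg, hashlib.sha256).digest()
    return msg if hmac.compare_digest(tag, expected) else None

genuine = sign_nav_message(b"SV12 ephemeris t=345600")
forged = b"SV12 ephemeris t=345601" + genuine[-TAG_LEN:]  # altered data, copied tag
print(verify_nav_message(genuine))  # b'SV12 ephemeris t=345600'
print(verify_nav_message(forged))   # None
```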

Note that prior research on spoofing focused on excluding the fake signals and on a single satellite. ECD (next section) instead includes the fake signals from a minimum of four satellites and then progressively and selectively eliminates their effect until the weaker, genuine GPS signals become apparent. (Eichelberger, 2019)
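The minimum of four satellites follows from how a conventional GPS fix is computed: the receiver solves for four unknowns, its three position coordinates plus its clock bias, from pseudoranges of the form rho_i = ||s_i - r|| + b. The sketch below is the standard iterative least-squares (Gauss-Newton) pseudorange solution, shown with four satellites. The satellite coordinates, receiver position, and bias are made-up values in kilometers, with the clock bias expressed as a range. This is not Eichelberger’s collective detection, which works on raw signal snapshots rather than tracked pseudoranges.

```python
import math

def solve_linear(A, b):
    """Solve the square system A x = b by Gaussian elimination with partial pivoting."""
    n = len(A)
    M = [list(row) + [b[i]] for i, row in enumerate(A)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            f = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= f * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(M[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (M[r][n] - s) / M[r][r]
    return x

def gps_fix(sats, pseudoranges, iterations=10):
    """Gauss-Newton estimate of (x, y, z, bias) from four or more pseudoranges.

    Model: rho_i = |sat_i - pos| + bias, with the clock bias in distance units.
    """
    est = [0.0, 0.0, 0.0, 0.0]  # start at Earth's center with zero bias
    for _ in range(iterations):
        H, resid = [], []
        for sat, rho in zip(sats, pseudoranges):
            diff = [est[k] - sat[k] for k in range(3)]
            rng = math.sqrt(sum(d * d for d in diff))
            H.append([d / rng for d in diff] + [1.0])  # Jacobian row
            resid.append(rho - (rng + est[3]))          # measured minus predicted
        # Least-squares step via the normal equations (H^T H) delta = H^T resid.
        HtH = [[sum(h[i] * h[j] for h in H) for j in range(4)] for i in range(4)]
        Htr = [sum(h[i] * r for h, r in zip(H, resid)) for i in range(4)]
        delta = solve_linear(HtH, Htr)
        est = [e + d for e, d in zip(est, delta)]
    return est

# Illustrative geometry (km): receiver on the surface, four satellites above it.
sats = [(26000.0, 0.0, 5000.0), (20000.0, 15000.0, 8000.0),
        (21000.0, -14000.0, 6000.0), (18000.0, 3000.0, -17000.0)]
true_pos, true_bias = (6371.0, 0.0, 0.0), 0.5  # 0.5 km is about 1.7 microseconds
rhos = [math.dist(s, true_pos) + true_bias for s in sats]
print([round(v, 3) for v in gps_fix(sats, rhos)])
```

With fewer than four pseudoranges the 4x4 system is singular, which is the algebraic reason a receiver needs at least four satellites in view.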

EICHELBERGER’S CD – COLLECTIVE DETECTION MAXIMUM LIKELIHOOD LOCALIZATION APPROACH (ECD)

Returning to the spoofing attack discussion, Dr. Manuel Eichelberger’s collective detection (CD) maximum likelihood localization approach can not only detect spoofing attacks but also mitigate them. The ECD approach is a robust algorithm for mitigating spoofing: it can distinguish correct and spoofed locations that lie closer together than previously known approaches can. (Eichelberger, 2019) COTS receivers have few integrated spoofing defenses. Military receivers use symmetrically encrypted GPS signals, which remain subject to a “replay” attack in which the signal is retransmitted with a small delay to confuse receivers.

ECD solves even the toughest type of GPS spoofing attack, in which the spoofed signals have power levels similar to those of the authentic signals. (Eichelberger, 2019) ECD achieves median errors under 19 m on the TEXBAT dataset, the de facto reference dataset for testing GPS anti-spoofing algorithms. (Ranganathan & al., 2016) (Wesson, 2014) The ECD approach uses only a few milliseconds’ worth of raw GPS signals, so-called snapshots, for each location fix. This enables offloading of the computation into the cloud, allowing knowledge of observed attacks.[1] Existing spoofing mitigation methods require a constant stream of GPS signals and tracking of those signals over time, and their computational load increases because the fake signals must be detected, removed, or bypassed. (Eichelberger, 2019)

Table 7.7 Standard Taxonomy of Representative Electromagnetic (EM) Deception Techniques

| Deception Matrix Quadrant | Electromagnetic Deception Categories | EW Deception Techniques |
|---|---|---|
| Conceal facts (dissimulation) | Type 1 manipulative EM deception: eliminate revealing EM telltale indicators that hostile forces may use | Radar cross section (RCS) suppression by low-observable methods: radar-absorbing materials or radar energy redirection to reduce effective RCS |
| | | Conceal signals within wideband spread-spectrum signals (direct sequence, frequency hopping) |
| | | Radar chaff and cover jamming to reduce signal quality and mask the target’s signature |
| Reveal fiction (simulation) | Imitative EM deception: introduce EM energy into enemy systems that imitates enemy emissions | Radar signature and IFF [15] spoofing: store, repeat, or imitate RCS, power signatures, or IFF codes to appear as enemy system signals |
| | Type 2 manipulative EM deception: convey misleading EM telltale indicators that hostiles may use | Deceptive jamming to induce error signals within receiver-processor logic or range estimation errors |
| | | Navigation beaconing: intercept and rebroadcast beacon signals on the same frequency to cause inaccurate bearings and navigation solutions |
| | Simulative EM deception: simulate friendly or actual capabilities to mislead hostile forces | Saturation and seduction decoys to misdirect, overload signal generators, or cause fire control to break lock on the intended targets |
| Conceal fiction | Withhold deception capabilities until the surprise is projected | Protect electronic deception emissions and modes; husband assets |
| Reveal facts | Surveillance conditioning display | Display signatures and selected capabilities to desensitize radar/overwatch surveillance |

Sources: (Adamy D. L., EW 104: EW against a new generation of threats, 2015) (Army, Joint Doctrine for Electronic Warfare – Joint Pub 3-51, April 7, 2000) (Bennett & Waltz, 2007)
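The RCS suppression entry in Table 7.7 can be quantified with the standard monostatic radar range equation, R_max = [P_t G^2 lambda^2 sigma / ((4 pi)^3 S_min)]^(1/4), in which maximum detection range grows only with the fourth root of the target’s radar cross section sigma. Holding the radar’s parameters fixed, the ratio of detection ranges therefore depends only on the RCS ratio. The sketch below computes that ratio for a few illustrative RCS reductions; the values are examples, not data for any particular platform.

```python
def detection_range_ratio(rcs_reduction_db: float) -> float:
    """Fraction of the original detection range left after reducing RCS.

    From R_max proportional to sigma**(1/4):
        R_new / R_old = (sigma_new / sigma_old) ** 0.25
    """
    sigma_ratio = 10 ** (-rcs_reduction_db / 10)  # dB reduction -> linear ratio
    return sigma_ratio ** 0.25

for db in (10, 20, 30):
    print(f"{db} dB RCS reduction -> {detection_range_ratio(db):.2f} x original range")
```

Even a 20 dB (100-fold) RCS reduction only cuts detection range to about a third (0.32), which helps explain why low-observable design is paired with the masking techniques listed in the same quadrant.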

Signals Intelligence (SIGINT)

Deception techniques are also employed by SIGINT agents and the SIGINT community. SIGINT employs deceptive methods to intercept, collect, and analyze external and communications intelligence (COMINT), along with cryptanalytic deception methods to gain keying information or to disrupt or bypass encrypted channels.[16] (Nichols R. K., Chapter 18: Cybersecurity Counter Unmanned Aircraft Systems (C-UAS) and Artificial Intelligence, 2022) (Nichols R. K., ICSA Guide to Cryptography, 1999) (Schneier, 1995) (Army, FM 3-38 Cyber Electromagnetic Activities, 2014) (Bennett & Waltz, 2007) To wit:

Ciphertext replay: Ciphertext is recorded, modified, and replayed within a valid key interval to disrupt the target system.

Key spoofing: The attacker impersonates the key distribution server, issues false keys to the target, and then decrypts traffic encrypted under the false key.

Man-in-the-Middle (MIM): The attacker secures a trusted position between two parties and issues spoofed keys to both, then intercepts all traffic and can change it at will.

The above methods may be used to intercept/disrupt hostile communication by inserting false/misleading transmissions to deceive or reduce the integrity of communication channels. (Bennett & Waltz, 2007)
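Ciphertext replay (and session hijacking by replayed credentials) succeeds when a receiver accepts any well-formed message it has seen before. A standard countermeasure, sketched below, authenticates each message together with a monotonically increasing sequence number and rejects anything that is not newer than the last accepted message. The key and frame format are hypothetical. Real protocols such as IPsec and TLS use sliding replay windows or implicit sequence numbers with authenticated encryption rather than this bare HMAC construction.

```python
import hashlib
import hmac
import struct

KEY = b"hypothetical-session-key"  # illustration only
TAG_LEN = 32                       # bytes in an HMAC-SHA256 tag

def protect(seq: int, payload: bytes, key: bytes = KEY) -> bytes:
    """Frame = 8-byte big-endian sequence number | payload | HMAC over both."""
    header = struct.pack(">Q", seq)
    tag = hmac.new(key, header + payload, hashlib.sha256).digest()
    return header + payload + tag

class ReplayGuard:
    def __init__(self, key: bytes = KEY):
        self.key = key
        self.last_seq = -1  # highest sequence number accepted so far

    def accept(self, frame: bytes):
        """Return the payload if the frame is authentic and fresh, else None."""
        header, payload, tag = frame[:8], frame[8:-TAG_LEN], frame[-TAG_LEN:]
        expected = hmac.new(self.key, header + payload, hashlib.sha256).digest()
        if not hmac.compare_digest(tag, expected):
            return None  # modified or forged frame
        (seq,) = struct.unpack(">Q", header)
        if seq <= self.last_seq:
            return None  # replayed or stale frame
        self.last_seq = seq
        return payload

guard = ReplayGuard()
f1 = protect(1, b"steer 090")
print(guard.accept(f1))  # b'steer 090'
print(guard.accept(f1))  # None (replay rejected)
```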

CONCLUSIONS

Deception planning requires careful application of multiple methods across channels to limit a target’s ability to compare multiple sources for conflicts, ambiguities, uncertainties, or feedback cues to simulated or hidden information. (Bennett & Waltz, 2007) UASs are reasonable agents to deliver deceitful payloads against CBNE targets, assets, and critical national infrastructure. (DHS, 2018)

Bibliography

Adamy, D. (2001). EW 101: A First Course in Electronic Warfare. Boston: Artech House.

Adamy, D. L. (2004). EW 102: A Second Course in Electronic Warfare. Boston: Artech House.

Adamy, D. L. (2009). EW 103: Tactical Battlefield Communications Electronic Warfare. Norwood, MA: Artech House.

Adamy, D. L. (2015). EW 104: EW against a new generation of threats. Norwood, MA: Artech House.

Adamy, D. L. (2021). Space Electronic Warfare. Norwood, MA: Artech House.

Army, U. (2014). FM 3-38 Cyber Electromagnetic Activities. Washington: DoD.

Army, U. (April 7, 2000). Joint Doctrine for Electronic Warfare – Joint Pub 3-51. Washington: DoD.

Askin, O., Irmak, R., & Avseyer, M. (2015, May 14). Cyberwarfare and electronic warfare integration in the operational environment of the future: cyber electronic warfare. In Cyber Sensing 2015, Proc. SPIE Vol. 9458, 94580H. SPIE Defense + Security. Washington.

Bade, H. S. (2009). Analysis of Crowd Behaviour Theories in Panic Situation. Intern. Conf. on Information and Multimedia Technology. Retrieved from https://www.researchgate.net/publication/224099630_Analysis_of_Crowd_Behaviour_Theories_in_Panic_Situation

Bennett, M., & Waltz, E. (2007). Counter Deception: Principles and Applications for National Security. Norwood, MA: Artech House.

CBS News. (2021, October 24; updated). The Station nightclub fire, Rhode Island: what happened and who's to blame. Retrieved from https://www.cbsnews.com/news/the-station-nightclub-fire-rhode-island-what-happened-and-whos-to-blame/

Crockett, Z. (2014, June 16). History's deadliest soccer disasters. Retrieved from https://priceonomics.com/historys-deadliest-soccer-disasters/

CJCSI. (2022). CJCSI 3210.01A -Ref f . Washington: USDOJ.

Daniel, D., & Herbig, K. (1982). Propositions on Military Deception. In J. Gooch & A. Perlmutter (Eds.), Military Deception and Strategic Surprise (pp. 155-156). Totowa, NJ: Frank Cass & Co.

Dewar, M. (1989). The Art of Deception in Warfare. Devon, UK: David & Charles Pub.

DHS. (2018, May 22). Cybersecurity Risks Posed by Unmanned Aircraft Systems. Retrieved from https://www.eisac.com/cartella/Asset/00007102/OCIA%20-%20Cybersecurity%20Risks%20Posed%20by%20Unmanned%20Aircraft%20Systems.pdf?parent=115994

DoD-02. (2018). Information Operations (IO) in the United States. Retrieved from JP 3-13: http://www.dtic.mil/doctrine/new_pubs/jp3_13.pdf

Dorn, T. (2021). Unmanned Systems: Savior or Threat. Conneaut Lake, PA: Page Publishing.

Eichelberger, M. (2019). Robust Global Localization using GPS and Aircraft Signals. Zurich, Switzerland: Free Space Publishing, DISS. ETH No 26089.

EnergyJustice.net. (2022, February 10). Nuclear Operating Plants in the US. Retrieved from http://www.energyjustice.net/map/nuclearoperating

Gerwehr, S., & Glenn, R. (2002). Unweaving the Web: Deception and Adaptation in Future Urban Operations. Santa Monica, CA: RAND.

Haider, Z., & Khalid, S. (2016). Survey of Effective GPS Spoofing Countermeasures. 6th Intern. Ann. Conf. on Innovative Computing Technology (INTECH 2016) (pp. 573-577). IEEE 978-1-5090-3/16.

Haswell, J. (1985). The Tangled Web: The Art of Tactical and Strategic Deception. Buckinghamshire, UK: John Goodchild Pub.

Humphreys, T., et al. (2008). Assessing the spoofing threat: Development of a portable GPS civilian spoofer. In Radionavigation Laboratory Conf. Proc.

Kallenborn, Z., & Bleek, P. (2018). Swarming destruction: drone swarms and chemical, biological, radiological, and nuclear weapons. The Nonproliferation Review, 25(5-6), 523-543.

Latimer, J. (2001). Deception in War: The Art of the Bluff, the Value of Deceit, and the most thrilling Episodes of Cunning in Military History, from the Trojan War to the Gulf War. Woodstock, NY: Overlook Press.

MathTech, Inc. (1980). Deception Maxims: Fact and Folklore. Princeton, NJ: Everest Consulting Associates and MathTech, Inc.

Mitchell, R. W. (1986). Epilogue. In Deception: Perspectives on Human and Nonhuman Deceit (p. 358). Albany, NY: State University of New York Press.

Nichols, R. K. (1999). ICSA Guide to Cryptography. New York City: McGraw Hill.

Nichols, R. K. (2018, March 27). Short-circuiting simple panic attacks: a quick guide out. Retrieved from https://www.linkedin.com/pulse/short-circuiting-simple-panic-attacks-quick-guide-out-nichols-dtm/

Nichols, R. K. (2020). Counter Unmanned Aircraft Systems Technologies & Operations. Manhattan, KS: www.newprairiepress.org/ebooks/31.

Nichols, R. K. (2022). Chapter 18: Cybersecurity Counter Unmanned Aircraft Systems (C-UAS) and Artificial Intelligence. In D. M. R. K. Barnhart, Introduction to Unmanned Aircraft Systems, 3rd Edition (pp. 399-440). Boca Raton, FL: CRC.

Nichols, R. K., & Mumm, H. C. (2019). Unmanned Aircraft Systems in the Cyber Domain, 2nd Edition. Manhattan, KS: www.newprairiepress.org/ebooks/27.

Nichols, R. K., & Sincavage, S. M. (2021). Disruptive Technologies with Applications in Airline, Marine, and Defense Industries. Manhattan, KS: New Prairie Press #38.

Nichols, R. K., et al. (2020). Unmanned Vehicle Systems and Operations on Air, Sea, and Land. Manhattan, KS: New Prairie Press #35.

Nichols, R., Ryan, D., & Ryan, J. (2000). Defending Your Digital Assets Against Hackers, Crackers, Spies and Thieves. San Francisco: McGraw Hill, RSA Press.

Poisel, R. (2002). Introduction to Communications Electronic Warfare Systems. Norwood, MA: Artech House.

Nichols, R. K., & Lekkas, P. (2002). Wireless Security: Threats, Models & Solutions. NYC: McGraw Hill.

Nichols, R. K., et al. (2022). DRONE DELIVERY OF CBNRECy – DEW WEAPONS: Emerging Threats of Mini-Weapons of Mass Destruction and Disruption (WMDD). Manhattan, KS: New Prairie Press #TBA.

Ranganathan, A., et al. (2016). SPREE: A Spoofing Resistant GPS Receiver. Proc. of the 22nd Ann. Intern. Conf. on Mobile Computing and Networking, ACM, pp. 348-360.

Rowe, N. C. (2004). Honeynet Project- Research on Deception in Defense Information Systems. Proc DoD Command and Control Research Program Conf. San Diego, CA: DoD.

Schneier, B. (1995). Applied Cryptography: Protocols, Algorithms and Source Code in C. New York: John Wiley & Sons.

Skoudis, E. (2004). Malware: Fighting Malicious Code. Upper Saddle River, NJ: Prentice-Hall.

Humphreys, T. E., et al. (2008). Assessing the Spoofing Threat: Development of a Portable GPS Civilian Spoofer. Proc. ION GNSS 2008 (Sept. 16-19). Savannah, GA: ION.

Tippenhauer, N., et al. (2011). On the requirements for successful GPS spoofing attacks. Proc. of the 18th ACM Conf. on Computer and Communications Security (CCS), 75-86.

USAF. (January 4, 2002). Air Force Doctrine Document AFDD 2-5, Information Operations. Washington: USAF.

Warner, J., & Johnson, R. (2002). A Simple Demonstration that the Global Positioning System (GPS) is Vulnerable to Spoofing. Journal of Security Administration. Retrieved from https://the-eye.eu/public/Books/Electronic%20Archive/GPS-Spoofing-2002-2003.pdf

Wayner, P. (2008). Disappearing Cryptography: Information Hiding: Steganography and Watermarking, 3rd ed. Baltimore: Morgan Kaufmann.

Wesson, K. (2014, May). Secure Navigation and Timing without Local Storage of Secret Keys. Ph.D. Thesis.

Endnotes

[1] This chapter does not discuss counter-deception strategies and methods; that would require a book by itself. Be aware, however, that for any deception technique or technology, there are counter-deception strategies employed by military and law enforcement (LEO) forces globally.

[2] This is the 2nd D in WMDD.

[3] In our introductory example, the penalty is death or trampling.

[4] There are fascinating reconstructions and images of the panicking crowds trying to get out, with only one long passageway to funnel the hundreds escaping.

[5] On May 9, 2001, Ghana’s two most prominent teams — Accra Hearts and Asante Kotoko — came together for a match at Accra Sports Stadium that would become the deadliest sporting disaster in African history.

Due to the heated nature of the rivalry, extra security had been ordered, and trouble had been anticipated. When the match ended in a 2-1 Accra Hearts victory, the match lived up to its expectations: angry Kotoko fans began ripping plastic chairs out of the ground and hurling them onto the pitch. As with the Estadio Nacional Disaster, police responded by launching tear gas and firing plastic bullets into the crowd — not just at those guilty of hooliganism, but at everyone present. A massive stampede of 40,000 fans rushed to exit the stadium, resulting in packed corridors; by the time the masses had cleared, 127 lay dead, most from compressive asphyxiation.

[6] The introductory stadium example exploits disinformation.

[7] These concepts are important to the use of UAS in deception operations.

[8] Think Facebook, Instagram, and every other social media outlet.

[9] One doesn’t have to look far in today’s society to see the bandwagon effect of pushing an agenda, with government, big tech, and most news outlets harping on anyone’s deception. Truth sheds light on all situations but is usually discovered too late.

[10] EW and IO are covered in detail in Chapter 14 of (Nichols & Mumm, Unmanned Aircraft Systems in the Cyber Domain, 2nd Edition., 2019). Cyber operations are covered in detail in (Nichols R. K., Chapter 18: Cybersecurity Counter Unmanned Aircraft Systems (C-UAS) and Artificial Intelligence, 2022), and maritime security involving cyber is discussed extensively in (Nichols & Sincavage, Disruptive Technologies with Applications in Airline, Marine, and Defense Industries, 2021).

[11] ECCM was considered Top Secret (TS), with most protocols and design algorithms classified.

[12] The EW, ES, EP, and EA definitions were adjusted via (Bennett & Waltz, 2007) to be consistent with our UAS weapons deployment theme.

[13] Adamy (2001) is correct when he suggests that the “key to understanding EW principles (particularly the RF part) is to understand radio propagation theory. Understanding propagation leads logically to understanding how they are intercepted, jammed or protected.” (Adamy D., 2001)

[14] (Nichols & al., 2020) have argued the case for cryptographic authentication on civilian UAS/UUV and expanded the INFOSEC requirements.

[15] IFF = Identify Friend or Foe challenge system

[16] Multiple sources propose this solution.