16 Assessing the Drone Delivery Future: WMD and DEW Threats/Risks

By Dr. Hans C. Mumm & Wayne D. Lonstein, Esq.

STUDENT LEARNING OBJECTIVES

The student will gain knowledge of the concepts and framework related to the future uses and misuses of autonomous systems in the Weapons of Mass Destruction (WMD), Directed Energy Weapons (DEW), and cyber weapons arenas. This will include multiple autonomous system attack vectors, legal ramifications, airspace and freedom-of-movement considerations, and assessment recommendations for a safe and secure future.

The student will review the legal considerations and consequences of using artificial intelligence in WMD/DEW applications, and will appreciate the potential legal consequences when autonomous systems are deployed thousands of miles away from their operators.

A LOOK BACK AT THE TRADITIONAL DELIVERY SYSTEMS

Chemical, biological, radiological, nuclear, and explosive (CBRNE) weapons can be delivered “via various mechanisms including but not limited to; ballistic missiles, air-dropped gravity bombs, rockets, artillery shells, aerosol canisters, land mines, and mortars” (Kimball, 2020). This list reflects pre-pandemic thinking about warfare; the pandemic has made clear that biological agents can spread through means very different from traditional weapons delivery. The speed of global commerce and travel provided a model of how quickly biological agents can spread and harm the entire planet.

Unmanned aircraft system (UAS) platforms are ideal for dispersing chemical agents. “Like cruise missiles, UAVs are ideal platforms for slower dissemination due to controllable speeds and dispersal over a wide area. UAVs can fly below radar detection and change directions, allowing them to be retargeted during flight” (Kimball, 2020).

The Islamic State (I.S.) has consistently sought novel methods of attack: “inventive and spectacular ways of killing people has long been a hallmark of Islamic State’s modus operandi. The use of mustard gas and chlorine against Kurdish Peshmerga fighters is well documented, as is research by I.S. to develop radiological dispersion devices” (Dunn, 2021).

STACK INTEGRATION: EMERGING TECHNOLOGIES OFFER NEW TACTICS, TECHNIQUES, AND PROCEDURES (TTPS)

The integration and cooperation of the seven recognized autonomous systems (UAS, UGV, UUV, USV, humanoid, cyber, and exoskeleton) would forever change the landscape of our world. The introduction of a soon-to-be eighth autonomous system, “nano-biologics,” will inject a complexity into this discussion that humankind may not be ready to confront. The worldwide “healthcare market will fuel human/machine enhancement technologies primarily to augment the loss of functionality from injury or disease, and defense applications will likely not drive the market…the gradual introduction of beneficial restorative cyborg technologies will, to an extent, acclimatize the population to their use” (Emanuel, 2019).

This change will be difficult for most cultures to embrace because “societal apprehension following the introduction of new technologies can lead to unanticipated political barriers and slow domestic adoption, irrespective of value or realistic risk” (Emanuel, 2019).

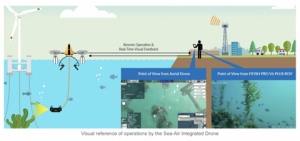

The autonomous systems space, which has evolved from remote-controlled toy airplanes to drones to military UAVs, is about to be upended yet again as the industry begins creating hybrid, multi-purpose systems. These “drones are now capable of moving from a wheeled system to an airborne system. Other drones are capable of moving from subsurface (underwater) to airborne modality” (Pledger, 2021). For example, “QYSEA, KDDI, and PRODRONE have teamed up to create the World’s first sea-to-air drone” (Allard, 2022).

Figure 16.1: Picture of a Sea-Air Integrated Drone

Source: (Allard, 2022)

Figure 16.2: Diagram showing communications between the sea-air drone and remote operator

Source: (Allard, 2022)

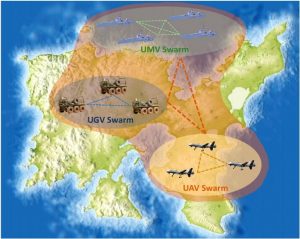

Integrating all autonomous systems will move the industry from single-purpose systems to a cooperative, goal-oriented artificial ecosystem. Swarms will evolve from a single system type swarming with its own kind to mixed swarms of different types of autonomous systems that swarm and goal seek together, with some members allowed to be destroyed for the sake of the group’s overall goal.

Figure 16.3: Illustration of UMVs, UGVs, and UAVs swarms working together

Source: (Stolfi, 2021)

As the world becomes more dangerous, new capabilities must be developed to counter the violence and evil that are becoming all too prevalent. At the National Defense University in Washington, D.C., Harry Wingo worked on a counter-sniper, counter-active-shooter system. His paper describes:

“a collaborative design (‘co-design’) approach to accelerating public and political acceptance of the cyberspace and information risks, inherent to the development and deployment of indoor smart building unmanned aircraft systems (UAS) to provide immediate ‘visual access’ to law enforcement and other armed first responders in the case of an active shooter incident inside of a building, including risks concerning (1) system safety and reliability; (2) supply chain security; (3) cybersecurity; and (4) privacy. The paper explores the potential to use a virtual world platform as part of a phased pilot at the U.S. Service Academies to build trust in the privacy, security, and efficacy of a counteractive shooter UAS” (Wingo, 2020).

Assessment of Emerging Threats of Mini-WMD

The threat of mini-WMD grows as circuits get smaller, cheaper, and faster. The ability of anyone to buy parts on the internet and learn to assemble such a system by watching a YouTube video is only accelerating. Terrorist groups have adapted commercial drones for their own use, “including intelligence collection, explosive delivery (either by dropping explosives like a bomb, the vehicle operating as the impactor, or the drone having an equipped rocket-launching system of some type) and chemical weapon delivery” (Pledger, 2021). The array of chemical and biological material that can easily be obtained, weaponized if required, and assembled into a weapon for dispersal is only keystrokes away, and sometimes as close as the nearest superstore.

Terrorists do not need to acquire exotic chemical weapons to be effective: “even gasoline spread like a vapor when ignited has 15 times the explosive energy of the equivalent weight of TNT. Moreover, even if the gasoline were ignited, its effect on a crowd would be devastating” (Dunn, 2021).

Dealing with these issues is becoming more difficult as terrorist groups continue to build their technical know-how and put this knowledge to use. As Pledger (2021) summarizes:

Once Pandora’s box was opened, bad actors adapted quickly and used drones to plan and conduct attacks. Between 1994 and 2018, more than 14 planned or attempted terrorist attacks took place using aerial drones. Some of these were:

- in 1994, Aum Shinrikyo attempted to use a remote-controlled helicopter to spray sarin gas, but tests failed as the helicopter crashed;

- in 2013, a planned attack by Al-Qaeda in Pakistan using multiple drones was stopped by local law enforcement;

- in 2014, the Islamic State began using commercial off-the-shelf and homemade aerial drones at scale during military operations in Iraq and Syria;

- in January 2018, a swarm of 13 homemade aerial drones attacked two Russian military bases in Syria; and,

- in August 2018, two GPS-guided drones, laden with explosives, were used in a failed attempt to assassinate Venezuelan President Maduro (Pledger, 2021).

The U.S. military incorporates and integrates mini-systems, including the FLIR Black Hornet PRS illustrated in Figure 16.4. This mini-UAV gives a soldier situational awareness (S.A.) without being detected. The Black Hornet PRS has electro-optical (E.O.) and infrared (I.R.) capabilities like larger UAVs and can provide the same reconnaissance as UGVs. The FLIR Black Hornet PRS provides “Game-changing E.O. and I.R. technology [that] bridges the gap between aerial and ground-based sensors, providing the same amount of S.A. as a larger UAV and threat location capabilities of UGVs” (FLIR, 2022).

Figure 16.4: Image of a soldier and a Black Hornet UAV

Source: (FLIR, 2022)

The PRS is reported to be nearly silent, with a flight time of up to 25 minutes. The missions of these mini drones are expanding as new sensor systems come online and the military discovers new ways to employ the technology.

Does the World Have an Answer to These Emerging Threats?

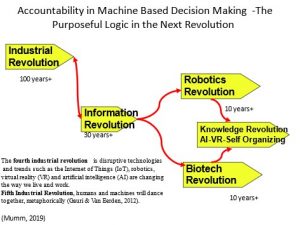

In the fourth industrial revolution, which we are currently experiencing, “disruptive technologies and trends such as the Internet of Things (IoT), robotics, virtual reality (V.R.) and artificial intelligence (A.I.) are changing the way we live and work. In the Fifth Industrial Revolution, humans and machines will dance together, metaphorically” (Gauri, 2019).

Figure 16.5: Timeline of Industrial Revolutions

Source: (Gauri, 2019)

The fourth industrial revolution allows innovation to flourish and humans to do more with less. Yet the emerging threats this revolution is creating are seemingly ignored as the world runs headlong toward the fifth industrial revolution. The cyber threat in the A.I. and IoT industries is cause for pause, and that caution does not even begin to encompass quantum computing, toward which the world is racing with little security, ethics, or understanding of how it will transform humanity. Quantum computing will change how our “national security institutions conduct their way of business in all its forms and functions. Data protection, risk modeling, portfolio management, robotic process automation, digital labor, natural language processing, machine learning, auditing in the cloud, blockchain, quantum computing, and deep learning may look very different in a post-quantum world” (Mumm, 2022). Consider that in 2022, the human brain is being connected to machines, machines that can control all layers of autonomous systems, including swarms of different autonomous systems that can goal seek, adjust on the fly, and evolve within the architecture the more they are used. This is exciting; however, it may not make for a safer world.

The development of direct neural enhancements of the human brain for two-way data transfer would create a revolutionary advancement in future military capabilities. This technology is predicted to facilitate read/write capability between humans and machines and between humans through brain-to-brain interactions. These interactions would allow warfighters direct communication with unmanned and autonomous systems and other humans to optimize command and control systems and operations. (Emanuel, 2019)

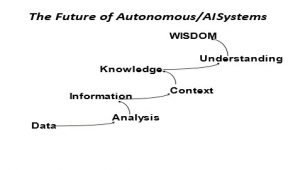

What the technology world hopes to bring to humanity is wisdom, not simply the ability to send data. It is this wisdom that will allow the fourth and fifth industrial revolutions to enhance humankind and, with guidance and care, allow a more peaceful world to emerge.

Figure 16.6: Future capabilities of autonomous/A.I. systems

Source: (Mumm, 2022)

History has taught humanity that the world must have accountability throughout these industrial revolutions. This becomes an even greater burden in the fifth industrial revolution because “the threat of unchecked technology and the ability to weaponize quantum computing continue to evolve. Technology flourishes as free markets expand. Quantum computers will be the next productivity accelerator expanding the market’s breadth and depth” (Mumm, 2022).

Figure 16.7: Image of the Industrial Revolution and the next revolution

Source: (Mumm, 2022)

The world does have an answer to future emerging threats; however, history has repeatedly shown that humanity’s will to solve future threats is low, if not non-existent. The threats posed by autonomous systems combined with CBRNE weapons and escalating cybersecurity issues have all been briefed to world leaders. Industry and government should support the continued work to “establish a whole-of-nation approach to human/machine enhancement technologies versus a whole-of-government approach. Federal and commercial investments in these areas are uncoordinated. They are being outpaced by Chinese research and development efforts, which could result in a loss of U.S. dominance in human/machine enhancement technologies” (Emanuel, 2019).

These discussions have occurred over decades in academic, military, and political arenas. Yet the technology continues to move forward, mostly unchecked, with few laws, policies, governance structures, or even agreements on how these threats should be curtailed. Consider the cultural struggles around the world today and how technology can bring us all together, as the “fifth revolution will create a new socio-economic era that closes historic gaps in last-mile inclusion and engages the ‘bottom billion’ in creating quantum leaps for humanity and a better planet” (Gauri, 2019). Humanity struggles even to define the ethical parameters of technology and its implementation, let alone to anticipate what happens when multiple technologies, such as autonomous systems, begin to communicate and goal seek together, or what secondary and tertiary effects this will cause. Sadly, all too often, a community installs a stop sign at a dangerous intersection only after someone is killed there. Humanity treats technology much the same way: the answer to controlling the emerging threats of CBRNE, cyber, and integrated autonomous systems is within reach, but it tends to arrive only after harm has been done. The question is whether humanity will break from historical tradition and create the framework and solutions before these threats are allowed to flourish unchecked to the detriment of humankind.

Legal Considerations for Autonomous Systems as WMD/DEW Delivery Platforms

Scientists believe that the earliest blending of technology and warfare occurred in 400,000 BC; researchers found evidence of humans using spears in what is now Germany. In 5,300 BC, horses were domesticated and used for transport and mobility in warfare. From the era of China’s Ming Dynasty onward, military technology advanced quickly, with the development of the matchlock musket in the 1500s, underwater mines in the 1770s, submarines in the 1870s, and nuclear bombs in the early 1940s. In many ways, the arc of military history is one of rapid technological advancement (Marshall, 2009). While the transition from the spear to the nuclear bomb took hundreds of thousands of years, one advancement, the computer, has exponentially increased the pace of change in both military warfare and humanitarian law.

George Washington once wrote: “My first wish is to see this plague of mankind, war, banished from the earth” (Hoynes, 1916). Sadly, his sentiment and those of untold others have proven more aspirational than realistic. The harsh reality is that conflict between humans is nearly as old as humanity itself. Only through evolution and enlightenment did some individuals begin to ask: if war is inevitable, shouldn’t there be some way of limiting its horrors to combatants and, theoretically, protecting the innocent?

According to Leslie Green, “From earliest times, some restraints were necessary during armed conflict. Thus, we find numerous references in the Old Testament wherein God imposes limitations on the warlike activities of the Israelites” (Green, 1998). The Chinese, Roman, and Greek empires also sought to address the subject. Though repeated attempts were made to codify universal rules of warfare, it was not until the 1860s that Henry Dunant, founder of the Red Cross, began to codify a generally accepted international set of rules regarding warfare and the treatment of civilians and combatants (Lu, 2018).

Figure 16.8: WWII Red Cross Prisoner of War Gift Package

Source: (National WWII Museum, 2020)

According to the International Committee of the Red Cross, six crucial principles must be adhered to by all combatants:

- No targeting civilians;

- No torture or inhumane treatment of detainees;

- No attacking hospitals and aid workers;

- Provide safe passage for civilians to flee;

- Provide access to humanitarian organizations; and

- No unnecessary or excessive loss and suffering. (anonymous, 2022)

Although the technology discussed in this book is groundbreaking, it may ultimately remove humans from the process of deciding whether deadly force is appropriate in a particular situation, against whom, and when. Humans currently must choose to deploy autonomous technology in battle; however, A.I. and machine learning may soon make that decision without human intervention. Without a set of rules and standards governing how nations make such a critical decision, the likelihood of a mass casualty event involving the civilian population will increase.

Assessment of the State of Readiness for the Legal Community to Prosecute Cases Involving Autonomous Systems in the WMD/DEW Space

With rapid advances in military technology from the late 1800s through the 2000s, the need for uniform international humanitarian rules of warfare arose. Gas, chemical, and nuclear weapons carried an inherent likelihood of significant civilian casualties. Advances in delivering these weapons rapidly, globally, and without warning leave less time to prepare civilian populations for an attack. Without new rules of warfare and adjudication processes for violators, the risk to mankind becomes untenable. The first attempts to address this new reality started with the First Hague Conference of 1898-1899, the Second Hague Convention of 1907, the Geneva Conventions, the League of Nations, and eventually the United Nations (Klare, 2019).

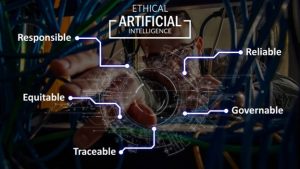

Enter the most recent and perhaps most significant technological advance in decades: Artificial Intelligence (“A.I.”). A.I. and autonomous warfare may be a dream for many in the military, yet others foresee a nightmare. Without a foundational understanding of its development and use on the battlefield, many fear a runaway technology that could cause catastrophic damage. In 2018, the United States Department of Defense (“DoD”) Defense Innovation Board (“DIB”) developed foundational principles for the safe and ethical implementation of A.I. (United States Department of Defense, Defense Innovation Board, 2019). The DIB issued its recommendations to the DoD in 2019 (Lopez, 2020). In February 2020, the DoD adopted the following five A.I. principles:

- Responsible: DoD personnel will exercise appropriate levels of judgment and care while remaining responsible for the development, deployment, and use of A.I. capabilities.

- Equitable: The department will take deliberate steps to minimize unintended bias in A.I. capabilities.

- Traceable: The department’s A.I. capabilities will be developed and deployed such that relevant personnel possess an appropriate understanding of the technology, development processes, and operational methods applicable to A.I. capabilities, including transparent and auditable methodologies, data sources, and design procedures and documentation.

- Reliable: The department’s A.I. capabilities will have explicit, well-defined uses, and the safety, security, and effectiveness of such capabilities will be subject to testing and assurance within those defined uses across their entire life cycles.

- Governable: The department will design and engineer A.I. capabilities to fulfill their intended functions while possessing the ability to detect and avoid unintended consequences and disengage or deactivate deployed systems that demonstrate unintended behavior. (Lopez, 2020)

Figure 16.9: United States Department of Defense AI Interests

Source: (Lopez, 2020)

Whether these rules of warfare have had the intended effect is still a subject of much debate. Given the parallel access to new technology such as A.I. that many non-governmental organizations (NGOs), terror groups, and individuals have gained through the internet, the efficacy of humanitarian rules of warfare may be muted. The legal considerations of implementing A.I. on the battlefield deserve a complete and detailed analysis. Within the limited scope of this textbook, students should understand that there may be real-life legal consequences relating to the use of A.I. automation or augmentation. Given the devastating capability of WMD/DEW technology to inflict mass casualties, the possibility of legal consequences must be considered in every aspect of its development and use.

Legal and Cyber Considerations While Building the Legal Framework Towards Peaceful Containment/Use of Autonomous Systems in the Future

To successfully promulgate rules of warfare for A.I., it is essential to determine who is responsible for unintended harm or intentional misuse of AI-based military systems. Is it the soldier who pushes a button to engage an A.I. weapons system that wrongfully kills thousands of civilians, or the superior officer who ordered the soldier to engage the system? In civil law, the legal principle of proximate cause is key.

According to John Kingston of the University of Brighton, “Criminal liability usually requires an action and a mental intent (in legalese an actus reus and mens rea)” (MIT Technology Review, 2018). Kingston examines the groundbreaking work of Gabriel Hallevy, who explored whether and how A.I. systems that cause injury or death could be held criminally liable (Hallevy, 2015; Kingston, 2018).

Hallevy discussed three different legal theories by which A.I. systems might be held criminally responsible for harm or civilly liable for damages.

- “Perpetrator-via-another. Suppose an offense is committed by a mentally deficient person, a child, or an animal. In that case, the perpetrator is held to be an innocent agent because they lack the mental capacity to form a mens rea (this is true even for strict liability offenses). However, if the innocent agent was instructed by another person (for example, if the owner of a dog instructed his dog to attack somebody), then the instructor is held criminally liable.”

- “Natural-probable-consequence. In this model, part of the A.I. program intended for good purposes is activated inappropriately and performs a criminal action.”

- “Direct liability. This model attributes both actus reus and mens rea to an A.I. system. It is relatively simple to attribute an actus reus to an A.I. system. If a system takes an action that results in a criminal act or fails to take any action when there is a duty to act, then the actus reus of an offense has occurred.” (Hallevy, 2015)

Although criminal or civil liability for harm caused by A.I. may seem like a complex legal theory, adjudicating such harm, whether on the battlefield or in everyday civilian life, will require first determining what type of A.I. technology is at issue.

Figure 16.10: MIT Technology Review

Source: (Hallevy, 2015)

Given the pace of technological change and the seemingly endless uses for A.I., it is challenging to define the type of A.I. involved in a particular instance. For simplicity, this definition from 2020 seems to work:

“A.I. solutions are meant to work in conjunction with people to help them accomplish their tasks better, and A.I. solutions are meant to function entirely independently of human intervention. The sorts of solutions where A.I. is helping people do their jobs better is usually referred to as “augmented intelligence” solutions while those meant to operate independently as “autonomous” solutions.” (Walch, 2020)

Initially, legal doctrine focused on autonomous applications of A.I. technology, such as robots performing automated tasks or autonomous vehicles transporting people and goods around the globe on, under, and above the earth’s land and seas. From a legal perspective, the analysis of autonomous intelligence is far less complicated than the legal issues relating to augmented intelligence.

“Prior scholarship has therefore focused heavily on autonomous vehicles, giving rise to two central themes. The first is that by automating the driving task, liability for car accidents will move away from negligence on the driver’s part toward product liability for the manufacturer. Because there is no person to be negligent, there is no need to analyze negligence. Scholars instead move straight to analyzing product liability’s doctrinal infirmities in the face of A.I.” (Selbst, 2020)

The legal analysis of augmented intelligence is more challenging than that of autonomous intelligence because the A.I. guides a human, who then either acts on or disregards the information. As General Mike Murray put it: “Where I draw the line — and this is, I think well within our current policies – [is], if you’re talking about a lethal effect against another human, you have to have a human in that decision-making process” (Freedberg, 2021).

Beyond determining whether a technology failed to perform as designed or was incorrectly designed, augmented intelligence requires analyzing an additional component: the interaction between human and A.I., and what subsequent action, if any, the human took.

A final consideration in this brief overview is that legislation and legal precedents can have far-reaching consequences. What may seem like a logical path may prove insufficient for new applications of existing A.I. technology. Generally speaking, the law moves much more slowly than technology: while technology evolves rapidly, the law of technology moves at a snail’s pace by comparison. Larry Downes, writing in the Harvard Business Review, argued that a slow and steady approach is a far better legal strategy than what many call “knee-jerk” regulation. He wrote:

“That, in any case, is the theory on which U.S. policymakers across the political spectrum have nurtured technology-based innovation since the founding of the Republic. Taking the long view, it’s clearly been a winning strategy, especially compared to the more invasive, command-and-control approach taken by the European Union, which continues to lag on every measure of the Internet economy. (Europe’s strategy now seems to be little more than to hobble U.S. tech companies and hope for the best.)” (Downes, 2018)

CONCLUSIONS

As all autonomous systems begin to integrate and communicate, they will goal seek and assist each other as part of an evolving network of devices. These devices can be modular and can enter and exit this artificial ecosystem to obtain goals, assist other autonomous systems, or assist humans as required. The proliferation of smaller autonomous systems is of particular concern because they are inexpensive and can be operated out of the box with very little training. “As the first iteration of the robotics revolution, they have proliferated on a massive scale with estimates of over five million drones having been sold worldwide” (Dunn, 2021).

As technology continues to push human intervention aside, the legal parameters and consequences become more complex. National and international laws will need to be created and upheld to standards broad enough to cover future technologies while still holding accountable those responsible for atrocities committed by autonomous systems.

Questions

- Do you think humanity will come to see WMD/DEW as a positive or negative influence on peace?

- List three disruptive technologies in the WMD/DEW arena.

- How would you take advantage of airspace and freedom of movement with autonomous systems versus manned systems?

- Name three ways legal ramifications can deter the use/misuse of autonomous systems in the WMD/DEW arena.

- Describe two catastrophic scenarios involving autonomous systems that existing legal frameworks do not address.

Bibliography

Allard, M. (2022, January 21). QYSEA, KDDI & PRODRONE team up to create the world’s first sea-to-air drone. Retrieved from www.newsshooter.com: https://www.newsshooter.com/2022/01/21/qysea-kddi-prodrone-team-up-to-create-the-worlds-first-sea-to-air-drone/

anonymous. (2022). rules-war-why-they-matter. Retrieved from www.icrc.org/en/document/: https://www.icrc.org/en/document/rules-war-why-they-matter

Defense, U. S. (2020, March 11). DOD adopts five principles of artificial intelligence ethics. Retrieved from Army.mil: https://www.army.mil/article/233690/dod_adopts_5_principles_of_artificial_intelligence_ethics

Downes, L. (2018, February 9). How More Regulation for U.S. Tech Could Backfire. Retrieved from Harvard Business Review: https://hbr.org/2018/02/how-more-regulation-for-u-s-tech-could-backfire

Dunn, D. H. (2021). Small drones and the use of chemical weapons as a terrorist threat. Retrieved from www.Birmingham.ac.UK/research/perspective/: https://www.birmingham.ac.uk/research/perspective/small-drones-chemical-weapons-terrorist-threat.aspx

Emanuel, P. W. (2019). Cyborg Soldier 2050: Human/Machine Fusion and the Implications for the Future of the DOD. Retrieved from community.apan.org/: https://community.apan.org/wg/tradoc-g2/mad-scientist/m/articles-of-interest/3004

FLIR. (2022). Black Hornet PRS for Dismounted Soldiers. Retrieved from www.flir.com/products/: https://www.flir.com/products/black-hornet-prs/

Freedberg, S. J. (2021, April 23). Artificial Intelligence, Lawyers, And Laws Of War. Retrieved from Breaking Defense: https://breakingdefense.com/2021/04/artificial-intelligence-lawyers-and-laws-of-war-the-balance/

Gauri, P. &. (2019, May 16). What the fifth industrial revolution is and why it matters. Retrieved from europeansting.com: https://europeansting.com/2019/05/16/what-the-fifth-industrial-revolution-is-and-why-it-matters/

Green, L. C. (1998). The Law of War in Historical Perspective. Providence, RI: U.S. Naval War College.

Hallevy, G. (2015). Liability for Crimes Involving Artificial Intelligence Systems. Switzerland: Springer.

Hoynes, C. W. (1916). Preparedness for War and National Defense. Washington, DC: Government Printing Office.

International Committee of the Red Cross. (2022, March 19). The Geneva Conventions of 1949 and their Additional Protocols. Retrieved from The International Committee of the Red Cross: https://www.icrc.org/en/doc/war-and-law/treaties-customary-law/geneva-conventions/overview-geneva-conventions.htm

Kimball, D. &. (2020, March 1). Chemical Weapons: Frequently Asked Questions. Retrieved from Arms Control Association: https://www.armscontrol.org/factsheets/Chemical-Weapons-Frequently-Asked-Questions

Kingston, J. K. (2018). Artificial Intelligence and Legal Liability. Ithaca, NY: Cornell University ARXIV.

Klare, M. T. (2019). Autonomous Weapons Systems and the Laws of War. Washington, D.C.: Arms Control Association.

Lopez, T. (2020). DOD adopts 5 principles of artificial intelligence ethics. Retrieved from www.army.mil: https://www.army.mil/article/233690/dod_adopts_5_principles_of_artificial_intelligence_ethics

Lu, J. (2018, June 28). The ‘Rules Of War’ Are Being Broken. What Exactly Are They? Retrieved from NPR.Org: https://www.npr.org/sections/goatsandsoda/2018/06/28/621112394/the-rules-of-war-are-being-broken-what-exactly-are-they

Marshall, M. (2009, July 7). Timeline: Weapons technology. Retrieved from New Scientist: https://www.newscientist.com/article/dn17423-timeline-weapons-technology/

Middleton, C. (2018). SAP launches ethical A.I. guidelines, an expert advisory panel. Retrieved from internetofbusiness.com: https://internetofbusiness.com/sap-publishes-ethical-guidelines-for-a-i-forms-expert-advisory-panel/

MIT Technology Review. (2018, March 12). When an A.I. finally kills someone, who will be responsible? Retrieved from MIT Technology Review: https://www.technologyreview.com/2018/03/12/144746/when-an-ai-finally-kills-someone-who-will-be-responsible/

Mumm, D. H. (2022). Securing Quantum Computers Through the Concept of In-Memory Computing (IMC). Retrieved from www.victorysys.com

National WWII Museum. (2020, June 5). Curator’s Choice: Gifts from the “Geneva Man.” Retrieved from National WWII Museum: https://www.nationalww2museum.org/war/articles/curator-kim-guise-geneva-collections

Pledger, T. (2021). The Role of Drones in Future Terrorist Attacks. Retrieved from www.ausa.org/: https://www.ausa.org/publications/role-drones-future-terrorist-attacks

Selbst, A. D. (2020). Negligence and AI’s Human Users. Boston University Law Review, 1323.

Stolfi, B. D. (2021). Swarm-based counter-UAV defense system. Retrieved from www.semanticscholar.org: https://www.semanticscholar.org/paper/Swarm-based-counter-UAV-defense-system-Brust-Danoy/24179f7cf9854cb41ca2595c811d26563a49014e

United States Department of Defense. (2020, February 24). Department Of Defense Press Briefing on the Adoption of Ethical Principles for Artificial Intelligence. Retrieved from Defense.gov: https://www.defense.gov/Newsroom/Transcripts/Transcript/Article/2094162/department-of-defense-press-briefing-on-the-adoption-of-ethical-principles-for/

United States Department of Defense, Defense Innovation Board. (2019). A.I. Principles: Washington, DC: United States Department of Defense.

Walch, K. (2020, January 12). Is There A Difference Between Assisted Intelligence Vs. Augmented Intelligence? Retrieved from Forbes: https://www.forbes.com/sites/cognitiveworld/2020/01/12/is-there-a-difference-between-assisted-intelligence-vs-augmented-intelligence/

Wingo, H. (2020). Smart City IoT: Co-Designing Trustworthy Public Safety Drones for Indoor Flight Missions (pp. 406-409, XX). Reading: Academic Conferences International Limit.