2 Technology, Ethics, Law and Humanity [Lonstein]

STUDENT LEARNING OBJECTIVES

This chapter asks the student to dive deeply into a growing concern: have we become intoxicated, infatuated, and incapacitated by the abundance of technology in our lives? While most students have never used a rotary phone, an atlas, or a Citizens Band radio, listened to AM or FM radio, or navigated without technology, they have grown up in a world of incredible innovation. Every aspect of our public and private lives is now touched by, if not run by, technology. So everyone must ask: how much technology is too much, and just because the technology exists to replace manual, human-run processes (Artificial Intelligence and Machine Learning Automation), does it mean it should?

INTRODUCTION

In 2018, this author wrote an article in Forbes entitled “Governments and Businesses Are Becoming Inebriated by Technology” as a warning to society that the rapid technological developments of the last ten years are causing a dangerous over-reliance upon technology. I coined a term to encapsulate the challenge confronting an increasingly technology-dependent society: “Intechication.” (Lonstein, 2018)

We are overwhelmed by a myriad of new technologies such as Quantum Computing, Artificial Intelligence (“AI”), and Machine Learning (“ML”), to name a few. The blinding speed of advancement over the last five years should cause all of us not only to dream of the possible benefits but also to consider the inherent risks of each technology, as well as the consequences of combining them. For example, combining Social Media with the speed and scale of Quantum Computing and AI poses a formidable risk of widespread information warfare campaigns, which would be difficult, if not impossible, to contain. Where do we start? Is it too late? As Ian Malcolm (portrayed by Jeff Goldblum), a lead character in Michael Crichton’s novel turned blockbuster movie, Jurassic Park, put it, “Your scientists were so preoccupied with whether they could, they didn’t stop to think if they should.” (Speilberg, 1993)

In a world emerging from a pandemic that, according to the World Health Organization, has caused seven million deaths globally as of April 2023 (World Health Organization, 2023), the first glimpses of a new type of pandemic are already present: instant global disinformation and misinformation campaigns on social media and elsewhere online. During the pandemic, numerous state-sponsored and private bot farms flooded social media and the broader internet with allegedly unsubstantiated claims, data, and theories, which caused widespread fear, doubt, protests, and even violence. (Himelein-Wachowiak, 2021)

Figure 2-1 Protests in Melbourne Australia

Sources: (Courtesy NY Times) (Zhuang, 2021) (William West, Agence France-Presse/Getty Images, 2021)

The ability to automate and replicate content in social and online media may be an insurmountable problem, but what if AI-fueled bots were claiming that content was disinformation or misinformation when it was in fact legitimate dissent, opinion, or even factual?

NEW TECHNOLOGY – SAME OLD HUMANS: GUNS, EXPLOSIVES, BIOLOGICS, CHEMICALS, CONSUMER PRODUCTS AND CYBER

The introduction of groundbreaking new technologies is not unique to the 20th and 21st centuries; in fact, some inventions were unintended by-products of research aimed at entirely different problems. Gunpowder, one of the most important technological developments in history, resulted from Chinese research into a life-extending elixir and emerged somewhere between the eighth and ninth centuries A.D. The search itself was far older: at the direction of the first Chinese Emperor, Qin Shi Huang, in the third century B.C., researchers travelled far and wide to discover or create a tool for immortality. Later researchers found that the compound potassium nitrate (also known as saltpeter) could be combined with honey to create a healing smoke or with sulphur to create a healing salve. (Xinhua Net, 2017) (Ling, 1947)

After that, researchers discovered other properties of potassium nitrate, leading to experiments that combined it with charcoal and sulphur and created the first forms of what we now call gunpowder. (Shepherd, 2022) Over the centuries, gunpowder or its elements have been used to heal, kill, conquer, and create, depending on how the possessor used it. Similarly, the gun, a by-product of the discovery of gunpowder, has been used to protect, purloin, conquer, defend, kill, hunt, feed, and save lives. With further development, firearms became lighter, more powerful, more affordable, and more available. While the use of technology in service to the public or nation is one thing, the challenges of gun ownership and use by individuals are quite another. The use of guns in warfare made clear the challenges that their use by individuals presented, and governments enacted laws governing their use and ownership from as early as 1689. (Satia, 2019) Placing military-grade, powerful technology in the hands of individuals increases the risk of its misuse. Unlike nations and organizations where, in most instances, [1] some degree of debate, consultation, and risk assessment takes place among leadership, its use by an individual does not necessarily have the same type of braking mechanisms.

Figure 2-2 Las Vegas Mass Shooting

Source: (Courtesy Florida Times Union/ AP) (Jacksonville.com, 2017)

Put another way, “There is no legislation that will strip evil from an immoral man,” as San Diego Gun Owners PAC board member Warren Manfredi said after the 2017 gun massacre at a concert in Las Vegas, Nevada.

The misuse of guns, explosives, and other technological innovations at scale can lead to gut-wrenching results. Here are a few tragic examples:

Guns

- Las Vegas shooting in Las Vegas, Nev. (Oct. 1, 2017) – 61 killed, over 800 injured.

- Pulse Orlando nightclub in Orlando, Fla. (June 12, 2016) – 49 killed, over 50 wounded.

- Virginia Tech in Blacksburg, Va. (April 16, 2007) – 32 killed, over 17 injured.

- Sandy Hook Elementary School in Newtown, Conn. (Dec. 14, 2012) – 26 killed (mostly children).

- Luby’s Cafeteria in Killeen, Texas (Oct. 16, 1991) – 23 killed. (Peralta, 2016)

Explosives

- Oklahoma City bombing, Alfred P. Murrah Federal Building, Oklahoma City, Okla., April 19, 1995, 168 killed.

- World Trade Center, New York, N.Y., February 26, 1993, 6 killed, over 1,000 injured.

- Boston Marathon bombing, Boston, Mass., April 15, 2013, 3 killed, over 100 injured.

- Unabomber, a series of bombings between 1978 and 1995, 3 killed, 28 injured.

- Haymarket Square, Chicago, Ill., May 4, 1886, 11 killed, over 100 injured. (National Academies of Sciences, Engineering, and Medicine, 2018)

Biologics and Chemicals

- Alphabet Bomber, Los Angeles, Calif., 1974: attempted attacks planned by a group including Muharem Kurbegovic, who was arrested with 20 pounds of cyanide gas.

- Followers of Bhagwan Shree Rajneesh placed homegrown salmonella bacteria on supermarket produce, salad bars, and other locations in Oregon in 1984. Seven hundred fifty-one people fell ill from the attack, which was intended to affect a local election.

- In 1994 and 1995, a test attack in Matsumoto (7 deaths and 500 injuries) and a follow-up attack on the Tokyo, Japan, subway system (12 dead and thousands injured) were carried out using nerve gas. According to reports, the Aum Shinrikyo cult responsible was also trying to develop or acquire botulism and Ebola virus for use in weapons. (Smart, 1997)

Consumer and Commercial Technology Weaponization

- The September 11, 2001, attacks, in which hijackers armed with box cutters seized commercial airliners and crashed them into the World Trade Center in New York City, N.Y., and the Pentagon in Arlington, Va.; a fourth plane crashed in Shanksville, Pa. Almost 3,000 died on the date of the attack, thousands more died from diseases linked to exposure during the 9/11 cleanup, and thousands more were injured.

- In May 2021, consumer drones were used to supply drugs, guns, phones, and cash to a Lee County, South Carolina, prison. (Robinson, 2022)

- Explosive attack using drones on an Ecuadorian prison orchestrated by drug cartels in September 2021. (Crumley, 2021)

Internet & Social Media

- The September 11, 2001, attacks: According to the United States Department of Justice, “Evidence strongly suggests that terrorists used the Internet to plan their operations and attacks on the United States on September 11, 2001.” (Thomas, 2003)

- Colonial Pipeline attack: In May 2021, Colonial Pipeline was the target of a ransomware attack that exploited an insecure VPN and shut the pipeline down for several days. The attack resulted in a significant nationwide fuel supply disruption and caused considerable price increases and long lines at gas stations. (United States Senate Committee on Homeland Security & Governmental Affairs, 2021)

Figure 2-3 (Courtesy Jim Lo Scalzo, EPA) (EPA, J. Lo Scalzo, 2021)

- Arab Spring, beginning in December 2010: “The internet and social media were vital tools for mobilizing Arab Spring protesters and documenting some government injustices.” (Robinson K., 2020)

- In a case of alleged cryptocurrency financing of terrorist groups in Syria, Victoria Jacobs, a/k/a Bakhrom Talipov, was indicted in February 2023. Jacobs allegedly laundered $10,661 on behalf of Malhama Tactical by receiving cryptocurrency and Western Union and MoneyGram wires from supporters around the globe and sending the funds to Bitcoin wallets controlled by Malhama Tactical. In addition to sending cryptocurrency, she also purchased Google Play gift cards for the organization, according to the indictment. (Katersky, 2023)

CAN TECHNOLOGY BE INHERENTLY EVIL?

In May 1927, Charles Lindbergh became a national hero and international celebrity. He was the first to fly across the Atlantic, from Long Island, New York, to Paris, France, non-stop and by himself. (National Air and Space Museum, 2023) With the heroic accomplishment came celebrity and, sadly, tragedy. His firstborn child, Charles, Jr., was kidnapped on March 1, 1932, and found murdered on May 12, 1932, a few miles away from the Lindbergh home. (Federal Bureau of Investigation, 2023) Seeking to remove themselves from the spotlight and media frenzy, the Lindberghs moved to Europe.

Still connected with flight, Lindbergh was asked by the U.S. military to travel to Germany to assess German air assets. He made at least three trips to Germany between 1936 and 1939. Hermann Göring awarded Lindbergh a medal known as the Commander Cross of the Order of the German Eagle on behalf of Adolf Hitler. Understandably, there was outrage in the United States and elsewhere when the story was published. Lindbergh discovered that his love of airplanes and the advances they introduced could not attenuate the risks associated with their misuse. In his book Brave Companions, author David McCullough explained Lindbergh’s epiphany this way: “The evil of technology was not in the technology itself, Lindbergh came to see after the war, not in airplanes or the myriad contrivances of modern technical ingenuity, but in the extent to which they can distance us from our better moral nature, our sense of personal accountability.” (Mc Cullough, 1992)

Figure 2-3 Lindbergh accepts medal presented by Hermann Goering on behalf of Adolf Hitler

Source: (Courtesy Minneapolis Star Tribune/ Acme) (Duchshchere, 2022)

In early 1945, President Harry Truman asked Secretary of War Henry Stimson to create and convene a blue-ribbon committee to establish a set of recommendations for the use of atomic weapons during the late stages of WWII, as well as a policy for the post-war use and security of this nascent and potent new technology. Of great concern was the fear that the United States could not “maintain its monopoly” on the technology for long; some members of the committee believed the way to prevent a post-war (United States Department of Energy, 2023)

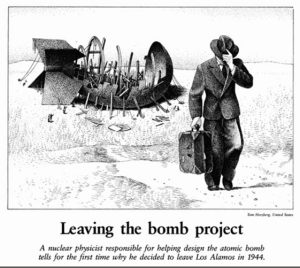

The constant friction between the tremendous power of nuclear weapons and the fear of misuse starts with the Manhattan Project itself. The United States and its allies created the Manhattan Project in response to information from intelligence sources and refugee scientists from Europe, who reported that Germany was working on, and close to perfecting, a weapon of mass destruction that harnessed the immense power of nuclear energy. (National WW II Museum, 2023) One scientist who embodied that friction was Joseph Rotblat. Born in Poland, Rotblat studied science in the United Kingdom and was part of a team that split the atom, which led to the ability to create a nuclear bomb. In light of the widely held belief that Germany was working to create a nuclear bomb, the race was on. As part of a British team collaborating with the Americans, he eventually went to Los Alamos, New Mexico.

While Rotblat was at Los Alamos, he discovered how enormous the project would be to make a nuclear bomb. From this information, he became convinced that given the limitations of Germany in terms of talent, money, and manpower, it would be impossible for Germany to create a nuclear bomb.

In 1989, Rotblat was interviewed as part of the Atomic Heritage Foundation’s “Voices of the Manhattan Project” series.

“I was becoming more and more unhappy about my participation in the project, even without Niels Bohr, for the simple reason that I had begun to realize when I came to Los Alamos the enormity of the project, how much it requires, the enormous manpower required, and the technological resources, to see how much money went into there. Ignoring what was going on in Oak Ridge, Hanford, and Berkeley. There is this enormous effort for the Americans to make the bomb.”

“I could see that the war was coming to an end in Europe, and still it did not look like the bomb would be finished. We still had to grapple with basic issues like the implosion technique. But it became clear to me that [it was] very unlikely the Germans would make the bomb. I did not believe that the Germans could really produce something at less cost in this time, considering their involvement in the war [inaudible] was going on.

It became clearer gradually that the Germans are not going to make the bomb. The only reason really why I worked on the bomb was because of the fear of the Germans. This is the only reason. I would never have worked on this otherwise. This is quite simple, and not to be swayed, [inaudible] my motivation for work on the bomb was becoming invalid. But it was fortified by two events—well, not events. One was an event. This is the remark made by General Groves, which I described.

At that time, it was March of 1944, I was living with the Chadwick’s, and Groves prepared to make a visit to Los Alamos. The notification was he would come to Chadwick’s, because he had become very friendly with Chadwick and would have dinner. Therefore, I was being a resident in the house, therefore I was also there.

This struck in my mind, the shock of it. We had been talking of a general sort over dinner on sort of things. It came down to the project. All of a sudden, he said, “You realize, of course, that one purpose of this project is to subdue the Russians.” To me it came as a terrible shock, because to me the whole premise of the project was quite different.” (Rotblat, Voices of the Manhattan Project, the Joseph Rotblat’s Interview, 1989)

In 1995, Rotblat and the Pugwash organization were jointly awarded the Nobel Peace Prize “for their efforts to diminish the part played by nuclear arms in international politics and, in the longer run, to eliminate such arms.”

In his acceptance speech at age 87, he challenged those who invent, research, work on, or use powerful new technologies. It is a challenge that confronts all of us today and, most notably, today’s students, who will face these existential considerations in the coming years.

“But there are other areas of scientific research that may directly or indirectly lead to harm to society. This calls for constant vigilance. The purpose of some government or industrial research is sometimes concealed, and misleading information is presented to the public. It should be the duty of scientists to expose such malfeasance. “Whistleblowing” should become part of the scientist’s ethos. This may bring reprisals; a price to be paid for one’s convictions. The price may be very heavy, as illustrated by the disproportionately severe punishment of Mordechai Vanunu. I believe he has suffered enough.

The time has come to formulate guidelines for the ethical conduct of scientists, in the form of a voluntary Hippocratic Oath. This would be particularly valuable for young scientists when they embark on a scientific career. The US Student Pugwash Group has taken up this idea – and that is very heartening.

At a time when science plays such a powerful role in the life of society, when the destiny of the whole of mankind may hinge on the results of scientific research, it is incumbent on all scientists to be fully conscious of that role and conduct themselves accordingly. I appeal to my fellow scientists to remember their responsibility to humanity.” (Nobel Prize Outreach, 2023)

Figure 2-4 Leaving Los Alamos

Source: (Courtesy Tom Herzberg, Joseph Rotblat and Bulletin of Atomic Scientists, 1985)

In December 1946, Dr. Karl T. Compton authored an article in The Atlantic entitled “If the Atomic Bomb Had Not Been Used, Was Japan already beaten before the August 1945 Bombings?” In the article, he wrote about his interrogation of a senior Japanese military official, whom he asked what Japan would have done had the bombings of Hiroshima and Nagasaki not occurred. The official answered:

“You would probably have tried to invade our homeland with a landing operation on Kyushu about November 1. I think the attack would have been made on such and such beaches…. It would have been a very desperate fight, but I do not think we could have stopped you………. We would have kept on fighting until all Japanese were killed, but we would not have been defeated,” by which he meant that they would not have been disgraced by surrender. (Compton, 1946)

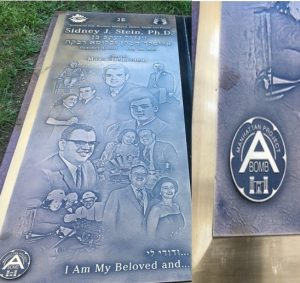

Dr. Sidney J. Stein was this author’s great-uncle. As a child, I would hear stories about the project he worked on for the government, which was crucial in ending World War II. During my high school and college years, we talked about the challenge of creating technology that can be used to kill massive numbers of humans. His response was always one of pride and introspection. Like all the scientists who worked on the Manhattan Project, he knew the war had to end. Although none of us will ever know with certainty, there is no dispute that an invasion of the Japanese mainland would have exacted a heavy toll upon Japan and the Japanese people. Most scholars, military experts, and historians believe that the United States and its allies would also have suffered enormous casualties. Estimates range from fifty thousand deaths in the initial ground invasion alone (Compton, 1946) to tens of millions. (Jenkins, 2016) According to his obituary, written by his son Michael, “Sid remembered hearing that the bombs had been dropped and that the Japanese had surrendered. ‘We were thrilled knowing we had shortened the war and saved lives.’” Stein was decorated for his work. As fate would have it, the company he founded in 1962, Electro Science Laboratories, maintained its Asian headquarters in Tokyo, Japan, for decades, employing many Japanese citizens. (Stein, 2015)

Figure 2-5 Sidney J. Stein Grave, Frazer, Pa.

Source: (Courtesy Stein Family) (Stein, 2015)

IT’S 2023 AND CONCERN OVER HUMAN MISUSE OF TECHNOLOGY GROWS

From Artificial Intelligence (“AI”), Machine Learning (“ML”), and Quantum Computing to Genomics, Robotics, 3D Printing, and Virtual Reality, the world is seeing breakthroughs in technology at a breathtaking pace.

In 1950, Alan Turing, a mathematician and one of the first computer scientists, authored a paper entitled “Computing Machinery and Intelligence.” In the article, he sought to discuss and answer the question: can machines think?

Part of his hypothesis required a method to determine whether a given output was produced by a machine or by a human. To test his hypothesis, he called for a human interrogator to examine the answers to questions asked of humans and machines and determine whether a human or a machine created each response. Turing was particularly keen on an “infinite capacity computer.” He envisioned something like what we might today call Quantum Computing, adding storage and processing capacity as needed depending on the amount of data ingested and the processing capacity required for more complex information sets. He is widely considered one of the progenitors of Artificial Intelligence and Machine Learning. (Turing, 1950)

Dr. Turing’s allusion to ever-growing capacity and storage foreshadowed what would eventually become Moore’s Law. Gordon Moore, co-founder of what is today known as the Intel Corporation, postulated that the number of transistors on a chip doubles roughly every two years, and that processing power grows with it. Simultaneously, as the number of transistors increases, the cost per transistor falls, thereby reducing cost while allowing the processing power of computer chips to grow exponentially. (Moore, 1965)
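To make the arithmetic of that doubling concrete, here is a minimal sketch in Python. It assumes the idealized two-year doubling period described above. The function name `projected_transistors` and the starting count of 1,000 transistors are hypothetical and chosen only for illustration; they are not historical chip data.

```python
def projected_transistors(initial_count: float, years: float,
                          doubling_period_years: float = 2.0) -> float:
    """Project a transistor count under an idealized Moore's Law.

    Assumes the count doubles once every `doubling_period_years`
    (two years by default, as described in the text).
    """
    if doubling_period_years <= 0:
        raise ValueError("doubling_period_years must be positive")
    # Number of doublings that fit into the elapsed time.
    doublings = years / doubling_period_years
    return initial_count * 2 ** doublings


if __name__ == "__main__":
    start = 1_000  # hypothetical starting count, for illustration only
    for elapsed in (0, 2, 10, 20):
        count = projected_transistors(start, elapsed)
        print(f"after {elapsed:2d} years: {count:,.0f} transistors")
```

Under these assumptions, ten years allows five doublings (a 32-fold increase) and twenty years allows ten doublings (a 1,024-fold increase), which is why exponential growth so quickly outpaces intuition.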

Exponentially growing processing power and Quantum Computing gave rise to the fission-like explosive growth of automation, AI, and ML. With this rapid development comes the challenge of misuse or mishap at scale. Robotics, Automation, AI, and ML have already demonstrated the ability to improve our lives, yet they present the same existential threats as nuclear energy, electromagnetic pulse technology, lasers, and biological and chemical agents, to name a few.

One glance at any news source will undoubtedly reveal reports of the many benefits of AI, ML, and the like, alongside other stories about the threats these technologies may pose. Students and technology professionals will be required to decide whether to use technology, how to use it, what limits to place on the international, national, commercial, and personal use of these new technologies, and what counter technologies or other measures to adopt to deal with misuse or unintended consequences. Whatever is written on these pages today will undoubtedly be outdated months after publication. There is precious little we can accurately predict about ten or twenty years in the future, much less twenty minutes from now. Therefore, this chapter intentionally does not focus on currently perceived or known threats from technology; instead, our focus has been on what history has shown us and what precautions, legislation, countermeasures, or detection methods can be implemented to prevent the risks we know exist from becoming a reality. In no particular order, here are some examples of potential defenses.

JUST BECAUSE WE CAN DOES NOT MEAN WE SHOULD

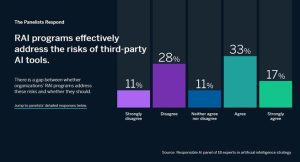

As discussed throughout this chapter, risk/benefit analysis is essential before introducing such new technologies into public, private, commercial, or defense environments. Since most AI technology available today is created by third parties (“TPAI”), a significant analysis must be performed to assess the risks associated with its use, a practice that has been referred to as Responsible Artificial Intelligence (“RAI”). To that end, the MIT Sloan Management Review and Boston Consulting Group surveyed 18 panelists involved in considering or actually implementing TPAI in their organizations.

Figure 2-6 RAI Survey April 2023

Source: (Courtesy MIT Sloan Management Review) (Renieris, 2023)

As the small but instructive survey reveals, there is not yet enough agreement on, or confidence in, using RAI to analyze the risks and benefits of TPAI. If the people deciding whether TPAI is a sound, safe, and responsible choice for their organization lack confidence in their analytical tools or processes, how can they implement the technology ethically?

WHAT ARE THE ETHICAL AND LEGAL CONSIDERATIONS?

Given the nascent state of the use of AI, ML, and other new technology, and the rapid rate of their development and implementation, the law, ethics, and morality of specific use cases and scenarios are taking a back seat. However, this is not to say that no guiding principles exist for creating, using, or restricting the use of AI, ML, or their progeny.

Isaac Asimov was a Russian immigrant to America who eventually served as a biochemistry professor at Boston University. In addition, he was a prolific writer who became enamored with science fiction as a child by reading small “pulp” magazines on the subject in his family’s candy store. Later on, Asimov wrote a series of short stories and novels between 1940 and 1945, eventually including them in his 1950 compilation entitled I, Robot. One story, written in 1941, was entitled “Runaround.” In Runaround, Asimov introduced the “Three Laws of Robotics,” also known as Asimov’s Laws.

First Law: A robot may not injure a human being or, through inaction, allow a human being to come to harm.

Second Law: A robot must obey the orders given to it by human beings except where such orders would conflict with the First Law.

Third Law: A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws. (Asimov, 1942)

The fact that Asimov created his laws for robots points to a more critical issue: whether it is the technology or the human that needs regulation. If technology could determine its own use, it could be engineered with internal self-limitations to prevent misuse. A case in point is firearms. For as long as they have existed, guns have been used in war, law enforcement, and self-defense. The Second Amendment to the United States Constitution (United States of America, 1791) establishes the right to own (“bear”) arms. For the same period, firearms have been misused or accidentally used in ways society finds unacceptable. According to the Judicial Learning Center: “Laws are rules that bind all people living in a community. Laws protect our general safety and ensure our rights as citizens against abuses by other people, by organizations, and by the government itself. We have laws to help provide for our general safety.” (Judicial Learning Center, 2019)

What happens when these laws and regulations are broken or violated, and a person has been proven guilty of the offense? [2] Does a punishment regime exist? Why is there punishment? The four primary philosophical reasons for punishment in a system of laws are:

Retribution: Punishment serves the purpose of retribution when it simply retaliates (or gets even) by inflicting pain or discomfort proportionate to the offense.

Incapacitation: Punishment serves the purpose of incapacitation when it prevents offenders from being able to repeat an offense. The most popular form of incapacitation today is incarceration.

Deterrence: Punishment serves the purpose of deterrence when it causes offenders to refrain from committing offenses again (individual deterrence) or when it serves as an example that keeps others from committing criminal acts (general deterrence).

Rehabilitation: The purpose of rehabilitation is to change offenders through proper treatment. (Cherrington, 2007)

The greatest challenge in regulating AI, ML, and similar technologies is the new reality of scale and speed. As of this writing, the use of AI through consumer products such as ChatGPT is already increasing and will require more work to regulate effectively. Students will undoubtedly be confronted with the challenge of resolving these and many more ethical and legal challenges presented by AI, ML, and other self-developing autonomous technology. The most vexing challenge may not be whether humans will obey the laws created to regulate technology but whether and how long it will take them to decide to abide by them.

Figure 2-7 War Games Movie Nuclear War Machine Learning Scene

Source: (Courtesy United Artists 1983) (Badham, 1983)

COUNTER-AI

The final and most important part of our discussion deals with the question of what happens when, not if, AI, ML, or similar technology goes awry or becomes a hacker itself. No less than the esteemed cryptography expert Bruce Schneier distilled the problem this way:

“There are really two different but related problems here. The first is that an AI might be instructed to hack a system. Someone might feed an AI the world’s tax codes or the world’s financial regulations, with the intent of having it create a slew of profitable hacks. The other is that an AI might naturally, albeit inadvertently, hack a system. Both are dangerous, but the second is more dangerous because we might never know it happened.” (Schneier, 2021)

The risk of conflict increases with the world’s nations all racing to become the leader in AI technology. Governments and non-state actors are experimenting with its capabilities. The experiments may produce unexpected results or even cause unintended harm. Much like crowded airspace in a war zone, the risk of conflict is already significant, and the risk of accidental events causing conflict may be even greater. Even the intended results of one nation testing and implementing various AI technologies might be deemed an existential threat by another, which, in turn, could create a scenario where virtual technology leads to kinetic engagement. The risk of an AI failure, attack, or unexpected result exists from pipelines to power plants and air travel to atomic energy. As more and more AI systems develop, the risk of unintended consequences grows. Prudence dictates that the creation of Counter AI technology and protocols happen rapidly. Professor M. A. Thomas of the United States Army School of Advanced Military Studies described the urgent challenge to develop Counter AI systems this way:

“The singular strategic focus on gaining and maintaining leadership and the metaphor of an “arms race” is unhelpful, however. Races are unidimensional, and the winner takes all. Previous arms races in long-range naval artillery or nuclear weapons were predicated on the idea that advanced tech would create standoff, nullifying the effects of the adversary’s weapons and deterring attack. But AI is not unidimensional; it is a diverse collection of applications, from AI-supported logistics and personnel systems to AI-enabled drones and autonomous vehicles. Nor does broadly better tech necessarily create standoff, as the US military learned from improvised explosive devices in Afghanistan. This means that in addition to improving its own capabilities, the United States must be able to respond effectively to the capabilities of others. In addition to its artificial intelligence strategy, the United States needs a counter-AI strategy.” (Thomas M. A., 2020)

CONCLUSIONS

We are living through the beginning of an unprecedented period of development of new technology, with power and speed never before seen. Indeed, the introduction of atomic weapons was earth-shattering at the time. However, its development took many years and thousands of people to perfect. With AI and automation technology, the need for thousands of humans in many, if not all, endeavors of discovery or invention may decrease to only a few or none. It is the hope of this author and the members of the Wildcat Team that the students and professionals who read this chapter use it as a call to action. The promise and danger of this new technology are both unlike anything the world has previously witnessed. With that tremendous power comes challenge, and like it or not, the burden of finding answers to the questions raised in this chapter and in this book will fall upon its readers. Here is wishing Godspeed to all those who take on the challenge, and may their efforts result in a safer and more peaceful world.

REFERENCES

Asimov, I. (1942). Runaround. New York: Street & Smith.

Badham, J. (Director). (1983). War Games [Motion Picture].

Cambridge Dictionary. (2023, April 27). Transhumanism. Retrieved from Cambridge Dictionary: https://dictionary.cambridge.org/us/dictionary/english/transhumanism

Cherrington, D. J. (2007, April 1). Crime and Punishment: Does Punishment Work? Retrieved from Brigham Young Scholars Archive: https://scholarsarchive.byu.edu/cgi/viewcontent.cgi?article=1953&context=facpub

Compton, K. T. (1946, December). If the Atomic Bomb Had Not Been Used: Was Japan already beaten before the August 1945 bombings? The Atlantic, pp. 54, 55.

Crumley, B. (2021, September 14). Drones drop explosives in Ecuador prison attack by suspected drug cartels. Retrieved from Drone DJ: https://dronedj.com/2021/09/14/drones-drop-explosives-in-ecuador-prison-attack-by-suspected-drug-cartels/

Daily Mail. (2021, November 22). Blow-by blow account of how Christmas parade pandemonium unfolded as SUV driver barreled through crowd and killed at least five. Retrieved from Daily Mail Online: https://www.dailymail.co.uk/news/article-10230313/Waukesha-Christmas-parade-horror-Timeline-carnage-unfolded.html

Director of National Intelligence. (2018, March 30). Planning and Preparedness Can Promote an Effective Response to a Terrorist. Retrieved from Director of National Intelligence: https://www.dni.gov/files/NCTC/documents/jcat/firstresponderstoolbox/First-Responders-Toolbox—Planning-Promotes-Effective-Response-to-Open-Access-Events.pdf

Duchschere, K. (2022, June 3). Was Charles Lindbergh a Nazi sympathizer? Retrieved from Star Tribune: https://www.startribune.com/charles-lindbergh-little-falls-world-war-2-nazi-germany/600178871/

Environmental Protection Agency, James Lo Scalzo. (2021, May 19). Colonial Pipeline confirms it paid $4.4m ransom to hacker gang after attack. Retrieved from The Guardian: https://www.theguardian.com/technology/2021/may/19/colonial-pipeline-cyber-attack-ransom

Federal Bureau of Investigation. (2023, April 6). Lindbergh Kidnapping. Retrieved from FBI: https://www.fbi.gov/history/famous-cases/lindbergh-kidnapping

Folger Shakespeare Library. (2015, July 10). Now Thrive the Armorers: Arms and Armor in Shakespeare. Retrieved from Folgerpedia: https://folgerpedia.folger.edu/Now_Thrive_the_Armorers:_Arms_and_Armor_in_Shakespeare

Hayles, N. K. (1999). How We Became Posthuman: Virtual Bodies in Cybernetics, Literature, and Informatics. Chicago, IL: University of Chicago Press.

Himelein-Wachowiak, M., et al. (2021, May 20). Bots and Misinformation Spread on Social Media: Implications for COVID-19. Retrieved from Journal of Medical Internet Research: https://www.ncbi.nlm.nih.gov/pmc/issues/380959/

Jacksonville.com, F. T. (2017, October 2). Las Vegas attack is deadliest shooting in modern US history. Retrieved from Florida Times Union: https://www.jacksonville.com/story/news/nation-world/2017/10/02/las-vegas-attack-deadliest-shooting-modern-us-history/15775260007/

Jenkins, P. (2016, May 18). Back to Hiroshima: Why Dropping the Bomb Saved Ten Million Lives. Retrieved from ABC Religion & Ethics: https://www.abc.net.au/religion/back-to-hiroshima-why-dropping-the-bomb-saved-ten-million-lives/10096982

Judicial Learning Center. (2019). What is a Law? Retrieved from Judicial Learning Center: https://judiciallearningcenter.org/law-and-the-rule-of-law/#:~:text=Laws%20protect%20our%20general%20safety,provide%20for%20our%20general%20safety.

Katersky, A. (2023, February 1). New York City woman charged with financing terrorist groups in Syria through cryptocurrency. Retrieved from ABC News: https://abcnews.go.com/US/new-york-city-woman-charged-financing-terrorist-groups/story?id=96818461

Library of Congress. (2023, April 15). Military Technology in World War I. Retrieved from Library of Congress: https://www.loc.gov/collections/world-war-i-rotogravures/articles-and-essays/military-technology-in-world-war-i/

Ling, W. (1947). On the Invention and Use of Gunpowder and Firearms in China. Isis, Vol 37, 167.

Lonstein, W. (2018, April 12). Governments and Businesses Are Becoming Inebriated by Technology. Retrieved from Forbes: https://www.forbes.com/sites/forbestechcouncil/2018/04/12/governments-and-businesses-are-becoming-inebriated-by-technology/?sh=4d52c7c148df

McCullough, D. (1992). Brave Companions: Portraits in History. New York, NY: Simon & Schuster.

Moore, G. E. (1965, April 19). Cramming more components onto integrated circuits. Electronics, Volume 38, Number .

National Academies of Sciences, Engineering, and Medicine. (2018). Reducing the Threat of Improvised Explosive Device Attacks by Restricting Access to Explosive Precursor Chemicals. Washington DC: The National Academies Press.

National Air and Space Museum. (2023, April 20). The First Solo, Nonstop Transatlantic Flight. Retrieved from National Air and Space Museum: National Air and Space Museum

National Institute on Drug Abuse. (2019). Research Report – How is methamphetamine misused? Baltimore, MD: National Institute on Drug Abuse.

National WW II Museum. (2023, April 5). The Manhattan Project. Retrieved from National WW II Museum: https://www.nationalww2museum.org/war/topics/manhattan-project

Nobel Prize Outreach. (2023, April 1). Joseph Rotblat Facts and Lecture. Retrieved from The Nobel Prize: https://www.nobelprize.org/prizes/peace/1995/rotblat/lecture/

Peralta, E. (2016, June 12). A List Of The Deadliest Mass Shootings In Modern U.S. History. Retrieved from NPR: https://www.npr.org/sections/thetwo-way/2016/06/12/481768384/a-list-of-the-deadliest-mass-shootings-in-u-s-history

Renieris, E. M. (2023, April 20). Responsible AI at Risk: Understanding and Overcoming the Risks of Third-Party AI. Retrieved from MIT Sloan Management Review: https://sloanreview.mit.edu/article/responsible-ai-at-risk-understanding-and-overcoming-the-risks-of-third-party-ai/

Robinson, H. (2022, February 3). 20 people arrested in connection to drone attacks at Midlands prison. Retrieved from WIS TV: https://www.wistv.com/2022/02/03/20-people-arrested-connection-drone-attacks-midlands-prison/

Robinson, K. (2020, December 3). The Arab Spring at Ten Years: What’s the Legacy of the Uprisings? Retrieved from Council on Foreign Relations: https://www.cfr.org/article/arab-spring-ten-years-whats-legacy-uprisings

Rotblat, J. (1985). Leaving the bomb project. Bulletin of the Atomic Scientists Volume 41, Issue 7, 16-19.

Rotblat, J. (1989, October 12). Voices of the Manhattan Project: Joseph Rotblat’s Interview. (M. J. Sherwin, Interviewer)

San Diego County Gun Owners. (2017, October 1). A message on the tragedy in Las Vegas. Retrieved from San Diego County Gun Owners: https://sandiegocountygunowners.com/project/message-tragedy-las-vegas/

Satia, P. (2019). What guns meant in eighteenth-century Britain. Palgrave Commun 5, 105.

Schneier, B. (2021, April). The Coming AI Hackers. Retrieved from The Harvard Kennedy School Belfer Center: https://www.belfercenter.org/publication/coming-ai-hackers

Shepherd, A. D. (2022). From Spark and Flame: a Study of the Origins of Gunpowder. Tenor of Our Times, Vol. 11, Article 12, 2. Retrieved from Tenor of Our Times.

Smart, J. K. (1997). History of Chemical and Biological Warfare: An American Perspective. Aberdeen Proving Ground, MD: U.S. Army.

Spielberg, S. (Director). (1993). Jurassic Park [Motion Picture].

Stein, M. (2015, August 15). A Portrait of Sid Stein. Retrieved from Find A Grave: https://www.findagrave.com/memorial/193235542/sidney-j-stein

The 9/11 Commission. (2004). The 9/11 Commission Report. Washington, DC: United States of America.

The Economic Times. (2022, November 17). Christmas parade killer Darrell Brooks gets 1,067 years of sentence, judge breaks down in tears, says ‘heart-wrenching’. Retrieved from The Economic Times: https://economictimes.indiatimes.com/news/international/uk/christmas-parade-killer-gets-1067-years-of-sentence-judge-breaks-down-in-tears-says-heart-wrenching/articleshow/95582665.cms

Thomas, M. A. (2020, Spring). Time for a Counter-AI Strategy. Strategic Studies Quarterly, p. 3.

Thomas, T. L. (2003). Al Qaeda and the Internet: The Danger of “Cyberplanning”. Washington, D.C.: United States Department of Justice Office of Justice Programs.

Turing, A. M. (1950). Computing Machinery and Intelligence. Mind 59, 433-460.

United States of America. (1791, December 15). Second Amendment to the United States Constitution. United States Constitution. Washington, DC: United States.

United States Senate Committee on Homeland Security & Governmental Affairs. (2021). Testimony of Joseph Blount, CEO Colonial Pipeline. United States Senate Transcript (p. 4). Washington DC: United States Congress.

United States Department of Energy. (2023, April 5). The Manhattan Project, an Interactive History. Retrieved from U.S. Department of Energy – Office of History and Heritage Resources: https://www.osti.gov/opennet/manhattan-project-history/Events/1945/debate.htm

William, West Agence France-Presse — Getty Images. (2021, August 21). Protests in Melbourne. Retrieved from New York Times: https://static01.nyt.com/images/2021/08/21/world/21virus-briefing-oz-protests/merlin_193494093_43aeb0fb-7671-4f97-858d-47ab6368be91-superJumbo.jpg?quality=75&auto=webp

World Health Organization. (2023, April 16). World Health Organization. Retrieved from https://covid19.who.int/: https://covid19.who.int/

Xinhua Net. (2017, December 24). Across China: Wooden slips reveal China’s first emperor’s overt pursuit of immortality. Retrieved from Xinhua Net: http://www.xinhuanet.com/english/2017-12/24/c_136848720.htm

Zhuang, Y. (2021, August 21). In Melbourne, Australia, a protest against Covid restrictions turned violent. Retrieved from New York Times: https://www.nytimes.com/2021/08/21/world/australia/melbourne-protests-covid-restrictions.html

Zoli, C. (2017, October 2). Is There Any Defense Against Low-Tech Terror? Retrieved from Foreign Policy: https://foreignpolicy.com/2017/10/02/terror-has-gone-low-tech/

ENDNOTES

[1] It is not lost on the author that many nations and groups have used and abused technology in ways that most of humanity found offensive, improper, and inhumane. Hitler, Pol Pot, and Stalin misused powerful technologies most brutally and horrifically, leading to the deaths of millions of innocents. Tyranny, mental illness, and cults are examples where group oversight, consideration, and debate failed… (San Diego County Gun Owners, 2017)

[2] This presumes that the system of laws and punishments provides a method of adjudication with the presumption of innocence, the right of the accused to present a defense and confront the accuser, and a determination of guilt or innocence by a neutral judge or jury of peers.