Chapter 1: The Role of Information Technology

J.J.C.H. Ryan

Student Learning Objectives:

After completing this block, the student will be able to use the conceptualization of an OODA loop in order to:

— describe the role of automated decisions in UAS operations

— analyze communications pathway weaknesses between UAS components

— identify points of attack in a notional UAS architecture

— explain types of sensing and how they are used to support decision making

–– ideate countermeasures to UAS operations

Introduction

In counter unmanned aerial systems (C-UAS) operations, there are essentially two ways to counter UAS activity: physically interfere with the system(s) or virtually interfere with the system(s). In this text, a wide variety of methods will be presented that employ one or both of these approaches. It is useful to have a structure within which to consider those methods, which is why this chapter comes first.

A UAS is, at its most abstract, an information processing system. Data is sensed, processed, shared, and communicated in order to control flight parameters (speed, altitude, etc.), internal sensors, external sensors, navigation, and mission execution. Data can be shared internally and externally, with other UASs, ground control elements, and computational backend systems. But this abstraction hides an incredible complexity of configuration. The various configurations of UASs range from stratospheric balloons (Loon LLC, 2020) (Sampson, 2019) to high altitude jets (AirForceTechnology.com, 2019) to hobbyist quadcopters (Fisher, 2020). Uses for UASs include surveillance, communications, weapons deployment, and entertainment. They exist in single system configurations, multiple element collaborations, and swarms. Simply put, the complexity and numbers of UAS configurations are legion. Therefore, it can be useful to abstract a construction of a UAS in order to have a way of discussing the issues without being bound by and constrained by implementation details.

In such an abstract description, a UAS consists of at least the following elements: a propulsion system, a control system, and a housing system. The propulsion system is what provides the mechanisms for flight and maneuver. The control system, which may be partially or completely autonomous, is what provides guidance to the UAS. The housing system is the physical structure that brings all components together to create a single operational UAS. A UAS may also include sensors, decision-making systems, communications systems, weapons, and defensive systems. Of all these components, only one may be bereft of information technology: the housing system. It follows, then, that understanding the role of IT in UAS operations is critical to understanding such mission-critical elements as targeting, effects, and execution of counter UAS activities.

Note that UAS operations could (and probably should) consider C-UAS actions prior to actual execution of a mission. In considering the potential C-UAS actions that a particular mission might encounter, the operators of a UAS might engage in counter C-UAS (CC-UAS) activities. These might include mission planning to avoid C-UAS capabilities, hardening of systems to resist C-UAS actions, and engaging in deception activities to confuse or deny C-UAS action effects. Simply put, considering how adversaries might try to disrupt and deny mission execution gives operators the opportunity to plan ways to subvert those adversarial activities. Thus, a mission planner needs to plan not only how to execute the mission but also how to mitigate the actions that an adversary will take to thwart the mission. From the other perspective, a C-UAS operator must consider that an adversary might anticipate the C-UAS actions and have prepared alternatives and defenses. Whether you are Blue or Red in this scenario, the other side gets a vote.

Table 1-1 is a simple exploration of how UAS, C-UAS, and CC-UAS operations relate to each other. The entries are notional and are not intended to be a complete exposition; they are offered as a way to more easily integrate the concepts into a single operational construct.

Table 1-1 UAS, C-UAS and CC-UAS Operations Relationships

| UAS operations | Counter-UAS operations | Counter Counter-UAS operations |
| --- | --- | --- |
| Flight path | MIJI activities | Redundant systems; alternative flight paths |
| Surveillance | Dazzling; camouflage | Multiple types and numbers of sensors with different capabilities |
| Swarm coordination | Communications interference | Redundant channels |

Source: Ryan, J.J.C.H (2020) Private Notes

When you think through these possibilities, it becomes clear that the potential for physical interference to UAS operations is limited: you can shoot down a UAS, but that’s about it. But shooting down a UAS can be tricky, especially if the UAS is operating very remotely (like a stratospheric balloon) or in a swarm (where there are too many UAS to target individually). Plus, shooting down a UAS can deny the mission but is pretty darn obvious. A more subtle C-UAS operation might be to hijack the data feed or cause the UAS to operate in an area slightly different from the goal target. So, the real target may very well be the information systems embedded in a UAS.

Disrupting the Decision Cycles

To oversimplify significantly, the importance of embedded information processing technologies is to support and enhance decision-making capability. Should the UAS change course? Does the UAS have enough fuel to get home? If not, what should happen? Is the UAS about to fly into a tree? The entire flight of a UAS, whether alone or in a swarm, is filled with the need to make and execute decisions.

The point of integrating advanced information technologies into UASs is to speed up the ability to make and execute appropriate decisions. Those two phrases, “make and execute” and “appropriate”, are critical to understanding the problem space. “Make and execute” implies data input to a decision-making system, data output from such a decision-making system, and a triggering mechanism for a decision acting element. “Appropriate” implies that the decision and triggering processes have been thoroughly tested to comply with the rules of engagement and the policies that exist for the mission profile. These are decision cycles: a decision is made based on input, an action is taken based on the decision, and the situation is reassessed to see if further action is needed. Rinse and repeat, as needed.

The point of attacking information technologies in UASs is to disrupt or deceive the decision cycle, for one or more purposes. Therefore, it is useful to have a short discussion on conceptualizing decision cycles. There are many different ways to conceptualize how decisions are formed, but one that has currency and broad-based acceptance is the OODA Loop, first conceptualized by John Boyd (Richards, 2012) and updated by many, including Julie Ryan in 1996 (Nichols, Ryan, & Ryan, 2000). There have been many other contributors to the nuanced application of the OODA Loop as well, including criticisms (Forsling, 2018). The point is that the model is useful, but only as far as the nuanced application of it allows. Further, the model was developed in a time when decisions were definitely restricted to the human brain, hence the development of OODA 2.0 (Nichols, Ryan, & Ryan, 2000, pp. 477-488). Both versions of the model are useful in planning C-UAS activities. See Figure 1-1.

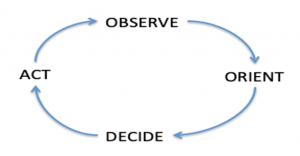

The original OODA Loop is normally simplified to a simple loop that encompasses four steps connected with arrows. The four steps comprise a decision cycle. The first step is to observe what is going on. The second step is to orient those observations within the context of the environment and activities. The third step is to create candidate decisions based on the observations, the orientation, and mission. The fourth step is to act on the decision(s) that are deemed appropriate. Finally, the cycle repeats as needed. The following diagram depicts the OODA Loop as normally drawn:

Figure 1-1: Simplified OODA Loop

Source: (Richards, 2012)

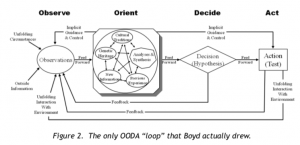

The literature is careful to point out, however, that the OODA Loop conceptualized by Boyd was much more nuanced, considering the role of feedback, mental biases, and experience level throughout the entire model. Figure 1-2, taken from (Richards, 2012), shows the version of the OODA Loop drawn by Boyd:

Figure 1-2: Boyd’s Drawing of the OODA Loop

Source: (Richards, 2012)

Both versions of the OODA Loop capture the essence of the process, in that a decision is made as a result of observing something in the environment that can be characterized (oriented) as something worth acting upon.
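The four steps of the simplified loop can be sketched in a few lines of Python. This is only an illustration of the cycle's structure: the sensor fields, the 50-meter safety range, and the action names are all hypothetical and are not drawn from any real UAS.

```python
from dataclasses import dataclass

@dataclass
class Observation:
    altitude_m: float        # hypothetical sensed altitude
    obstacle_range_m: float  # hypothetical range to the nearest obstacle

def observe(raw_reading):
    """Observe: turn a raw (altitude, range) sensor reading into an observation."""
    altitude_m, obstacle_range_m = raw_reading
    return Observation(altitude_m, obstacle_range_m)

def orient(obs, safe_range_m=50.0):
    """Orient: place the observation in context (is the obstacle a threat?)."""
    return "obstacle_close" if obs.obstacle_range_m < safe_range_m else "clear"

def decide(situation):
    """Decide: choose an action for the oriented situation."""
    return "climb" if situation == "obstacle_close" else "hold_course"

def act(decision, log):
    """Act: carry out the decision (here, it is only recorded)."""
    log.append(decision)

def run_ooda(raw_readings):
    """Run one full decision cycle per reading; the cycle repeats as needed."""
    log = []
    for raw in raw_readings:
        obs = observe(raw)
        situation = orient(obs)
        decision = decide(situation)
        act(decision, log)
    return log

actions = run_ooda([(100.0, 200.0), (100.0, 30.0)])
```

Each pass through the loop depends on what was observed, so anything that corrupts the observation or the orientation step changes the action that follows.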

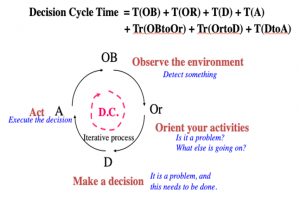

An interesting way of conceiving of this process includes layering time over the various steps. Using the simplistic version, simply to control the resultant complexity of the diagram, one can conceive that there are hard physical limits to each step of the process. Hard physical limits derive from the speed of light, the speed of neural transmission, the speed of thought conversion from sight to context, and the speed at which cognition occurs. These hard physical limits, when characterized in scenarios, describe the ultimate maximum speed at which any decision cycle can occur. Figure 1-3 shows the simple version of the OODA Loop with such time overlays.

Figure 1-3: Time Elements of the OODA Loop

Source: (Ryan, Lecture Notes, EMSE 218/6540/6537, 1997)

The time elements shown include the times required to execute any of the given steps (T) plus the time to transition between steps (Tr). When the laws of physics and neurobiology have been pushed to their limits, there is a hard stop as to how fast this cycle can be executed.
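As a rough sketch of this accounting, the time for one pass through the loop is the sum of the step times (T) and the transition times (Tr). The code below assumes four transitions per cycle, including the return from Act to Observe; that count and every timing value in it are illustrative assumptions, not measurements.

```python
STEPS = ("observe", "orient", "decide", "act")

def cycle_time(step_times, transition_times):
    """Total time for one pass through the loop: sum of T plus sum of Tr."""
    return sum(step_times[step] for step in STEPS) + sum(transition_times)

def cycles_per_second(step_times, transition_times):
    """Upper bound on how often the loop can complete, given its time budget."""
    return 1.0 / cycle_time(step_times, transition_times)

# Hypothetical timings in seconds, for illustration only.
human_steps = {"observe": 0.2, "orient": 0.3, "decide": 0.5, "act": 0.5}
human_transitions = [0.05, 0.05, 0.05, 0.05]  # assumed: four transitions per cycle

human_cycle_s = cycle_time(human_steps, human_transitions)
```

Because the total is a plain sum, shortening any single step or transition shortens the whole cycle, and lengthening any one of them (for example, by delaying an observation) slows every decision that follows.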

Effective management of any situation depends on making decisions, typically with less than perfect data. Waiting for perfect data is a recipe for being last in the race to action but jumping into action with data that is imperfect is risky. When the potential impacts of a decision are low, then the pressure to be absolutely correct is reduced. When the impacts of a decision are high, including perhaps causing death or committing an act of war, then the requirement for better data is concomitantly high.

On the other hand, the faster a decision is made, and the necessary action executed, the faster the results occur. Fast, effective, and appropriate decisions depend on experience, education, and supporting capabilities. When a decision is needed very quickly, automation of some or many components of the system is a must.

Advanced information technology allows us to “cheat”, as it were. Incorporating advanced processing and automated reasoning enables a rethinking of this abstraction. Consider: what if all possible flight paths, potential scenarios, and problem sets were modeled prior to any need for a decision to be made? Would that change the need for observation? What if all possible decisions based on all possible scenarios were categorized and stored prior to the mission? Would that change the need for real-time analysis of potential courses of action?

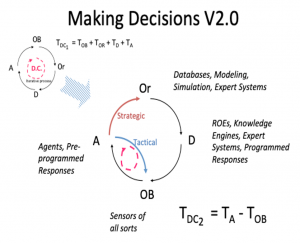

The answer is, of course: yes. This technology-enhanced decision cycle can be modeled as OODA Loop 2.0, which isn’t actually an OODA loop at all but an ODAO Loop. Figure 1-4 shows the modified OODA Loop 2.0, with some technology suggestions associated with each step.

In this OODA 2.0 variation, preliminary preparation using databases, modeling, simulations, and expert systems provides a rich backdrop to the potential mission, allowing strategists to work with tacticians to flesh out the potential variations that the mission can involve. Based on these comprehensive analyses, a set of decisions can be predetermined, not unlike the decisions that are programmed into autonomous vehicles of all sorts. Decision parameters, such as values, rules of engagement, and geopolitical considerations, are integrated with expert systems in order to create a rich environment of allowable decision responses under certain conditions. Note that the conditions must be completely describable as well: that is necessary in order to characterize the observables that comprise the triggering actions. That set of activities, with those types of technologies, creates the Orient and Decide phases of the OODA 2.0.

When those are completed, a system instrumented with sufficient sensors and actuated elements can wait for the conditions to be met that trigger a decision. The decision is triggered automatically, which causes the preprogrammed actions to be taken, and then the system goes back to observing. When considering this variation, it is useful to point out that the OODA 2.0 includes not one but two decision cycles: a tactical decision cycle and a strategic decision cycle. Both are critically important to the speed of operations, and both are points of vulnerability. Feedback is provided in two ways: strategically to the expert systems that model potential outcomes and inform decision options, and tactically to the observation sensors.
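A minimal sketch of the tactical cycle follows, assuming the strategic cycle has already produced a table of approved responses. The conditions, the 20 percent fuel reserve, and the action names are hypothetical; the point is only that the real-time work reduces to matching observables against predetermined triggers.

```python
# Strategic cycle (before the mission): Orient and Decide are done in advance
# and stored as a table from describable conditions to approved actions.
# All conditions and actions here are hypothetical examples.
PRECOMPUTED_DECISIONS = {
    ("link_lost", "fuel_ok"): "fly_to_holding_area",
    ("link_lost", "fuel_low"): "land_at_contingency_field",
    ("link_ok", "fuel_low"): "return_to_base",
}
DEFAULT_ACTION = "continue_mission"

def classify(reading, fuel_reserve_pct=20.0):
    """Map raw sensor values onto the describable conditions used as triggers."""
    link = "link_ok" if reading["link_up"] else "link_lost"
    fuel = "fuel_low" if reading["fuel_pct"] < fuel_reserve_pct else "fuel_ok"
    return (link, fuel)

def tactical_cycle(readings):
    """Observe, look up the preprogrammed action, act, then observe again."""
    actions = []
    for reading in readings:
        condition = classify(reading)
        actions.append(PRECOMPUTED_DECISIONS.get(condition, DEFAULT_ACTION))
    return actions

actions = tactical_cycle([
    {"link_up": True, "fuel_pct": 80.0},
    {"link_up": False, "fuel_pct": 10.0},
])
```

The lookup is fast because no analysis happens in flight, which is also why a falsified observable is dangerous: the table responds to whatever condition it is shown.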

These decision cycles occur at the speed of computational processes and electronic communications, which is to say: very fast. There are two case studies that inform the design and use of systems employing the OODA 2.0 approach: the stock market crash of 1987 and the Vincennes tragedy of 1988.

The stock market crash of 1987 was the result of automated elements (bots) deployed in financial transaction systems to speed up the purchase or sale of assets in order to react to market conditions faster. In 1987, the number of bots had risen to the point that when the market moved in a certain direction, the bots reacted, as programmed, to buy or sell. These actions were detected and acted upon by other bots, which reacted in kind, and a feedback loop was created very quickly that led to wholesale selling. The humans, who were not in the decision loop, stepped in to stop the market and reassess the system architecture. (Kenton, 2019)

Figure 1-4: OODA Loop 2.0

Source: (Ryan, Security Challenges in Network-Centric Warfare, 2001)

The Vincennes tragedy of 1988 was a result of an automated system on a warship mistaking an Iranian airliner for an incoming attack: the warship’s systems automatically launched what was thought to be a defensive strike on the airliner. Hundreds of people died. (Halloran, 1988) The relationship between Iran and the US continues to be haunted by this very deadly mistake. (Gambrell, 2020)

These two cautionary tales have the side effect of pointing out that any system using the OODA 2.0 approach is vulnerable to two attacks: the incitement of positive feedback loops, which may trigger undesirable decision states, and the enticement of data suggesting imminent danger, which may also trigger undesirable decision states. But these are not all of the opportunities that might be taken advantage of by a clever C-UAS planner. Using the OODA Loop analysis framework, both 1.0 and 2.0, can assist a planner in identifying many such opportunities to subvert, deny, or disrupt UAS missions by focusing on the information systems that enable the UAS operations.
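The first of these vulnerabilities, a positive feedback loop among automated actors, can be illustrated with a toy simulation. It is not a model of the 1987 market or of any real trading system: each hypothetical bot sells once when the cumulative price drop reaches its threshold, and each sale is assumed to push the price down by a fixed amount.

```python
def simulate_cascade(thresholds_pct, initial_shock_pct, impact_pct_per_sale):
    """Return the cumulative price drop (percent) after each round of reactions.

    thresholds_pct: drop at which each bot sells (each bot sells at most once).
    initial_shock_pct: the external drop that starts the process.
    impact_pct_per_sale: assumed extra drop caused by every sale.
    """
    drop = initial_shock_pct
    sold = [False] * len(thresholds_pct)
    history = [drop]
    while True:
        triggered = [i for i, threshold in enumerate(thresholds_pct)
                     if not sold[i] and drop >= threshold]
        if not triggered:
            break  # no bot reacts, so the loop dies out
        for i in triggered:
            sold[i] = True
        drop += impact_pct_per_sale * len(triggered)
        history.append(drop)
    return history

bots = [1.0, 2.0, 3.0, 4.0, 5.0]           # hypothetical sell thresholds
small_shock = simulate_cascade(bots, 0.5, 1.0)
large_shock = simulate_cascade(bots, 1.0, 1.0)
```

In this sketch a shock just below the lowest threshold goes nowhere, while a slightly larger one triggers every bot in turn. An attacker who can nudge the input past the first trigger point gets the rest of the cascade for free.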

Conceptualizing the Information Systems in UASs

The UAS is a “box” propelled through the air, controlled through remote and onboard means, focused on conducting a mission. The mission can vary both in terms of geospatial coverage and in terms of active or passive interaction with the target. There are several truisms.

- 1) At the beginning of a mission and until some certain point (which may be quite soon after launch), there are usually active communications between the UAS and the ground control station. This time may be a short period of time, such as 2 minutes or less, or it may be for the entirety of the mission.

- 2) The UAS may have some capability to detect and avoid objects, so as to avoid mid-air collisions. This capability may be extremely rudimentary, or it may be quite sophisticated.

- 3) The UAS has a propulsion system that provides adequate power to move in the manner it is intended to move. Control of this propulsion system may be through artificial intelligence, as in the case of the Alphabet Project Loon (Loon LLC, 2020), or it may be through remote pilotage.

- 4) The UAS may have some capability to navigate autonomously or semi-autonomously. In relatively simple systems, like balloons, this may involve means to change altitude. In more complex guided systems, this means that it may be able to simply fly to an emergency landing field when certain circumstances arise. In other systems, this means that there is an onboard computer system dedicated to navigation that controls the flight of the system when released from active external control (whether ground or air based) and continues that control until commanded to return to base or resume responding to external control.

- 5) The UAS may have some capability to sense its surroundings. This may be rudimentary radar sensing, it may be optical sensing, or it may be multispectral sensing. The interpretation of the sensed data may be computed on board, either partially or completely, or may be computed off-board, perhaps with derived data returned to the system for action.

- 6) The UAS may have some capability for action, depending on the mission. This may include deploying decoys, munitions, or taking evasive action. The capability for action may be initiated remotely or may be autonomous, in which case a decision support system must be onboard.

These capabilities require computational systems and communications. And all of these may be targets for C-UAS activities. So, let’s take a look at the information systems in a conceptual UAS.

Internal

A UAS can’t fly (very far) if it doesn’t have internal systems to parse received instructions, make decisions based on sensed data, and control its onboard systems. The internal systems can be thought of as the internal nervous system of a UAS. Sensed data is collected and may possibly undergo some preprocessing, prior to being transferred to a decision support system, a suite of AI support elements, or external communications for relay to other UASs and/or command and control elements, such as an airborne control system or a ground control system. The internal systems interpret and instruct navigational control, mission execution, and propulsion control. When emergency situations occur, the internal systems execute preprogrammed options, which could include autonomously navigating to safe zones or self-destructing. The internal systems also monitor the health and welfare of the UAS according to the instrumentation included onboard. This may include fuel level monitoring, damage assessment, and interference detection. According to design, the internal systems may relay information continuously, on schedule, or in emergencies.
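The health monitoring and relay behavior described above might be sketched as follows. The status fields, the 15 percent fuel threshold, the 60-second interval, and the rule that alerts are relayed under every policy are all assumptions made for illustration.

```python
from enum import Enum

class RelayPolicy(Enum):
    CONTINUOUS = "continuous"
    SCHEDULED = "scheduled"
    EMERGENCY_ONLY = "emergency_only"

def health_alerts(status, min_fuel_pct=15.0):
    """Check onboard instrumentation and list any conditions needing attention."""
    alerts = []
    if status["fuel_pct"] < min_fuel_pct:
        alerts.append("low_fuel")
    if status["damage_detected"]:
        alerts.append("damage")
    if status["interference_detected"]:
        alerts.append("interference")
    return alerts

def should_relay(policy, alerts, seconds_since_last, interval_s=60.0):
    """Decide whether to transmit health data now under the given design policy."""
    if alerts:
        return True  # assumption: emergencies are relayed under every policy
    if policy is RelayPolicy.CONTINUOUS:
        return True
    if policy is RelayPolicy.SCHEDULED:
        return seconds_since_last >= interval_s
    return False  # EMERGENCY_ONLY with nothing to report

status = {"fuel_pct": 10.0, "damage_detected": False, "interference_detected": True}
alerts = health_alerts(status)
```

The choice of policy is a design tradeoff: more frequent relays give controllers a better picture but also mean more transmissions from the UAS.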

Any successful attack on internal systems could affect mission execution. Internal systems could potentially be attacked in many different manners, many of which will be discussed in the following chapters. But for the time being, consider these two obvious options:

- Electronic beam attack, where the strength of the focused energy disrupts or disables the electronic components of the internal systems. For example, a powerful beam may overwhelm delicate circuits, rendering them inoperable.

- Malicious software (malware) injection using channels of communication to the UAS, or activation where the malware has been included in components of the UAS before launch and triggered by operational parameters.

In order to a priori protect against such activities, a UAS designer would need to consider the potential for these types of attacks and design in protections that mitigate the possibilities of such attacks being successful. For instance, the design architecture could include using hardened chips that are resistant to an electronic beam attack or incorporating a Faraday Cage into the design of the housing system to protect vulnerable electronics.

Boundary systems

Boundary systems are those systems that exist on the boundary of the UAS. These include any sensors, such as air pressure, altitude, navigation aids, and mission specific sensors, as well as external communications elements, such as antennae. These are elements that interface between the external conditions in which the UAS is operating and the internal systems.

A successful attack on boundary systems can subvert the entire UAS mission. Designing protections for these systems is tricky, though, since by definition they need to be on the boundary of the physical system in order to operate. Because some missions may be dependent on ground-based data processing backend systems, the compromise of data transfer systems may result in a mission abort. Similarly, if external sensors are compromised, the ability for a UAS to operate safely could be undermined.

Examples of boundary systems include:

- Passive sensors, which receive data without stimulating the environment. These include cameras and navigation aids.

- Active sensors, which stimulate the environment in order to collect data. These include radar and lidar systems.

- Communications system components, such as receivers and transmitters. These include data communications systems and automated identification transponders.

External

External information systems are those that are wholly or partially contained in one or more systems external to the UAS. These may include data servers, control systems, mission execution support systems, or backend processors. Because these elements are external to the UAS, there are two points of vulnerability: the external system itself and the communications pathway between the external system and the UAS.

External systems may include:

- Active mission control, for part or all of a mission. The external elements may include systems tracking many UAS missions as well as navigation assistance.

- Data processing systems to support big data analysis, characterization, and integration.

- Data processing systems to support sensor data processing, interpretation, and application.

The potential for attack on, and defense of, external systems depends on the specific mission and uses.

How Complex Information Technologies Are Used in UAS Operations

Decision Support Systems

A decision support system (DSS) is an information technology system that supports the making of decisions concerning mission operations. The DSS requires a knowledge base of facts and rules relevant to the mission. In support of mission planning, a DSS may use models or analytic methods to review and evaluate alternatives. During the mission a DSS may assist the controllers with decisions concerning options for mission execution, using the knowledge base together with sensor data from the UAS and perhaps other current intelligence.

Expert Systems

Expert systems are information technology systems that emulate decision-making by human experts. In a UAS, such systems can make decisions even when communications with mission controllers are not available. An on-board expert system requires access to an appropriate knowledge base of facts about the mission and rules that apply to the mission under various contingencies. The system must have an inference engine capable of applying the rules to facts about the status of on-board systems and sensor data, as well as mission plans and rules, to make decisions regarding continuing operations. For example, if communications with mission controllers are lost, the expert system may take control and direct the UAS to a contingency holding area or landing field.
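The combination of a knowledge base and an inference engine can be sketched with a very small forward-chaining loop. The facts and rules below are hypothetical stand-ins for a mission knowledge base; real expert systems are far larger and typically handle conflicts and priorities among rules.

```python
# Knowledge base: each rule is (name, facts that must all hold, fact to conclude).
# The rule set is a hypothetical example of lost-link contingency handling.
RULES = [
    ("lost-link", {"comms_lost"}, "autonomous_mode"),
    ("divert", {"autonomous_mode", "fuel_low"}, "go_to_landing_field"),
    ("hold", {"autonomous_mode", "fuel_ok"}, "go_to_holding_area"),
]

def infer(initial_facts, rules=RULES):
    """Inference engine: apply rules to known facts until nothing new follows."""
    facts = set(initial_facts)
    changed = True
    while changed:
        changed = False
        for _name, conditions, conclusion in rules:
            if conditions <= facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts

# Facts would come from on-board status and sensor data.
conclusions = infer({"comms_lost", "fuel_ok"})
```

With communications lost and fuel adequate, the engine first concludes that the UAS is on its own and then that it should proceed to the holding area. With no triggering facts, it concludes nothing and the mission continues unchanged.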

AI

What is “artificial intelligence” or AI? This is a subject of much debate, even today. The various definitions that have been offered include a full replication of generalized intelligence (as defined by sensing and reacting to internal and external stimuli of both expected and unexpected nature), an ability to mimic human behavior, an ability to execute specific complex tasks (such as sensory aspects of biological life, including smell, hearing, vision, and touch), and an ability to detect patterns in complex data from multiple sources in order to make correct decisions (such as identifying a terrorist in a crowd of people). These are just a few of the definitions that have been offered, but they give a sense of the breadth of what AI can contribute.

The types of AI are variously referred to as belonging to “strong” or “weak” classes of AI. Strong AI implementations are, as one might imagine, more towards the fully generalized and autonomous types of intelligences. A classic test of a strong AI system is the Turing test, in which an AI is tested as a black box to see if a human can figure out if the system is an actual person or a machine. There are other, more nuanced, tests as well, but this gives you the sense of strong AI. (Huang, 2006) Weak AI is not, in fact, weak, but simply limited by design. Artificial vision, for example, can be considered weak AI. Advanced decision support systems (DSSs) can also be considered weak AI (James, 2019).

Why devote some time to AI in a C-UAS book? Advanced information technology, including all forms of AI, is very important to both UAS and C-UAS operations. Consider: humans are bad at several activities that are critical to UAS operations. Augmenting or replacing humans as decision makers, actuators, or monitors of elements of UAS missions is an important application of technology.

One of the things in which humans have limited capability is multi-tasking: humans have severe limitations in their ability to do more than one thing at a time. Even people who think they are good at multi-tasking are demonstrably not so when tested. (Miller, 2017) This limitation means that an operator, when trying to keep track of many UAS operations and support activities, is very likely to either miss or delay reaction to a problem. The use of specialized AI frees up people to focus on one thing at a time.

Another problem with humans is that they get bored. When bored, their attention wanders, they daydream, and they zone out. Maybe even fall asleep. Also, when they get bored, they make mistakes.

Further, humans are slow to react. Very slow, compared to automation. What counts as fast for a human is a few minutes. Very fast is a few seconds. For automation, fast can be a few milliseconds and very fast can be a few nanoseconds. In the OODA Loop 2.0 (Nichols, Ryan, & Ryan, 2000, pp. 468-489) world, speed matters, a lot. Harnessing the power of automation can mean the difference between success and failure. The speed issue comes into play in several different areas of UAS and C-UAS operations. First, UASs can fly very fast. Hypothetically, a UAS flying at 60 miles per hour can cover 1 mile in 1 minute. In 15 seconds, that UAS can fly 440 yards (1320 feet).

To put that distance in perspective, consider this analysis of human reaction times during an ordinary situation: driving a car.

Suppose a person is driving a car at 55 mph (80.67 feet/sec) during the day on a dry, level road. He sees a pedestrian and applies the brakes. What is the shortest stopping distance that can reasonably be expected? Total stopping distance consists of three components:

Reaction Distance. Suppose the reaction time is 1.5 seconds. This means that the car will travel 1.5 × 80.67, or about 121.0 feet, before the brakes are even applied.

Brake Engagement Distance. Most reaction time studies consider the response completed at the moment the foot touches the brake pedal. However, brakes do not engage instantaneously. There is an additional time required for the pedal to depress and for the brakes to engage. This is variable and difficult to summarize in a single number because it depends on urgency and braking style. In an emergency, a reasonable estimate is 0.3 second, adding another 24.2 feet.

Physical Force Distance. Once the brakes engage, the stopping distance is determined by physical forces (D = S²/(30 × f), where S is the speed in mph and f is the coefficient of friction between the tires and the road) as 134.4 feet.

Total Stopping Distance = 121.0 ft + 24.2 ft + 134.4 ft = 279.6 ft (Green, 2013)

Simple arithmetic tells us that humans cannot keep up with detection and closure rates.
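The arithmetic can be checked with a short script. The friction coefficient of 0.75 is an assumption, chosen because it reproduces the braking distance quoted above; the reaction and brake engagement times are the ones used in the example. Results may differ from hand-rounded figures by about a tenth of a foot, since the script does not round intermediate values.

```python
FEET_PER_MILE = 5280
SECONDS_PER_HOUR = 3600

def mph_to_fps(mph):
    """Convert miles per hour to feet per second."""
    return mph * FEET_PER_MILE / SECONDS_PER_HOUR

def distance_ft(mph, seconds):
    """Distance covered at constant speed, in feet."""
    return mph_to_fps(mph) * seconds

def stopping_distance_ft(mph, reaction_s=1.5, engage_s=0.3, friction=0.75):
    """Return (reaction, engagement, braking, total) distances in feet.

    friction=0.75 is an assumed coefficient, not a value stated in the text.
    """
    fps = mph_to_fps(mph)
    reaction = fps * reaction_s           # travelled before the foot moves
    engagement = fps * engage_s           # travelled while the brakes engage
    braking = mph ** 2 / (30 * friction)  # D = S^2 / (30 * f), S in mph
    return reaction, engagement, braking, reaction + engagement + braking

uas_15s_ft = distance_ft(60, 15)          # UAS at 60 mph for 15 seconds
reaction, engagement, braking, total = stopping_distance_ft(55)
print(f"UAS in 15 s: {uas_15s_ft:.0f} ft; car stopping distance: {total:.1f} ft")
```

On these numbers the UAS covers several times the car's total stopping distance in 15 seconds, and the 1.5-second reaction alone accounts for over 40 percent of the stopping distance.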

Suffice it to say, automation is needed to augment human actions. Sometimes it replaces the human entirely while other times it simply augments human capabilities. But it is incredibly valuable in all circumstances.

So, let’s get back to the types of AI that can be used and what it means in terms of footprint, infrastructure, backend support, and vulnerabilities.

Strong AI, including full replication of generalized intelligence, is still a long way away from existing in a small form factor. While great strides have been made in creating intelligent-like capabilities, some scarily intelligent, the resultant systems are dependent on very large banks of backend processors for computational support so that the user-facing systems can be smaller. (Tozzi, 2019)

Replicating intelligence is actually pretty tricky. Ignoring the methods by which data is collected and transferred from outside a system to inside the system (analogous to human eyes perceiving objects and transmitting the information to the brain to be considered, classified, and integrated into the human’s thought process), there are really interesting issues associated with developing a system capable of taking data and making sense of it. Part of the challenge is simply classifying the data as belonging to one type or another: is this a bird or a bear? Is it a duck or a goose? Is it a Canada Goose or an Arctic Swan? And so on, with increasing detail and specificity.

Another part of the challenge is distinguishing truth from falsehood: is this data input truly representative of reality or is it a falsehood? Falsehoods can come from a variety of sources, including sincerely held beliefs. For AI systems that are collecting textual postings, such as from sources like books, tweets, and newspapers, distinguishing truth can be extremely tricky. This is one of the challenges that Watson, the IBM system, has had to confront in order to execute such things as participating in Jeopardy (Gray, 2017).

All this leads to the issue of training data. AI classifiers are developed, or trained, using data sets. By inserting false or misleading data into the training sets, it is possible to cause the AI to make mistakes when deployed in real world situations (Moisejevs, 2019) (Bursztein, 2018).
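A toy example shows how little it can take. The classifier below is a deliberately simple nearest-centroid model over a single made-up numeric feature; it is not one of the methods described in the cited sources. The attacker's only action is to add drone-like samples labeled "bird" to the training set.

```python
def train_centroids(samples):
    """Train by averaging: samples is a list of (feature, label) pairs."""
    sums, counts = {}, {}
    for x, label in samples:
        sums[label] = sums.get(label, 0.0) + x
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}

def classify(x, centroids):
    """Assign x to the label whose centroid is nearest."""
    return min(centroids, key=lambda label: abs(x - centroids[label]))

# Hypothetical one-dimensional feature: small values are birds, large are drones.
clean = [(1.0, "bird"), (2.0, "bird"), (3.0, "bird"),
         (9.0, "drone"), (10.0, "drone"), (11.0, "drone")]

# Poisoning: drone-like samples inserted with the wrong label.
poison = [(10.0, "bird")] * 6

clean_model = train_centroids(clean)
poisoned_model = train_centroids(clean + poison)

before = classify(8.0, clean_model)     # judged with honest training data
after = classify(8.0, poisoned_model)   # judged after the training set was poisoned
```

With clean data an object scoring 8.0 is classified as a drone. After poisoning, the "bird" centroid has been dragged toward the drone cluster and the same object is classified as a bird, even though the deployed code has not changed at all.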

A less robust AI, with the ability to mimic human behavior or to execute specific complex tasks (such as sensory aspects of biological life, including smell, hearing, vision, and touch), can fit into a smaller form factor, depending on the function. One of the things most home users don’t realize about voice recognition and interpretation systems, such as Siri, is that the voice interpretation and characterization does not occur on the handheld phone or the small speaker system. Instead, the data is collected and transmitted to backend processors, where the actual data crunching occurs (Goel, 2018). This distributed processing is necessary in order to bring the amount of computing power to bear that is needed to interpret all the various types of voices, circumstances, and commands, and even then, mistakes are made. There are examples of voice recognition systems that are fully functional on standalone home computer systems, such as Dragon Naturally Speaking (Nuance, 2020), but these work only because the first thing the user needs to do is to train the software to interpret the user’s voice, including cadence, accent, and structure. Every year, these systems are getting more capable but there is still a fair amount of processing needed when more than one unique user is interfacing with the AI.

Systems that are trained to sense and interpret environmental elements may be limited by the technology used for sensing (Vincent, 2017). The examples of automated vehicles hitting pedestrians illustrate some of this challenge (Wakabayashi, 2018) (McCausland, 2019). It becomes even more problematic when complicated scenarios are envisioned, such as detecting patterns in complex data from multiple sources in order to make correct decisions (for example, identifying a terrorist in a crowd of people) (Tarm, 2010).

It goes without saying that the increasing miniaturization of electronic components, the incorporation of alternatives to electronics, such as optics, and the development of special purpose processors have revolutionized, and continue to revolutionize, the ability to squeeze capabilities into a small form factor. Size reduction has a lot of advantages: it can mean lower power requirements, faster execution of computational cycles, and less heat generation. It can also have some inherent disadvantages, including less robust physical components. Protecting advanced microelectronics from directed energy attacks, for example, can require significantly increased shielding, which can in turn affect overall energy requirements for flight operations. In mission situations where energy efficiency and UAS maneuverability are important, tradeoffs need to be considered in overall system design. However, great strides have been made in both the development of specialized processors that execute AI-like capabilities and the integration of those processors on common chip sets. Integration of multiple special chips in a system can provide a marked improvement in on-board intelligence (Morgan, 2019).

The integration of advanced automation, including AI, into UAS architectures can be thought of as having several faces. First, decision support systems with pre-programmed rules of engagement can be embedded onboard the individual systems. Next, specialized AI processors can be included as well. Naturally, more complex AI and decision support solutions can be implemented that rely on backend (either terrestrial or airborne) processing for the heavy computational lifting. Finally, all of these can be integrated together.

The interesting thing about automated decision support systems is that all possible scenarios must have been considered by the human programmers who created the system. The scenario analysis allows the humans to catalog the potential decisions that must be made. In a trivial example, consider an automated water tap. There is a sensor that detects when something matching the profile of human hands is placed under the tap. The system is programmed to decide in those circumstances: if the profile of the detected object matches the profile loaded into the system, activate a switch that allows water to flow. When the sensor loses detection of that object, activate a switch that stops the water from flowing. All of that needs to be specifically programmed into the system: no decisions are possible without a priori structural design.
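
The water tap logic can be written out in a few lines. This is a minimal sketch, not a description of any real product: the "profile" is reduced to a hypothetical range of object widths, and the function names are invented for illustration.

```python
from typing import Optional

# Hypothetical stored profile: object widths (cm) accepted as "human hands".
HAND_WIDTH_RANGE_CM = (5.0, 25.0)

def matches_hand_profile(width_cm: Optional[float]) -> bool:
    """True only when a detected object falls inside the stored profile."""
    if width_cm is None:  # the sensor detects nothing
        return False
    low, high = HAND_WIDTH_RANGE_CM
    return low <= width_cm <= high

def valve_open(width_cm: Optional[float]) -> bool:
    """Water flows while a matching object is detected and stops otherwise."""
    return matches_hand_profile(width_cm)

# Simulated sensor readings: nothing, hands, hands, a large bucket, nothing.
readings = [None, 12.0, 12.5, 40.0, None]
states = [valve_open(r) for r in readings]
print(states)
```

The bucket in the fourth reading gets no water, not because the system judged it unworthy, but because no one wrote a rule that covers it.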

The same type of a priori structural design is needed for all autonomous decision systems, including and especially complex systems in complicated situations. For example, in an autonomous car, a scenario to consider might be that an old lady and a child run into the street in front of the car so suddenly that the car must (because of physics) hit one or the other. A decision must be made about which one to hit. In a strong AI system, the internal intelligence would process the data and make the decision based on internal logic. If the processing is sufficiently fast, the car would then execute the system’s decision, taking out either the old lady or the child. In weak AI or a conventional decision support system, the system would simply execute the pre-programmed decisions embedded in the system. These decisions might take something like the following structures, depending on the programming team’s considerations:

- if two objects block the path with insufficient time to avoid both,
    - hit the one to the right

The decision, of course, could have just as easily been:

    - hit the one to the left

OR

    - navigate between the objects

Alternatively, a more complex system might have the following type of logic path:

- if two objects block the path with insufficient time to avoid both,
    - characterize the identity of the objects
        - are both objects members of a protected class?
            - if yes, hit the one to the right
            - if no, then: is one a member of a protected class?
                - if yes, hit the other object
                - if no, hit the one on the right

The point of this thought exercise is to illustrate that “independent thinking” by a machine is dependent on thinking done by programmers in designing the system.
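
The two logic paths above translate almost directly into code. The following is a sketch only: the function names and the idea of passing a Boolean "protected class" flag for each object are assumptions made for illustration, and a real system would first have to solve the much harder problem of characterizing the objects.

```python
def simple_rule() -> str:
    """Simplest pre-programmed choice: always hit the object on the right."""
    return "right"

def complex_rule(left_protected: bool, right_protected: bool) -> str:
    """Return which object ("left" or "right") the vehicle will hit."""
    if left_protected and right_protected:
        return "right"   # both protected: fall back to the default
    if left_protected:
        return "right"   # exactly one protected: hit the other object
    if right_protected:
        return "left"
    return "right"       # neither protected: default again

# Enumerate every case the programmers had to anticipate.
for left in (True, False):
    for right in (True, False):
        print(f"left protected={left}, right protected={right} -> "
              f"hit {complex_rule(left, right)}")
```

Every outcome printed by the loop was chosen in advance by whoever wrote the function; the machine merely looks it up.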

Implications for C-UAS Operations

A UAS may have decision processes in place that impel the UAS to avoid hitting members of its swarm, deploy electronic countermeasures when certain threats are detected, or increase power when the rate of altitude change exceeds certain thresholds. Each of these decision structures is necessary to support the semi- or fully autonomous aspects of the mission. Each of these decisions can also provide an exploitable aspect for C-UAS activities. Understanding the decision structure gives the C-UAS mission planner the opportunity to create situations that trigger specific decisions leading to desirable outcomes, such as diverting the flight path of a swarm.
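
To make the idea of triggering a decision concrete, the three example rules can be sketched as a small rule set. All thresholds, field names, and action names below are hypothetical, and the altitude rule is modeled as a descent rate purely for simplicity.

```python
# Hypothetical thresholds; real values would be specific to the system.
MIN_SEPARATION_M = 3.0
MAX_DESCENT_RATE_MPS = 2.0

def decide(state: dict) -> list:
    """Return the pre-programmed actions triggered by the sensed state."""
    actions = []
    if state["nearest_neighbor_m"] < MIN_SEPARATION_M:
        actions.append("steer_away_from_neighbor")
    if state["threat_detected"]:
        actions.append("deploy_countermeasures")
    if state["descent_rate_mps"] > MAX_DESCENT_RATE_MPS:
        actions.append("increase_power")
    return actions

# Undisturbed flight: no rule fires.
calm = {"nearest_neighbor_m": 8.0, "threat_detected": False,
        "descent_rate_mps": 0.2}

# The same UAS after something is made to appear close alongside it.
crowded = dict(calm, nearest_neighbor_m=2.0)

print(decide(calm))
print(decide(crowded))
```

A planner who knows the separation rule does not need to touch the UAS at all; presenting the right stimulus is enough to make the UAS steer itself.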

Similarly, if the UAS is dependent on backend processing to support decision processes, then denying the link between the UAS and the backend processes will have an obvious effect. A competent architect will have programmed in failsafe decisions in the event of a lost link; forcing this outcome may or may not be desirable. Spoofing the link and replacing the authentic backend processing with alternative processing may be a more desirable outcome, if it can be accomplished (probably very difficult, if possible at all). A middle-level attack, in which the link is degraded to the point that the decision cycle slows down significantly, may be the most desirable outcome, as it gives the C-UAS operator additional time to pursue the C-UAS mission objectives.
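
The difference between denying and degrading the link can be sketched with simple arithmetic. Everything in the example below is an assumption made for illustration: the lost-link timeout, the processing times, and the model of a decision cycle as onboard time plus link round trip plus backend time.

```python
from typing import Optional

LOST_LINK_TIMEOUT_S = 2.0  # hypothetical failsafe threshold

def link_state(round_trip_s: Optional[float]) -> str:
    """Classify the link as seen by the UAS; None means no reply at all."""
    if round_trip_s is None or round_trip_s >= LOST_LINK_TIMEOUT_S:
        return "lost: execute failsafe"
    return "up: wait for backend decision"

def decision_cycle_s(round_trip_s: float,
                     onboard_s: float = 0.1,
                     backend_s: float = 0.2) -> float:
    """Total time for one backend-supported decision."""
    return onboard_s + round_trip_s + backend_s

for label, rtt in [("nominal", 0.1), ("degraded", 1.5), ("denied", None)]:
    state = link_state(rtt)
    if state.startswith("up"):
        print(f"{label}: {state}, cycle = {decision_cycle_s(rtt):.1f} s")
    else:
        print(f"{label}: {state}")
```

With these invented numbers, a degraded link that stays just under the timeout stretches each decision cycle from roughly 0.4 seconds to 1.8 seconds without ever triggering the failsafe behavior.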

Bottom line: understanding the level and complexity of onboard intelligence is an important part of C-UAS planning.

How Sensing is Used to Support UAS Operations

Other elements of the UAS information processing architecture that are potential targets for C-UAS activities include the sensors. A UAS is blind and deaf without sensors interacting with the environment and providing data about the environment to the control systems. Sensors include thermometers, barometers, visual spectrum cameras, multispectral sensors, wind speed sensors, hygrometers, and as many other types of sensors as can be imagined. Some of these may provide data to external systems, such as navigation aids or intelligence data collection systems, while others may provide data solely for use by the UAS.

Each of these sensors should be considered a potential target for C-UAS activities. Confusing sensors that support navigation may cause a UAS to failsafe into an automated return-to-base profile. Denying the intelligence data gathering sensors may not do much to the flight operations of the UAS but would degrade or deny the effectiveness of the mission. Finally, attacking the sensor systems through electronic means to physically degrade or destroy the actual sensing apparatus provides a more enduring effect that the adversary would have a harder time recovering from.

In summary, there are more options for C-UAS than simply shooting the systems out of the sky, although that is always an option.

Summary

UAS operations are complex symphonies of activities by many operators, both automated and human. Understanding and analyzing interfaces can provide the C-UAS mission planner with many opportunities for vulnerability exploitation. In reading through the rest of this book, think about how each element fits into a larger analysis.

Questions for Reflection

- Diagram the likely coordination communications network for a UAS swarm. Identify potential points of compromise that would degrade the swarm activity.

- Describe the probable effect of jamming the ground-to-UAS control link.

- Explain the contribution that information technology makes to autonomous UAS operations.

- Your side is in a tense geopolitical conflict where both sides are using UASs to surveil the situation. There is pressure to avoid escalating the conflict by engaging in overtly hostile actions. However, it is necessary to move some military forces in order to be better positioned to react in case the situation degrades. Movement secrecy is desired, which means that some means must be found to deny the adversary’s surveillance capabilities while the move is taking place. The known capabilities of their UAS surveillance systems include radars and visual spectrum cameras with video capabilities. The data is collected onboard the UAS and uploaded to a high-altitude relay system, which sends it through other relays to the adversary intelligence data processing center. Your boss has asked you to come up with a C-UAS plan that is non-aggressive, but which provides cover for the force movement. What options can you provide for C-UAS activities in this scenario?

- A spy has revealed that The Flaming Arrow terrorist group is planning on using UASs in a swarm formation, designed to appear as a flying arrow, to deliver many small explosive devices to a key energy generation node. This node lies within a densely populated area that spreads out over a 10-mile radius. There is a park one mile away from the targeted node. Once the UAS swarm is launched and released into autonomous mode, the explosives will be armed, with detonation occurring upon collision with some other object. The UASs to be used will be small, capable of flying 40 miles per hour for a distance of 5 miles while under load. There will be approximately 50 UASs in the swarm, flying approximately 50 feet above the ground. Each UAS has a basic decision support system onboard that allows fully autonomous mission execution once launched. Navigation is accomplished through image-based terrain feature recognition, where the visual data is collected through cameras and compared to onboard maps. The lead UAS establishes the route, but each UAS is capable of navigating independently. The spy has revealed the structure of the decision support system processes, which includes the following rule: if a swarm member to the right moves within 10 feet, move to the left until 10 feet of separation is restored. Your challenge is to design a C-UAS plan that causes the UAS swarm to divert to the park rather than hit the energy node. Keep in mind that you don’t know where the launch point is, but you know it has to be somewhere within the flight parameter limitations. Also, keep in mind that destruction of any UAS will cause its bomb to detonate. Your goal is to minimize the damage and keep the bombs away from both the energy node and the populated areas.

References

AirForceTechnology.com. (2019, June 19). The 10 longest range unmanned aerial vehicles (UAVs). Retrieved January 7, 2020, from AirForceTechnology.com: https://www.airforce-technology.com/features/featurethe-top-10-longest-range-unmanned-aerial-vehicles-uavs/

Bursztein, E. (2018, May 1). Attacks against machine learning — an overview. Retrieved January 29, 2020, from Blog: AI: https://elie.net/blog/ai/attacks-against-machine-learning-an-overview/

Coram, R. (2010). Boyd: The Fighter Pilot Who Changed the Art of War. New York: Hachette Book Group.

Fisher, J. (2020, January 27). The Best Drones for 2020. Retrieved January 29, 2020, from PC Magazine: https://www.pcmag.com/picks/the-best-drones

Forsling, C. (2018, July 30). I’m So Sick of the OODA Loop. Retrieved November 6, 2019, from Task and Purpose: https://taskandpurpose.com/case-against-ooda-loop

Gambrell, J. (2020, January 11). Crash may be grim echo of US downing of Iran flight in 1988. Minnesota Star Tribune, p. 1.

Goel, A. (2018, February 2). How Does Siri Work? The Science Behind Siri. Retrieved January 29, 2020, from Magoosh Data Science Blog: https://magoosh.com/data-science/siri-work-science-behind-siri/

Gray, R. (2017, March 1). Lies, propaganda and fake news: A challenge for our age. Retrieved January 29, 2020, from BBC Future: https://www.bbc.com/future/article/20170301-lies-propaganda-and-fake-news-a-grand-challenge-of-our-age

Green, M. (2013, January 1). Driver Reaction Time. Retrieved January 29, 2020, from Visual Expert: https://www.visualexpert.com/Resources/reactiontime.html

Halloran, R. (1988, July 4). The Downing of Flight 655. New York Times, p. 1.

Huang, A. (2006, January 1). A Holistic Approach to AI. Retrieved January 29, 2020, from Ari Huang Research: https://www.ocf.berkeley.edu/~arihuang/academic/research/strongai3.html

James, R. (2019, October 30). Understanding Strong vs. Weak AI in a New Light. Retrieved January 4, 2020, from Becoming Human AI: https://becominghuman.ai/understanding-strong-vs-weak-ai-in-a-new-light-890e4b09da02

Kenton, W. (2019, February 12). Stock Market Crash of 1987. Retrieved January 29, 2020, from Investopedia: https://www.investopedia.com/terms/s/stock-market-crash-1987.asp

Loon LLC. (2020, January 1). Loon.com. Retrieved January 29, 2020, from Loon.com: https://loon.com

McCausland, P. (2019, November 9). Self-driving Uber car that hit and killed woman did not recognize that pedestrians jaywalk. Retrieved January 29, 2020, from NBC News: https://www.nbcnews.com/tech/tech-news/self-driving-uber-car-hit-killed-woman-did-not-recognize-n1079281

Miller, E. K. (2017, April 11). Multitasking: Why Your Brain Can’t Do It and What You Should Do About It. Retrieved January 4, 2020, from Miller Files: https://radius.mit.edu/sites/default/files/images/Miller%20Multitasking%202017.pdf

Moisejevs, I. (2019, July 14). Poisoning attacks on Machine Learning. Retrieved January 29, 2020, from Towards Data Science: https://towardsdatascience.com/poisoning-attacks-on-machine-learning-1ff247c254db

Morgan, T. P. (2019, November 13). Intel Throws Down AI Gauntlet with Neural Network Chips. Retrieved January 29, 2020, from The Next Platform: https://www.nextplatform.com/2019/11/13/intel-throws-down-ai-gauntlet-with-neural-network-chips/

Nichols, R. K., Ryan, J. J., & Ryan, D. J. (2000). Defending Your Digital Assets Against Hackers, Crackers, Spies, and Thieves. New York: McGraw Hill.

Nuance. (2020, January 1). Dragon Speech Recognition Solutions. Retrieved January 29, 2020, from Nuance Products: https://www.nuance.com/dragon.html

Richards, C. (2012, March 21). Boyd’s OODA Loop: It’s Not What You Think. Retrieved July 27, 2019, from Fast Transients Files: https://fasttransients.files.wordpress.com/2012/03/boydsrealooda_loop.pdf

Ryan, J. J. (1997, September 80). Lecture Notes, EMSE 218/6540/6537. (J. J. Ryan, Performer) George Washington University, Washington, DC, USA.

Ryan, J. J. (2001, November 12). Security Challenges in Network-Centric Warfare. (J. J. Ryan, Performer) George Washington University, Washington, DC, USA.

Sampson, B. (2019, February 20). Stratospheric drone reaches new heights. Retrieved January 5, 2020, from Aerospace Testing International: https://www.aerospacetestinginternational.com/features/stratospheric-drone-reaches-new-heights-with-operation-beyond-visual-line-of-sight.html

Tarm, M. (2010, January 8). Mind-reading Systems Could Change Air Security. Retrieved March 1, 2011, from The Aurora Sentinel: http://www.aurorasentinel.com/news/national/article_c618daa2-06df-5391-8702-472af15e8b3e.html

Tozzi, C. (2019, October 16). Is Cloud AI a Fad? Retrieved January 29, 2020, from ITPro Today: https://www.itprotoday.com/cloud-computing/cloud-ai-fad-shortcomings-cloud-artificial-intelligence

Vincent, J. (2017, April 12). Magic AI: These Are the Optical Illusions That Trick, Fool, and Flummox Computers. Retrieved January 29, 2020, from The Verge: https://www.theverge.com/2017/4/12/15271874/ai-adversarial-images-fooling-attacks-artificial-intelligence

Wakabayashi, D. (2018, March 19). Self-Driving Uber Car Kills Pedestrian in Arizona, Where Robots Roam. Retrieved January 29, 2020, from New York Times: https://www.nytimes.com/2018/03/19/technology/uber-driverless-fatality.html

Wikipedia. (2019, December 29). Loon LLC. Retrieved January 14, 2020, from Wikipedia: https://en.wikipedia.org/wiki/Loon_LLC