Chapter 13 Automaton, AI, Law, Ethics, Crossing the Machine – Human Barrier [Lonstein]

“The development of full artificial intelligence could spell the end of the human race….It would take off on its own, and re-design itself at an ever-increasing rate. Humans, who are limited by slow biological evolution, couldn’t compete, and would be superseded.” Stephen Hawking (Cellan-Jones, 2014)

Student Learning Objectives

Professor Stephen Hawking gave the cautionary warning above almost six years ago. While no human can predict with specific accuracy what the future will hold, we can examine new technology and blend it with the social sciences and history to develop generalized ideas of its societal risks and rewards. Students should use their imagination, experience, and learning to enhance their understanding of how best to implement technology, predict intended and unintended consequences, and engage in their own risk-benefit analysis. This process is an essential precursor to the introduction of Artificial Intelligence into everyday life. This analysis will challenge the student to think critically and not merely accept at face value the statements of “experts,” manufacturers, or even celebrity or other endorsers of a particular technology.

Someone once said: “Any intelligent fool can make things bigger and more complex… It takes a touch of genius — and a lot of courage to move in the opposite direction.” The quote has been attributed in various publications to Albert Einstein, E.F. Schumacher, and Woody Guthrie; for our purposes, its source is less important than its message. Students should be bold and brave, speak truth based on their experience and education, and not shy away from being unpopular or different. Conformity for the sake of conformity does not honor students’ effort in acquiring knowledge. It makes them more lemming and less human. Students should strive to be the best humans they can be.

Once Completed, Students Should

- Understand that they all possess unique thinking and analysis tools based upon their imagination, learning, experience, and uniqueness. Those attributes are critical to bettering not only themselves but also mankind, whether by embracing new technology or by rejecting it based upon risk.

- Consider the risk of ubiquitously using automation and AI throughout many aspects of everyday life. Students must be aware of the potential risks of connected technology such as social media, where information that is simple to acquire and digest in seconds carries a risk of abuse, manipulation, or even degradation of human thought processes.

- Be aware that there are no simple answers to the widespread implementation of AI and automation. Beyond the changes to how we, as humans, do things, students should also consider how we might be changed as a result of this process, from mental and physical health to its impact upon future generations, the environment, public safety, and many other aspects of human existence.

Humans, Humanity and Humanoids

According to the Merriam-Webster Dictionary, a “Human” is defined as “a bipedal primate mammal (Homo sapiens), a person” (Merriam-Webster, 2020); “Humanity” is defined as “the quality or state of being human” (Merriam-Webster, 2020); and “Humanoids” are defined as “a humanoid being: a nonhuman creature or being with characteristics (such as the ability to walk upright) resembling those of a human” (Merriam-Webster, 2020). Finally, Merriam-Webster defines Artificial Intelligence as “1: a branch of computer science dealing with the simulation of intelligent behavior in computers and 2: the capability of a machine to imitate intelligent human behavior” (Merriam-Webster, 2020). [See Figure 13.1]

The question that will confront all of us in the very near future is what will differentiate the human and humanity (the one organic, the other more ethereal, and both capable of subjective judgment) from the humanoid, which is neither organic nor capable of subjective thought, only of objective decision making based upon its programming.

Figure 13.1 The Humanoid

Source: (Courtesy Forbes & Adobe Stock)

Are We Losing Our Minds?

Today there is no shortage of conflicting viewpoints on the law, ethics, and morality relating to autonomous technology and Artificial Intelligence. Moving beyond their current nascent state, these technologies will undoubtedly continue to become staples of everyday life. To focus our discussion on technology familiar to most if not all of us, we will examine the issues of AI and autonomous operation in social media. In 2017, Liz Stillwaggon Swan wrote an article in the IEEE publication “Technology and Society” entitled “Are Social Media Making Us Stupid?” Swan asks whether addiction to social media amongst our youth results in a rapid loss of proficiency in writing skills. Swan writes:

“Social media platforms force users to think and write in bit-like form, with acronyms substituting for sentences and emoticons substituting for the expression of feelings. We are learning–some of us more quickly than others–to adapt to a computer-dictated form of communication. Sherry Turkle (M.I.T.) has noted that a fluency with texting and tweeting is commonly correlated with a dearth of skills in face-to-face interactions. We’re noting, in addition, what social media addiction is doing to written communication: specifically, it’s eroding the traditional divide between speaking and writing.” (Stillwaggon Swan, 2017)

Our focus will be on the rapidly decreasing face-to-face interactions associated with increased social media usage. Social media is a simple technology, but Swan writes that it has also “broken time and space barriers” by allowing users to communicate with followers, and vice versa, instantly and 24/7 around the globe.[1] Why do AI and autonomous technology create a need to consider laws and ethics relating to their implementation in social media?

According to Courtney Seiter, the neurotransmitter dopamine (“the Pleasure Chemical”) and the hormone oxytocin (the “Cuddle Chemical”) play a significant role in the addictive use and near-blind acceptance of content on social media. According to Seiter, there is a strong relationship between these two behavior-affecting biological compounds and the online, and increasingly offline, actions of social media users. Seiter describes their roles in the psychology of social media as follows:

Dopamine

“Dopamine is stimulated by unpredictability, by small bits of information, and by reward cues—pretty much the exact conditions of social media. The pull of dopamine is so strong that studies have shown tweeting is harder for people to resist than cigarettes and alcohol.

Oxytocin

Then there’s oxytocin, sometimes referred to as “the cuddle chemical” because it’s released when you kiss or hug. Or … tweet. In 10 minutes of social media time, oxytocin levels can rise as much as 13%—a hormonal spike equivalent to some people on their wedding day. And all the goodwill that comes with oxytocin—lowered stress levels, feelings of love, trust, empathy, generosity—comes with social media, too. As a result, social media users have shown to be more trusting than the average Internet user. The typical Facebook user is 43% more likely than other Internet users to feel that most people can be trusted. So, between dopamine and oxytocin, social networking not only comes with a lot of great feelings, it’s also really hard to stop wanting more of it.” (Seiter, 2016)

Slot Machines in Your Hand & Head

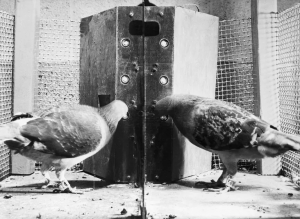

Creators of social media platforms like Facebook and Twitter, amongst many others, did not need to look far to learn how to drive maximum adoption of and daily dependence upon social media. I always enjoy the bells and whistles and lights when walking into a casino. Yet, after playing a bit, the usual sensation experienced is regret, foolishness, and, quite frankly, dirtiness. Much as in a casino, the noises, visuals, and other sensory stimulation a smartphone delivers 24 hours a day can lead to dependence and mistakes. B.F. Skinner, the famed Harvard psychologist, once said, “A pigeon can become a pathological gambler, just as a person can.” (Skinner, 1977) [See Figure 13.2]

Figure 13.2 Gambling Pigeons

Source: (Courtesy Bettmann Archive / Getty)

So, what do pigeons and gambling have to do with social media? “Just as substance addicts require increasingly strong hits to get high, compulsive gamblers pursue ever riskier ventures. Likewise, both drug addicts and problem gamblers endure symptoms of withdrawal when separated from the chemical or thrill they desire. And a few studies suggest that some people are especially vulnerable to both drug addiction and compulsive gambling because their reward circuitry is inherently underactive—which may partially explain why they seek big thrills in the first place.” (Scientific American, 2013)

The Psychology of Social Media

In 2004, Dr. John Suler wrote the groundbreaking paper entitled “The Online Disinhibition Effect.” In the paper, Dr. Suler examined the psychology behind our behavior online and how it varies from our behavior in the brick-and-mortar world. Dr. Suler wrote:

“Everyday users – on the Internet—as well as clinicians and researchers have noted how people say and do things in cyberspace that they wouldn’t ordinarily say and do in the face-to-face world. They loosen up, feel less restrained, and express themselves more openly. So pervasive is the phenomenon that a term has surfaced for it: the online disinhibition effect.” (Suler, 2004)

What are the psychological components of the Online Disinhibition Effect, and how do they drive our online behavior? [See Table 13.1]

| ONLINE BEHAVIOR: IS IT DIFFERENT? SIMPLE, VIRTUAL, ANONYMOUS |
| --- |
| • You Don’t Know Me (Dissociative anonymity): As you move around the internet, most of the people you encounter can’t easily tell who you are. When people have the opportunity to separate their actions from their real world and identity, they feel less vulnerable. They don’t have to own their behavior by acknowledging it within the full context of who they “really” are. |
| • You Can’t See Me (Invisibility): Even with everyone’s identity visible, the opportunity to be physically invisible amplifies the disinhibition effect. You don’t have to worry about how you look or sound when you say (type) something. You don’t have to worry about how others look or sound when you say something. |
| • See You Later (Asynchronicity): People don’t interact with each other in real time. Others may take minutes, hours, days, or even months to reply to something you say. Asynchronous communication is like “running away” after doing something unethical or illegal. |
| • It’s Just a Game (Dissociative imagination): People feel their online personae exist in a different space, separate and apart from the demands and responsibilities of the real world. Once they turn off the computer and return to their daily routine, they believe they can leave their online identity behind. Why should they be held responsible for what happens in an online world that has nothing to do with reality? |
| • It’s All In My Head (Solipsistic introjection): Absent cues combined with text communication can have an interesting effect on people. Sometimes they feel that their mind has merged with the mind of the online companion. Reading another person’s message might be experienced as a voice within one’s head, as if that person magically has been inserted or “introjected” into one’s psyche. Of course, we may not know what the other person’s voice actually sounds like, so in our head we assign a voice to that companion. In fact, consciously or unconsciously, we may even assign a visual image to what we think that person looks like and how that person behaves. The online companion now becomes a character within our intrapsychic world, a character that is shaped partly by how the person actually presents him or herself via text communication, but also by our expectations, wishes, and needs. Because the person may even remind us of other people we know, we fill in the image of that character with memories of those other acquaintances. |
| • We’re Equals (Minimizing authority): While online, a person’s status in the face-to-face world may not be known to others and it may not have as much impact as it does in the face-to-face world. If people can’t see you or your surroundings, they don’t know if you are the president of a major corporation sitting in your expensive office, or some “ordinary” person lounging around at home in front of the computer. |

Table 13.1 Online Disinhibition Effect

Source: (Courtesy John Suler)

Looking back on how Facebook was conjured up, Sean Parker, its founding president, said:

“The thought process that went into building these applications, Facebook being the first of them… was all about: ‘How do we consume as much of your time and conscious attention as possible?'” “And that means that we need to sort of give you a little dopamine hit every once in a while, because someone liked or commented on a photo or a post or whatever. And that’s going to get you to contribute more content, and that’s going to get you … more likes and comments.” “It’s a social-validation feedback loop … exactly the kind of thing that a hacker like myself would come up with because you’re exploiting a vulnerability in human psychology. “The inventors, creators — it’s me, it’s Mark [Zuckerberg], it’s Kevin Systrom on Instagram, it’s all of these people — understood this consciously. And we did it anyway.” (Allen, 2017)

Now that we have enough understanding of how social media was designed to psychologically affect humans, the question becomes how it can be used or misused. By leveraging these known, intended psychological effects, social media can be used to cross the virtual/actual barrier and cause humans to take or not take actions, or to behave in a particular way. This can fulfill the objectives of the social media platforms themselves, or of actors who use them as a tool of disinformation, confusion, political unrest, or even armed conflict.

Noted internet and virtual reality pioneer Jaron Lanier wrote about these and many other concerns in his 2018 book, “Ten Arguments for Deleting Your Social Media Accounts Right Now.” In an April 2018 interview with the Intelligencer, he spelled out his concerns about the damage social media may be doing across society.

Weaponizing AI and Automation Using Social Media as the Delivery Method

Lanier, in a prescient and articulate fashion, expressed his very real concern about the potential of social media and its digital automation to have profoundly dangerous effects upon the world:

“One of the things that I’ve been concerned about is this illusion where you think that you’re in this super-democratic open thing, but actually it’s exactly the opposite; it’s actually creating a super concentration of wealth and power and disempowering you. This has been particularly cruel politically.

Every time there’s some movement, like the Black Lives Matter movement, or maybe now the March for Our Lives movement, or #MeToo, or very classically the Arab Spring, you have this initial period where people feel like they’re on this magic-carpet ride and that social media is letting them broadcast their opinions for very low cost, and that they’re able to reach people and organize faster than ever before. And they’re thinking, Wow, Facebook and Twitter are these wonderful tools of democracy. But then the algorithms have to maximize value from all the data that’s coming in. So, they test use that data. And it just turns out as a matter of course, that the same data that is a positive, constructive process for the people who generated it — Black Lives Matter, or the Arab Spring— can be used to irritate other groups. And unfortunately, there’s this asymmetry in human emotions where the negative emotions of fear and hatred and paranoia and resentment come up faster, more cheaply, and they’re harder to dispel than the positive emotions. So, what happens is, every time there’s some positive motion in these networks, the negative reaction is actually more powerful. So, when you have a Black Lives Matter, the result of that is the empowerment of the worst racists and neo-Nazis in a way that hasn’t been seen in generations. When you have an Arab Spring, the result ultimately is the network empowerment of ISIS and other extremists — bloodthirsty, horrible things, the likes of which haven’t been seen in the Arab world or in Islam for years, if ever.” (Lanier, 2018)

A mere two years later, we have seen protests and riots across the United States and the globe. They started in late May 2020, when George Floyd, a citizen of Minneapolis, was seen dying during a police restraint in which an officer knelt on his neck for over eight minutes. Spontaneous and understandable protests and cries for justice ensued, and social media became a tool employed to coordinate some of these demonstrations. Unfortunately, almost immediately, social media’s emotional and dopamine-intensive qualities were manipulated to turn peaceful protests into riots, violence, property damage, and even death across America. [See Figure 13.3]

Figure 13.3 Protest Tweet

Source: (Twitter Post @sugaaab_ retweeted by @rave_mom_breezy)

Large sections of the population have recently become more reliant upon social media, especially during the global COVID-19 pandemic, turning to social media and away from broadcast media in droves. According to Business Insider, in a “Harris Poll conducted between late March and early May, between 46% and 51% of US adults were using social media more since the outbreak began. In the most recent May 1–3 survey, 51% of total respondents — 60% of those ages 18 to 34, 64% of those ages 35 to 49, and 34% of those ages 65 and up – reported increased usage on certain social media platforms.” (Samet, 2020)

Couple a significant uptrend in the global usage of social media with a populace that has become increasingly, addictively, and compulsively tethered to it, and the result is an army of potential human assets that, to a certain degree, can be transformed into near-humanoids.

The ability to deploy a humanoid army, nearly instantly, anywhere in the world, is a potent delivery system. The ordnance must still be programmed into the delivery vehicle. Bots, fake accounts, fake news, disinformation, real-fake videos, and memes are all tools of social media information warfare, and they have increasingly been leveraged as weapons of information warfare globally.

Recently Jeff Elder of Business Insider reported:

“Media and citizen journalists are posting video, images, and accounts of scattered and chaotic protest events in response to the killing of George Floyd by a Minneapolis police officer, and the posts are being re-shared broadly. The result is an often-overwhelming stream of media from multiple sites and sources, and experts say audiences must be aware that the situation is being manipulated.

“People need to be aware that these events on the ground are being spun for political reasons,” says Angie Drobnic Holan, editor-in-chief of PolitiFact, the Pulitzer Prize-winning fact-checking news service of the Poynter Institute journalism think tank. Much of that spin likely comes from forces outside of America, the experts warn.

“Were there foreign-backed disinformation accounts targeting Americans this weekend? Absolutely. I am positive that was happening,” says Molly McKew, a writer and lecturer on Russian influence who advises the non-profit political group Stand Up America.” (Elder, 2020)

Information warfare is nothing new as a tool of conflict. Between the 1920s and 1940s, Germany practiced it extensively under Hitler’s Minister of Propaganda, Dr. Joseph Goebbels. According to Dr. Yaniv Livyatan, “Goebbels needed a staff of 1,000 to generate propaganda. Today all it takes is the click of a button.” (Livyatan, 2019) [See Figure 13.4]

Figure 13.4 Dr. Joseph Goebbels

Source: (Courtesy AP)

Dr. Livyatan believes that social media’s ubiquitous nature, its ease of automation, and its ability to instantly “push” notifications to subscribers, instead of waiting for them to search for content, make it a propaganda game changer. He continues:

So, it’s vital to understand who your audience is and what moves it. At the same time, the world is becoming a place where you can accumulate more and more knowledge of that kind. You can target your messages accurately. Scientifically. Think what Goebbels could have done with Facebook.

“Facebook is excellent for psychological warfare because they’re constantly collecting information about us. An analysis of that information is very illuminating with respect to our personalities, our aspirations, our opinions. We saw that vividly in the story of Cambridge Analytica [which acquired data from profiles of some 50 million Facebook users] in the 2016 U.S. election. Our behavior in the social networks, which we perceived as something innocent and mundane, has become an instrument through which we can be influenced via manipulative techniques. The information we volunteer, such as Likes, make it possible for those who want to, to understand how to communicate with us in a precise way.

Goebbels had a ministry of 1,000 personnel whose primary task was not to compose messages but to go into the field and examine how the messages work on people. Today you can do that by pressing a small button. If the Nazis had come to power today, they would have ruled the world.

Indeed, we see which rulers are rising to power today and what their messages look and sound like. We can take it that there’s a connection.” (Livyatan, 2019)

Conclusions

While many consider the implementation of Artificial Intelligence and autonomous technology to be concepts most closely associated with drones, driverless vehicles, and autonomous surface, sub-surface, and sea technology, students must keep a keen eye on their personal technology as well. According to Rescuetime.com, the average American checks their cell phone 58 times each day (McKay, 2019), so if you wanted to get a message in front of a target’s eyes, the phone and social media apps are the way to do it. When this is combined with addictive behavior and a growing reliance on short-form messaging and content, critical thinking is under attack, and those who seek to do harm are well aware of this troubling reality.

Questions for students to consider

- How would you respond to the mass dissemination on social media of a false video, built from film of the 9/11 attacks in 2001, designed to mislead the public into believing that a terror attack was occurring in New York City?

- Should social media technology be controlled, or be subject to oversight and regulation by the federal government, given the risk that it can easily be used to destabilize or even overthrow the nation?

- Should social media companies be allowed to employ algorithms and use encryption technology without giving the federal government or military access to the information in a time of national crisis?

- How would you slow the progress of the pernicious, addictive allure of social media upon today’s youth, or do you believe that is something best left to the social media companies themselves?

- Have you ever asked someone who told you about a particularly disturbing event where they saw the information, only to hear that the source was Facebook, Twitter, or another social media platform?

REFERENCES

@sugaaab_. (2020, May 27). Tweet. Minneapolis, Minnesota, United States.

Allen, M. (2017, November 9). Sean Parker unloads on Facebook: “God only knows what it’s doing to our children’s brains”. Retrieved from Axios.com: https://www.axios.com/sean-parker-unloads-on-facebook-god-only-knows-what-its-doing-to-our-childrens-brains-1513306792-f855e7b4-4e99-4d60-8d51-2775559c2671.html

Cellan-Jones, R. (2014, December 2). Stephen Hawking warns artificial intelligence could end mankind. Retrieved from bbc.com: https://www.bbc.com/news/technology-30290540

Cherry, K. (2020, May 25). The Skinner Box or Operant Conditioning Chamber. Retrieved from Verywellmind.com: https://www.verywellmind.com/what-is-a-skinner-box-2795875

Elder, J. (2020, June 2). Foreign actors and extremist groups are using disinformation on Twitter and other social networks to further inflame the protests across America, experts say. Retrieved from Business Insider: https://www.businessinsider.com/twitter-facebook-disinformation-george-floyd-unrest-politifact-2020-6

Lanier, J. (2018, April). “One Has This Feeling of Having Contributed to Something That’s Gone Very Wrong”. (N. Kulwin, Interviewer)

Livyatan, Y. (2019, January 6). Just Think What Goebbels Could Have Done With Facebook. Retrieved from Haaretz.com: https://www.haaretz.com/world-news/.premium.MAGAZINE-just-think-what-goebbels-could-have-done-with-facebook-1.7308812

Marr, B. (2020, February 17). Artificial Human Beings: The Amazing Examples Of Robotic Humanoids And Digital Humans. Retrieved from Forbes.com: https://www.forbes.com/sites/bernardmarr/2020/02/17/artificial-human-beings-the-amazing-examples-of-robotic-humanoids-and-digital-humans/#6103bce65165

McKay, J. (2019, March 21). Screen time stats 2019: Here’s how much you use your phone during the workday. Retrieved from Rescue Time: Blog: https://blog.rescuetime.com/screen-time-stats-2018/

Merriam-Webster. (2020, August 15). artificial intelligence noun. Retrieved from Merriam-Webster.com: https://www.merriam-webster.com/dictionary/artificial%20intelligence

Merriam-Webster. (2020, August 5). Definition of human (Entry 2 of 2). Retrieved from Merriam-Webster.com: https://www.merriam-webster.com/dictionary/human#h2

Merriam-Webster. (2020, August 11). humanity noun. Retrieved from Merriam-Webster.com: https://www.merriam-webster.com/dictionary/humanity

Merriam-Webster. (2020, August 12). humanoid adjective. Retrieved from Merriam-Webster.com: https://www.merriam-webster.com/dictionary/humanoids

Samet, A. (2020, June 9). 2020 US SOCIAL MEDIA USAGE: How the Coronavirus is Changing Consumer Behavior. Retrieved from Business Insider: https://www.businessinsider.com/2020-us-social-media-usage-report

Scientific American. (2013). How the Brain Gets Addicted to Gambling. Scientific American, 309, 5, 28-30.

Seiter, C. (2016, August 10). The Psychology of Social Media: Why We Like, Comment, and Share Online. Retrieved from Buffer: https://buffer.com/resources/psychology-of-social-media/

Skinner, B. F. (1977). Skinner- Operant Conditioning. Retrieved from YouTube: https://www.youtube.com/watch?v=LSv992Ts6as&feature=youtu.be&t=244

Stillwaggon Swan, L. (2017, June 29). Are Social Media Making Us Stupid? Retrieved from technologyandsociety.org: https://technologyandsociety.org/are-social-media-making-us-stupid/

Suler, J. (2004). The Online Disinhibition Effect. CyberPsychology & Behavior, 7(3), 321-326.

[1] Nations such as North Korea, Iran, and a few others have been referred to as hermit nations because, to the extent technologically possible, they restrict the use of social media.