9 Modeling, Simulations, and Extended Reality (Oetken)

This chapter provides an overview of immersive technology and surrounding use cases in emerging space systems. Recent advancements in virtual, augmented, and extended reality technologies have created new and exciting frameworks in the areas of simulation, modeling, and training. This chapter explores those frameworks and provides insight into how they fit within current and future space systems.

Student Learning Objectives

After reading this chapter, students should be able to do the following:

- Define and differentiate virtual, augmented, and extended reality

- Describe the framework of a virtual environment

- Define and differentiate the degrees of freedom in immersive simulation

- Differentiate use case protocols for augmented versus extended reality technology integration in space systems

Foundations of Immersive Systems Technology

Immersive technology and its relationship to human-centered emerging space systems have the potential to significantly enhance many facets of aerospace design and space exploration. Immersive systems are the technologies that system engineers and designers use to create an experience in which parts of a user’s physical world are enhanced with computer-generated input (The Interaction Design Foundation, 2021). Emerging space systems can be enhanced through immersive technologies that use virtual reality (VR), augmented reality (AR), and extended reality (XR). System engineers and designers are on the threshold of a tremendous opportunity to improve the user experience for pilots, astronauts, and other space-related occupations by embedding immersive systems technologies in the lab and in the field.

Virtual Reality

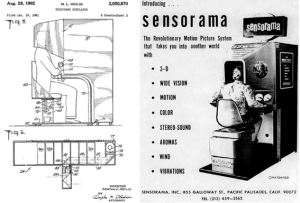

Many of the virtual reality technologies found in today’s ecosystems are built on ideas that date back to the 1800s. The first stereoscope, which used twin mirrors to combine two images into a single three-dimensional image, was invented in 1838, and that concept eventually evolved into the View-Master toy, which was patented in 1939 and is still in production. The next big technological leap in immersive systems came from Morton Heilig, who is often regarded as the father of VR (Gackenbach, 2017). Heilig envisioned a multisensory theater experience that would be more immersive than anything people had previously experienced. His Sensorama simulator (Figure 9-1) delivered that experience, combining 3-D images, stereo sound, wind, smells, and vibrations.

To make the Sensorama simulator more immersive, Heilig invented a side-by-side dual-film 3-D camera and projector. He made five films for the Sensorama: a motorcycle ride through New York City, a bicycle ride, a dune buggy ride, a helicopter ride over Century City, and a dance by a belly dancer (Carlson, 2017). During the motorcycle ride through New York, the seat vibrated as a motorbike would, air rushed through the user’s hair, and the user smelled the road and a passing bistro (Gackenbach, 2017).

Figure 9-1 The Sensorama

Source: (Basso, 2017)

In 1965, another inventor, Ivan Sutherland, created a head-mounted device that he described as a window into a virtual world. Sutherland’s device was the first head-mounted display to use a computer to mediate a VR system (Gackenbach, 2017). It became known as the “Sword of Damocles” (Figure 9-2). The name comes from the ancient Greek story of Damocles, who sat on a king’s throne beneath a sword suspended by a single hair; at any moment, the hair could break and the sword could fall on him (Skurzynski, 1994). Similarly, Sutherland’s headgear hung from a height-adjustable pole attached to the ceiling, an arrangement made necessary by the headgear’s extreme weight.

Figure 9-2 The Sword of Damocles

Source: (Van Krevelen, 2010)

This was the first time computers were used to display a real-world environment whose elements were augmented by a computer (Adams, 2014). The headgear combined cathode ray tube monitors, mechanical and ultrasonic tracking systems, eyeglass display optics, and a suite of computer programs and algorithms. Sutherland’s system projected a transparent, 3-D wireframe cube onto semitransparent optic lenses, creating the illusion that the cube was floating in the room (Sutherland, 1968). The graphics were primitive; however, the cube moved and tilted in step with the observer’s head movements.

The term “virtual reality” came into wide use in the 1980s, when Jaron Lanier began designing and developing the goggles and gloves needed to experience what he called “virtual reality.” Visual Programming Languages (VPL) was one of the first companies to design, develop, and sell VR products to consumers. VPL developed the DataGlove, the EyePhone, and AudioSphere (Figure 9-3). Used together, these devices created an immersive experience. The DataGlove had fiber optic cables running along the back of the glove; as the user bent and moved their hand, the light transmitted through the cables changed, and a computer interpreted these changes to generate an image on a small screen inside the EyePhone helmet. Two drawbacks limited the success of Lanier’s systems: they were too expensive for the average consumer, and the glove came in only one size (Burdea, 2003). Additionally, the DataGlove lacked tactile feedback, which weakened the sense of presence because touching a virtual object did not feel the way users expected from real life.

Figure 9-3 The VPL DataGlove and EyePhone

Source: (Sorene, 2014)

The 1970s and 1980s were an exciting time in the field of virtual reality. Advances in optical technology ran parallel to projects developing haptic devices and devices that would allow users to move around in virtual space. At NASA’s Ames Research Center in the mid-1980s, the Virtual Interface Environment Workstation (VIEW) system (Figure 9-4) combined a head-mounted device with VPL’s DataGloves to enable hand-based interaction. The VIEW system used a head-mounted stereoscopic display that could show either an artificial, computer-generated environment or a real environment relayed from remote video cameras (Rosson, 2022). Users could “step into” this environment and interact with it. For this project, NASA developed the DataSuit, a full-body garment equipped with sensors that extended the range of virtual reality simulations by reporting the user’s motions, bends, gestures, and spatial orientation to the computer (Rosson, 2022).

Figure 9-4 The NASA VIEW system

Source: (Rosson, 2022)

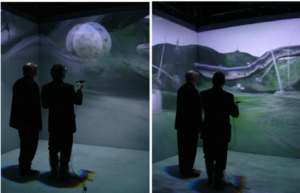

A large leap toward more interactivity in VR technology came in 2001 with the SAS cube, a computer-based cubic room (Figure 9-5). The SAS cube was nicknamed “The Cave,” a reference to Plato’s allegory of the cave, in which he challenges human ideas of perception, reality, and illusion. The SAS cube room used projectors and sensors driven by PCs that reacted to people in the room. Advances in PC graphics driven by the gaming industry meant that a cluster of relatively inexpensive PCs, rather than large supercomputers, could provide the processing power required for vivid, responsive interaction (Jacobson & Lewis, 2005; Jacobson et al., 2005). The SAS cube system used rear projectors to cast stereoscopic images onto four screens, one of which was the floor. Synchronizing the continuous images across all screens produced a virtual landscape. Users wore 3-D glasses equipped with motion tracking sensors that tracked head movement (Fuchs, 2011). The stereoscopic images made the environment look 3-D, and sensors let users interact with objects and navigate the space (Robertson, 2001).

Figure 9-5 The SAS Cube System

Source: (Jacobson & Lewis, 2005)

Augmented and Mixed Reality

Augmented reality systems differ from virtual reality in many conceptual and technical aspects. Rather than replacing the user’s view, augmented reality starts from the user’s own view of the physical world and enhances elements of it with computer-generated input and digital artifacts. These can range from sound and video to graphics and GPS overlays (The Interaction Design Foundation, 2021).

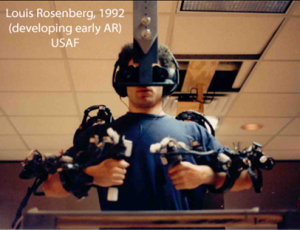

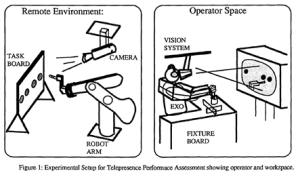

One of the first functional augmented reality systems was a robotic system designed and developed in 1992 by Louis Rosenberg at the United States Air Force Armstrong Research Lab. Rosenberg’s AR system, called Virtual Fixtures (Figures 9-6A and 9-6B), was a complex robotic system designed to compensate for the lack of high-speed 3-D graphics processing power in the early 1990s (The Interaction Design Foundation, 2021). The system overlaid sensory information onto a workspace to improve user productivity.

Figure 9-6A The Virtual Fixtures Robotic System

Source: (Rosenberg, 2022)

Figure 9-6B The Virtual Fixtures Robotic System

Source: (Rosenberg, 2022)

The first commercial AR application was introduced in 2008 by a German marketing agency in Munich. The agency designed a printed magazine ad for the BMW Mini that, when held in front of a computer’s camera, also displayed a model of the car on the screen. Because markers on the print ad were linked to the virtual car model, users could control the car on the screen and view it from different angles simply by moving the piece of paper (Javornik, 2016). The application was one of the first marketing campaigns to allow real-time interaction with a digital model.

Google’s Project Glass AR device (Figure 9-7) was presented to the public in 2012. Google Glass is an optical head-mounted display that is controlled with an integrated touch-sensitive sensor or with natural-language voice commands. After Google announced Google Glass, there was a surge of new AR research and greater public awareness of augmented reality technology (Arth, 2015). However, Google Glass never succeeded in the consumer market. In January 2015, Google announced that it would stop producing the Google Glass prototype. In July 2017, Google announced Google Glass Enterprise Edition, followed by an updated enterprise edition in 2019.

Figure 9-7 Google Glass AR Device

Source: (Statt, 2020)

In January 2015, Microsoft announced the HoloLens (Figure 9-8A), a headset that fuses AR and VR technologies. The device contains an integrated Windows computer with a see-through display and multiple sensors. The HoloLens is Microsoft’s take on augmented reality, which Microsoft calls mixed reality (Arth, 2015). Using multiple sensors, advanced optics, and holographic processing, the device generates holograms that blend with the environment and can be used to display information, overlay the real world, or even simulate a virtual world. Its many optical sensors include two on each side for understanding the surrounding environment and a main downward-facing depth camera that picks up hand motions, while specialized speakers simulate sound coming from anywhere in the room. The HoloLens also has several microphones, an HD camera, an ambient light sensor, and Microsoft’s proprietary Holographic Processing Unit, whose processing power is comparable to that of an average laptop. The device can sense the wearer’s position and orientation in the room, track walls and objects, and blend holograms into the physical environment. The Microsoft HoloLens 2 was released in 2019 and improved on the immersiveness, ergonomics, and connectivity of the original device.

Figure 9-8A Microsoft HoloLens Device

Source: (Landyshev, 2019)

Basics of Dynamic Modeling in Virtual Environments

A virtual world is an environment made up of objects, avatars, actuators, and other elements. In general, these are dynamic environments in which objects can move when touched, and forces and torques from various sources act on virtual objects. Simulating the dynamics of a virtual environment is an important part of an application, whether it involves rigid-body dynamics, deformation dynamics, or fluid dynamics (Mihelj, 2014). Including the relevant dynamic responses allows the virtual environment to behave realistically. A model of a body in a virtual environment must therefore describe its dynamic behavior, and this description also defines the body’s physical interactions with other bodies in the environment. Body dynamics can be described under various assumptions, which in turn determine the level of realism and the computational complexity of the simulation.
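As a minimal sketch of how one set of assumptions turns into code, the Python below models a body as a point mass that translates but does not rotate or deform. It accumulates the forces acting on the body during a frame and advances the body with semi-implicit Euler integration at a fixed time step. The class, function, and parameter names are hypothetical illustrations, not taken from Mihelj et al. (2014).

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PointMassBody:
    """Hypothetical simplest body model: translation only, no rotation or deformation."""
    mass: float                      # kg
    position: List[float]            # m, [x, y, z]
    velocity: List[float] = field(default_factory=lambda: [0.0, 0.0, 0.0])  # m/s
    force: List[float] = field(default_factory=lambda: [0.0, 0.0, 0.0])     # N, summed this frame

    def apply_force(self, f: List[float]) -> None:
        # Forces from different sources (gravity, a user's touch, actuators) simply add up.
        for i in range(3):
            self.force[i] += f[i]

    def step(self, dt: float) -> None:
        # Semi-implicit Euler: update velocity from a = F / m first,
        # then update position using the *new* velocity.
        for i in range(3):
            self.velocity[i] += self.force[i] / self.mass * dt
            self.position[i] += self.velocity[i] * dt
        self.force = [0.0, 0.0, 0.0]  # clear the accumulator for the next frame


GRAVITY = (0.0, -9.81, 0.0)  # m/s^2, assuming the y axis points up


def simulate(body: PointMassBody, steps: int, dt: float) -> PointMassBody:
    """Advance the body under gravity for a fixed number of time steps."""
    for _ in range(steps):
        body.apply_force([body.mass * g for g in GRAVITY])
        body.step(dt)
    return body
```

Replacing the point mass with a rotating rigid body, a deformable mesh, or a fluid model would add realism at the cost of more computation per frame, which is the trade-off described above.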

Interaction and Simulation in Complex Systems

Complex immersive systems require advanced levels of interaction within the virtual environment in order to maintain integrity. Mihelj et al. (2014) describe interaction with a computer-generated environment as follows:

Interaction with a computer-generated environment requires the computer to respond to the user’s actions. The mode of interaction with a computer is determined by the type of the user interface. Proper design of the user interface is of utmost importance since it must guarantee the most natural interaction possible. The concept of an ideal user interface uses interactions from the real environment as metaphors through which the user communicates with the virtual environment (p. 205).

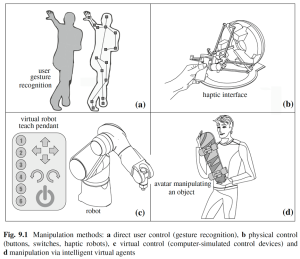

The principal elements of interaction inside a virtual environment can be broken down into three main functionalities: manipulation, navigation, and communication. Mihelj et al. (2014) note that “manipulation allows the user to modify the virtual environment and manipulate objects within it; navigation allows the user to move through the virtual environment; and communication occurs between different users or between users and digital intermediaries within the virtual environment” (p. 207). One of the advantages of operating in an immersive environment is the ability to interact with or manipulate objects in the environment (Mihelj, 2014). The ability to experiment in a new environment, real or virtual, enables the user to learn how the environment functions. Figure 9-8B shows some manipulation methods.

Figure 9-8B Manipulation Methods

Source: (Mihelj, 2014)
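Manipulation usually begins with selecting an object. One widely used selection technique is ray casting: a ray from the user’s hand or controller is tested against scene objects, and the nearest object hit is selected. The sketch below assumes each object is approximated by a bounding sphere; the function names and scene are hypothetical, and the technique is offered as a common example rather than as one of the specific methods in Figure 9-8B.

```python
import math
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


def ray_sphere_distance(origin: Vec3, direction: Vec3, center: Vec3, radius: float) -> Optional[float]:
    """Distance along a unit-length ray to a sphere, or None if the ray misses it."""
    # Solve |o + t*d - c|^2 = r^2 for t, with d normalized:
    # t^2 + 2*b*t + c = 0, where b = d . (o - c) and c = |o - c|^2 - r^2.
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray's line never touches the sphere
    root = math.sqrt(disc)
    t = -b - root          # near intersection
    if t < 0:
        t = -b + root      # origin inside the sphere: use the far intersection
    return t if t >= 0 else None  # sphere entirely behind the user


def pick(origin: Vec3, direction: Vec3,
         objects: Sequence[Tuple[str, Vec3, float]]) -> Optional[str]:
    """Return the name of the nearest object hit by the pointing ray, or None."""
    # Normalize the pointing direction so t is a distance in scene units.
    n = math.sqrt(sum(d * d for d in direction))
    d = tuple(x / n for x in direction)
    best_name, best_t = None, math.inf
    for name, center, radius in objects:
        t = ray_sphere_distance(origin, d, center, radius)
        if t is not None and t < best_t:
            best_name, best_t = name, t
    return best_name
```

Choosing the nearest hit means a closer object occludes a farther one along the same ray, which matches what the user sees.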

Navigation represents movement in space from one point to another. It includes two important components: (1) travel, meaning how the user moves through space and time, and (2) path planning, meaning the methods for determining and maintaining awareness of position in space and time, as well as planning a trajectory through space to the desired location (Mihelj, 2014). Moreover, simultaneous activity of multiple users in a virtual environment is a vital aspect of a VR framework. Mihelj et al. (2014) offer a good description of how simultaneous activity can be achieved in a virtual environment:

Users’ actions in virtual reality can be performed in different ways. If users work together in order to solve common problems, the interaction results in cooperation. However, users may also compete among themselves or interact in other ways. In an environment where many users operate at the same time, different issues need to be taken into account. It is necessary to specify how the interaction between persons will take place, who will have control over the manipulation or communication, how to maintain the integrity of the environment and how the users communicate. Communication is usually limited to visual and audio modalities. However, it can also be augmented with haptics (p. 210).
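To illustrate the path-planning component of navigation described above, here is a minimal sketch that assumes the walkable floor of a virtual environment is represented as a hypothetical 2-D grid, where `#` marks an obstacle. Breadth-first search finds a shortest route, counted in grid cells, from the user’s position to a desired location; practical systems often use more elaborate planners, so this is an illustration of the idea only.

```python
from collections import deque
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]  # (row, column)


def plan_path(grid: List[str], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a grid ('#' = obstacle), or None if unreachable."""
    rows, cols = len(grid), len(grid[0])
    came_from: Dict[Cell, Optional[Cell]] = {start: None}  # doubles as the visited set
    queue = deque([start])
    while queue:
        cell = queue.popleft()
        if cell == goal:
            # Walk back through the predecessors to recover the path.
            path = []
            current: Optional[Cell] = cell
            while current is not None:
                path.append(current)
                current = came_from[current]
            return path[::-1]
        r, c = cell
        for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            inside = 0 <= nr < rows and 0 <= nc < cols
            if inside and grid[nr][nc] != "#" and (nr, nc) not in came_from:
                came_from[(nr, nc)] = cell
                queue.append((nr, nc))
    return None  # goal cannot be reached from start
```

Because breadth-first search expands cells in order of distance from the start, the first time it reaches the goal it has found a path with the fewest moves.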

As technology advances, immersive systems become more complex, and using them requires many new skills. Engineers and designers are still developing best practices and frameworks, but many of the principles for dealing with this complex environment are already established. Mihelj et al. (2014) describe some of these applications in detail:

Extended reality is a medium that is suitable for training operators of such systems. Thus, it is possible to practice flying an aircraft, controlling a satellite, navigating a space vehicle, repairing an engine, and many other tasks in a virtual environment. Such environments are also important in the field of advanced robotics. Their advantage is not only that they enable simulation of a robotic cell but also behave as a real robot controller. This saves time required for programming since robot teaching is done in a simulation environment offline while the robot can still be used in a real environment in the meantime. At the same time, a virtual environment enables verification of software correctness before the program is finally transferred to the robot. Simulation-based programming may thus help avoid potential system malfunctions and consequent damage to the mechanism or robot cell (p. 217-219).

Degrees of Freedom in Immersive Simulation

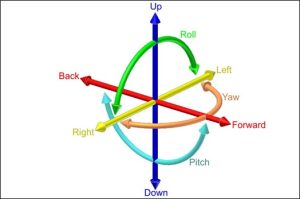

Degrees of freedom (DoF) refers to the number of basic ways a rigid object can move through 3-D space. In total, there are six degrees of freedom. Three correspond to rotation about the x, y, and z axes and are commonly called pitch, yaw, and roll. The other three correspond to translation along the x, y, and z axes: moving forward or backward, left or right, and up or down (often called surge, sway, and heave).
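The six degrees of freedom can be combined into a single pose: three rotation angles plus a three-component translation. The sketch below applies such a pose to a point. It assumes a right-handed frame in which pitch rotates about x, yaw about y, and roll about z (matching the order listed above), and it composes the rotation as R = R_y(yaw) R_x(pitch) R_z(roll). Other axis conventions and rotation orders are common, so both choices are assumptions.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = List[List[float]]


def rot_x(a: float) -> Mat3:
    """Rotation about the x axis (pitch, under the assumed convention)."""
    c, s = math.cos(a), math.sin(a)
    return [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]


def rot_y(a: float) -> Mat3:
    """Rotation about the y axis (yaw)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]


def rot_z(a: float) -> Mat3:
    """Rotation about the z axis (roll)."""
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]


def matmul(a: Mat3, b: Mat3) -> Mat3:
    """Product of two 3x3 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)] for i in range(3)]


def apply_pose(point: Vec3, pitch: float, yaw: float, roll: float, translation: Vec3) -> Vec3:
    """Apply a 6DoF pose: rotate by (pitch, yaw, roll) in radians, then translate."""
    r = matmul(rot_y(yaw), matmul(rot_x(pitch), rot_z(roll)))  # R = Ry * Rx * Rz
    rotated = [sum(r[i][k] * point[k] for k in range(3)) for i in range(3)]
    return (rotated[0] + translation[0],
            rotated[1] + translation[1],
            rotated[2] + translation[2])
```

With all three angles set to zero, the pose reduces to a pure translation; with a zero translation, it reduces to a pure rotation.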

Most VR and XR headsets and input devices use either a 3DoF or a 6DoF configuration. A 3DoF system tracks rotational motion but not translation. In an immersive environment, this means the system can detect whether the user has turned their head left or right, tilted it up or down, or tilted it toward either shoulder. A 6DoF system (Figure 9-9) additionally tracks translational motion, that is, whether the user has moved forward, backward, sideways, or up and down.

Figure 9-9 6DoF Illustration

Source: Creative Commons Attribution-ShareAlike 4.0 International (CC BY-SA 4.0)
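The practical difference between the two configurations shows up in the head pose that a tracker hands to the renderer. In the hypothetical sketch below, a 3DoF headset passes through the measured orientation but substitutes a fixed head position (an assumed seated eye height), so leaning or stepping does not change the view, whereas a 6DoF headset passes through both orientation and position.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class Pose:
    orientation: Tuple[float, float, float]  # (pitch, yaw, roll) in radians
    position: Tuple[float, float, float]     # (x, y, z) in meters


# Hypothetical fixed head position used when translation is not tracked.
DEFAULT_HEAD_POSITION = (0.0, 1.2, 0.0)


def rendered_head_pose(measured: Pose, dof: int) -> Pose:
    """Head pose the renderer would use for a 3DoF or a 6DoF headset."""
    if dof == 6:
        return measured  # rotation and translation are both tracked
    if dof == 3:
        # Only rotation is tracked; the measured translation is discarded.
        return Pose(measured.orientation, DEFAULT_HEAD_POSITION)
    raise ValueError("dof must be 3 or 6")
```

Discarding translation is why a user who physically leans toward a virtual object in a 3DoF headset sees no change in parallax, one of the mismatches between body motion and the displayed image.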

Use of Motion Control Platforms

Cybersickness, or virtual reality sickness, is similar to motion sickness. The symptoms are almost identical: users feel dizzy, as they might on a car trip, or nauseous, as they might on a bumpy plane ride. The intensity of cybersickness depends in part on the VR technology the headset uses. Symptoms often arise when the eyes perceive motion displayed in the headset that the body does not feel, which disrupts the user’s sense of presence and balance. Through mechanical movement, motion control platform systems (Figure 9-10) allow users to stay immersed in longer virtual and extended reality simulation sessions without feeling nauseous. Motion control systems apply the physics of 3DoF or 6DoF motion to minimize mismatches between movement and graphics, which reduces disorientation for the user.

Figure 9-10 3DoF Motion Control Platform System

Source: (Motion Systems EU, 2022)
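A motion platform can reproduce only the motion that fits within its mechanical range, so a simulator must map the virtual vehicle’s attitude onto what the actuators can actually do. The sketch below assumes a hypothetical 3DoF platform driven in pitch, roll, and yaw, with made-up angle and rate limits. It simply clamps each axis to its range and limits how fast the command may change; it is not the motion-cueing method of any particular product.

```python
from typing import Dict

# Hypothetical limits, not taken from any real platform.
ANGLE_LIMITS = {"pitch": 25.0, "roll": 25.0, "yaw": 30.0}  # degrees
RATE_LIMIT = 60.0                                          # degrees per second


def clamp(x: float, lo: float, hi: float) -> float:
    return max(lo, min(hi, x))


def platform_command(vehicle: Dict[str, float], current: Dict[str, float],
                     dt: float) -> Dict[str, float]:
    """Next platform angles that follow the vehicle attitude within hardware limits."""
    command = {}
    max_step = RATE_LIMIT * dt  # largest change allowed in one update
    for axis, limit in ANGLE_LIMITS.items():
        # Stay inside the mechanical range of this axis.
        target = clamp(vehicle[axis], -limit, limit)
        # Move toward the target without exceeding the rate limit (avoids jerks).
        delta = clamp(target - current[axis], -max_step, max_step)
        command[axis] = current[axis] + delta
    return command
```

Clamping means extreme maneuvers cannot be reproduced one-to-one, and the rate limit trades a small lag for smoother motion; both are places where movement and graphics can still disagree.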

AR and XR Use Cases in Space Systems

In order for XR solutions to become a mainstream addition to the modern space systems workflow, they need to be efficient, immersive, and ergonomic. Hand and eye tracking solutions are ideal for making the XR experience feel more natural, but it is also important for the right hardware and software innovations to be implemented to ensure these features deliver the right results (Carter, 2022). For example, lightweight and untethered headsets with powerful displays will make it easier to engage in XR environments for longer periods of time. To improve the overall ergonomic experience, developers are researching eye-tracking technology to detect the visual needs of the user at any given time and adjust the rendering accordingly (Carter, 2022). Additionally, enhanced artificial intelligence solutions built into XR technology will aid in making the immersive experience feel more natural when it comes to using hand and eye tracking software. The right AI innovations will be able to track even the most minute finger movements and gestures, even when parts of a person’s hand are hidden from direct view (Carter, 2022).

The current standard for human-system integration in space hardware development relies on high-fidelity mockups to test operational scenarios and human interactions. This process is repeated at different scales and development stages, and it usually requires substantial resources and implementation time (Netti, 2021). Immersive technologies can help mitigate this problem by reducing dependence on physical prototypes and condensing the iterative evaluation and implementation process, streamlining the transition from CAD modeling to human-in-the-loop testing. NASA is currently using XR simulation technology to test a Multi-Mission Extra Vehicular Robot (MMEVR) designed to be a collaborative, autonomous robot for extravehicular activity (EVA) operations. The MMEVR human-system integration experiment (Figure 9-11) aims to explore the robot’s collaboration capabilities in routine spacecraft maintenance scenarios and to understand how hardware performance is affected by the human component and, conversely, how human capabilities in space are affected by the hardware (Netti, 2021).

Figure 9-11 MMEVR Testing Environment

Source: (Netti, 2021)

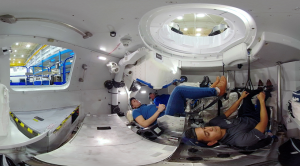

Crewed space missions require astronauts to practice and simulate every step of the flight thousands of times. Although launching a spacecraft from the ground to orbit takes only 12 minutes, it requires years of preparation and hundreds of hours of complex training simulations (varjo.com, 2022). For the mission to succeed, everything needs to go flawlessly. The Boeing Starliner flight-test crew in Houston has adopted a new way to train for this space system: the Varjo XR3 extended reality system (Figure 9-12). The XR3 system allows astronauts to train remotely from anywhere in the world with the same level of realism and interaction as in a physical simulator. The system’s high visual quality ensures that virtual instruments in the spacecraft can be read and operated accurately. This type of immersive simulation can be applied to a wide range of procedures. It also makes it possible to keep training in crew quarters during pre-launch quarantine, which was previously impossible.

Figure 9-12 Boeing Starliner Varjo XR3 Testing

Source: (nbcnews, 2018)

NASA is also using augmented reality technology to explore applications that can assist astronauts aboard the International Space Station (Experiment details, 2022). The T2 Augmented Reality (T2AR) project (Figure 9-13) demonstrates how station crew members can inspect and maintain scientific and exercise equipment critical to crew health and research goals without assistance from ground teams (Guzman, 2021). The demonstration used 3-D directional cues to direct the astronaut’s gaze to specific work sites and displayed procedure instructions. The device followed an astronaut’s voice commands to navigate procedures and displayed AR cues and procedure text over the hardware as appropriate for the step being performed (Guzman, 2021). The system also provided supplemental information, such as instructional videos and system overlays, to assist in performing the procedure.

Figure 9-13 NASA T2AR Project Demonstration

Source: (NASA, 2021)

Future Thinking in Immersive Systems Technology

The future of immersive systems technology is exciting yet full of unknowns. Engineers and designers are just beginning to harness the full potential of new technological advancements. Intelligent systems, machine learning, advanced microprocessors, and advanced optics will provide the platforms for new immersive systems to emerge. XR and AR technologies will eventually integrate more seamlessly with the human body, a prospect well suited to complex space systems. One route is AR contact lenses. AR glasses will likely become better, cheaper, and more comfortable, but they may eventually be made obsolete by AR lenses, which are already in development.

The Mojo AR contact lens prototype (Figure 9-14) is a significant step forward for advanced immersive technology. The Mojo Lens prototype accelerates the development of invisible computing, a next-generation computing experience in which information is available and presented only when needed (Mojo, 2022). This type of AR experience allows users to access timely information quickly and discreetly without looking down at a screen or losing focus on the people and physical world around them.

Figure 9-14 Mojo Advanced AR Contact Lens

Source: (Mojo, 2022)

The power of XR and AR technology lies in its ability to turn information into experiences, which can make many aspects of life richer and more fulfilling. For businesses, XR offers considerable potential, whether that means engaging more deeply with customers, creating immersive training solutions, streamlining processes such as manufacturing and maintenance, or offering customers innovative solutions to their problems. In the end, the potential benefits of XR and AR far outweigh the challenges.

References

Adams, R. &. (2014). Augmenting virtual reality. Military Technology, 38(12), 16–24.

Arth, C. G. (2015). The history of mobile augmented reality. arXiv preprint arXiv:1505.01319.

Basso, A. (2017). Advantages, critics and paradoxes of virtual reality applied to digital systems of architectural prefiguration, the phenomenon of virtual migration. Proceedings of the International and Interdisciplinary Conference IMMAGINI. Brixen, Italy: Conference IMMAGINI.

Buis, A. (2021, August 3). Earth’s Magnetosphere: Protecting Our Planet from Harmful Space Energy. Retrieved August 12, 2022, from NASA Global Climate Change: https://climate.nasa.gov/news/3105/earths-magnetosphere-protecting-our-planet-from-harmful-space-energy/

Burdea, G. C. (2003). Virtual reality technology (Vol. 1). Hoboken, NJ: John Wiley & Sons.

Carlson. (2017). history/lesson17.html. Retrieved from design.osu.edu: https://design.osu.edu/carlson/history/lesson17.html

Carter, R. (2022). The hottest trends in XR hand and eye tracking for 2022. XR Today. Retrieved from https://www.xrtoday.com/mixed-reality/the-hottest-trends-in-xr-hand-and-eye-tracking-for-2022

Experiment details. (2022, Aug 21). Retrieved from www.nasa.gov: https://www.nasa.gov/mission_pages/station/research/experiments/explorer/Investigation.html#id=7587

Fuchs, P. M. (2011). Virtual interfaces. Virtual reality: Concepts and technologies. CRC Press.

Gackenbach, J. B. (2017). Looking for the Ultimate Display: A Brief History of Virtual Reality. In Boundaries of self and reality online: Implications of digitally constructed realities . Academic Press Essay, pp. 239–259.

Group on Earth Observations. (n.d.). About Us. Retrieved August 8, 2022, from Group on Earth Observations: https://www.earthobservations.org/geo_community.php

Guzman, A. (2021, Aug 21). New augmented reality applications assist astronaut repairs to space. NASA. Retrieved from www.nasa.gov: https://www.nasa.gov/mission_pages/station/research/news/augmented-reality-applications-assist-astronauts

Holzinger, M. J., Chow, C. C., & Garretson, P. (2021, May 3). AFRL Portal. Retrieved August 7, 2022, from A Primer on Cislunar Space: https://www.afrl.af.mil/Portals/90/Documents/RV/A%20Primer%20on%20Cislunar%20Space_Dist%20A_PA2021-1271.pdf?ver=vs6e0sE4PuJ51QC-15DEfg%3D%3D

Howell, E. (2017, August 21). Lagrange Points: Parking Places in Space. Retrieved August 7, 2022, from Space.com: https://www.space.com/30302-lagrange-points.html

Hudson, K. E. (1990). Communications Satellites: Their Development and Impact. New York, NY: Macmillan Inc.

Indian Space Research Organization. (n.d.). Earth Observation Applications. Retrieved August 8, 2022, from Indian Space Research Organization: https://www.isro.gov.in/earth-observation/applications

Jacobson, J., & Lewis, M. (2005). Game engine virtual reality with CaveUT. Computer, 38(4), 79–82.

Jacobson, J., Le Renard, M., Lugrin, J.-L., & Cavazza, M. (2005). The CaveUT system. Proceedings of the 2005 ACM SIGCHI International Conference on Advances in Computer Entertainment Technology (ACE ’05). ACE. doi:https://doi.org/10.1145/1178477.1178503

Javornik, A. (2016, Oct 4). The mainstreaming of augmented reality: A brief history. Harvard Business Review. Retrieved from hbr.org: https://hbr.org/2016/10/the-mainstreaming-of-augmented-reality-a-brief-history

Jones, A. (2022, July 1). China launches new Gaofen 12 Earth observation satellite. Retrieved August 8, 2022, from Space.com: https://www.space.com/china-launches-gaofen-12-satellite

JPL. (2001, November 3). Seals, Sea Lions and Satellites. Retrieved August 9, 2022, from Jet Propulsion Laboratory: https://www.jpl.nasa.gov/news/seals-sea-lions-and-satellites

Landyshev. (2019). mixed-reality-microsoft-hololens-headset-business/. Retrieved from www.visartech.com/: https://www.visartech.com/blog/mixed-reality-microsoft-hololens-headset-business/

Mihelj, M. N. (2014). Virtual reality technology and applications. London: Springer.

Mojo. (2022, March 30). we-have-reached-a-significant-milestone. Retrieved from www.mojo.vision/: https://www.mojo.vision/news/we-have-reached-a-significant-milestone-blog

Motion Systems EU. (2022, May 30). product/simulators/ps-3rot-150-v2/. Motion Systems EU. Retrieved from https://motionsystems.eu/product/simulators/ps-3rot-150-v2/

NASA. (2010, November 9). Global View of Fine Aerosol Particles. Retrieved August 12, 2022, from NASA Earth Observatory: https://earthobservatory.nasa.gov/images/46823/global-view-of-fine-aerosol-particles

NASA. (2010, September 22). New Map Offers a Global View of Health-Sapping Air Pollution. Retrieved August 12, 2022, from NASA Earth Observatory: https://www.nasa.gov/topics/earth/features/health-sapping.html

NASA. (2010, April 1). TIROS, the Nation’s First Weather Satellite. Retrieved August 6, 2022, from NASA History: https://www.nasa.gov/multimedia/imagegallery/image_feature_1627.html

NASA. (2012, April 9). NASA Views Our Perpetual Ocean. Retrieved August 9, 2022, from NASA: https://www.nasa.gov/topics/earth/features/perpetual-ocean.html

NASA. (2016, September 16). Sea Ice. Retrieved August 9, 2022, from NASA Earth Observatory: https://earthobservatory.nasa.gov/features/SeaIce/page1.php

NASA. (2017, October 26). A River of Rain Connecting Asia and North America. Retrieved August 12, 2022, from NASA Earth Observatory: https://earthobservatory.nasa.gov/images/91175/a-river-of-rain-connecting-asia-and-north-america

NASA. (2021, June 23). Earth Observing System Project Science Office. Retrieved August 8, 2022, from NASA: https://eospso.nasa.gov/content/nasas-earth-observing-system-project-science-office

NASA. (2021, April 15). NASA-Built Instrument Will Help to Spot Greenhouse Gas Super-Emitters. Retrieved August 12, 2022, from NASA: https://www.nasa.gov/feature/jpl/nasa-built-instrument-will-help-to-spot-greenhouse-gas-super-emitters

NASA. (2021, August 25). Protecting the Ozone Layer Also Protects Earth’s Ability to Sequester Carbon. Retrieved August 12, 2022, from NASA: https://www.nasa.gov/feature/goddard/esnt/2021/protecting-the-ozone-layer-also-protects-earth-s-ability-to-sequester-carbon

NASA. (2021, October). Satellites View California Oil Spill. Retrieved August 9, 2022, from NASA Earth Observatory: https://earthobservatory.nasa.gov/images/148929/satellites-view-california-oil-spill

NASA. (2022, July 22). 50 Years of Landsat. Retrieved August 6, 2022, from NASA History: https://www.nasa.gov/image-feature/50-years-of-landsat

NASA. (2022). Aqua Earth-observing Satellite Mission. Retrieved August 8, 2022, from NASA Earth Observing Systems: https://aqua.nasa.gov

NASA. (2022). NASA Covers Wildfires Using Many Sources. Retrieved August 12, 2022, from NASA Earth Observatory: https://www.nasa.gov/mission_pages/fires/main/missions/index.html

NASA. (2022). Ozone Monitoring Instrument (OMI). Retrieved August 12, 2022, from NASA Aura: https://aura.gsfc.nasa.gov/omi.html

nbcnews. (2018, September 30). 360-video-inside-boeing-s-starliner-space-capsule. Retrieved from https://www.nbcnews.com/mach/science/360-video-inside-boeing-s-starliner-space-capsule-ncna914856

Netti, V., Guzman, L., & Rajkumar, A. (2021). A framework for use of immersive technologies for human-system integration testing of space hardware. In 2021 ACM CHI Virtual Conference on Human Factors in Computing Systems. Yokohama: ACM.

NOAA. (2011, August 1). Ocean Currents. Retrieved August 9, 2022, from National Oceanic and Atmospheric Administration (NOAA): https://www.noaa.gov/education/resource-collections/ocean-coasts/ocean-currents

Pacific Marine Mammal Center. (2019, January 2). Satellite Tracking. Retrieved August 12, 2022, from Pacific Marine Mammal Center: https://www.pacificmmc.org/satellite-tracking

Parker, J. S., & Anderson, R. L. (2013, July). Transfers to Low Lunar Orbits. Retrieved August 7, 2022, from JPL DESCANSO Book Series: https://descanso.jpl.nasa.gov/monograph/series12/LunarTraj--05Chapter4TransferstoLowLunarOrbits.pdf

Patel, K. (2019, March 1). 5 Stories from 5 Years of Precipitation Measurements from Space. Retrieved August 9, 2022, from NASA Earth Observatory: https://earthobservatory.nasa.gov/blogs/earthmatters/2019/03/01/5-stories-from-5-years-of-precipitation-measurements-from-space/

Preston, S. (2022, April 26). Monitoring River Flushing And Hydropower From Space. Retrieved 2022, from Planet Pulse: https://www.planet.com/pulse/monitoring-river-flushing-and-hydropower-from-space/

Robertson, R. (2001, November 11). Immersed in art. Computer Graphics World, 24(11). Retrieved from http://www.cgw.com/Publications/CGW/2001/Volume-24-Issue-11-November-2001-/immersed-in-art.aspx

Rosenberg, L. (2022, May 19). How a parachute accident helped jump-start augmented reality. IEEE Spectrum. Retrieved from https://spectrum.ieee.org/history-of-augmented-reality

Rosson, L. (2022, August 21). The Virtual Interface Environment Workstation (VIEW), 1990. NASA. Retrieved from https://www.nasa.gov/ames/spinoff/new_continent_of_ideas

Skurzynski, G. (1994). Virtual reality. Cricket, 21(11), 42–46.

Sorene, P. (2014, November 24). Jaron Lanier’s EyePhone: Head and glove virtual reality in the 1980s. Flashbak. Retrieved from https://flashbak.com/jaron-laniers-eyephone-head-and-glove-virtual-reality-in-the-1980s-26180/

Statt. (2020, February 4). Google opens its latest Google Glass AR headset for direct purchase. The Verge. Retrieved from https://www.theverge.com/2020/2/4/21121472/google-glass-2-enterprise-edition-for-sale-directly-online

Sutherland, I. E. (1968). A head-mounted three dimensional display. In Proceedings of the AFIPS Fall Joint Computer Conference (pp. 757–764). Retrieved from http://design.osu.edu/carlson/history/PDFs/p757-sutherland.pdf

Temming, M. (2021, January 21). Space Station Detectors Found the Source of Weird ‘Blue Jet’ Lightning. Retrieved August 12, 2022, from Science News: https://www.sciencenews.org/article/space-station-detectors-found-source-weird-blue-jet-lightning

The Interaction Design Foundation. (2021). augmented-reality-the-past-the-present-and-the-future. Retrieved from https://www.interaction-design.org/literature/article/augmented-reality-the-past-the-present-and-the-future

The Space Option. (2012, October 1). Cislunar Space. Retrieved August 7, 2022, from The Space Option: https://thespaceoption.com/portfolio/cislunar-space/

Van Krevelen, D. W. (2010). A survey of augmented reality technologies, applications and limitations. International Journal of Virtual Reality, 9(2), 1–20. https://doi.org/10.20870/ijvr.2010.9.2.2767

varjo.com. (2022, August 21). Varjo & Boeing: A new era in astronaut training using virtual reality. Retrieved from https://varjo.com/boeing-Starliner/

World Health Organization. (2022). Air Pollution. Retrieved August 12, 2022, from WHO Health Topics: https://www.who.int/health-topics/air-pollution#tab=tab_1

World Meteorological Organization. (2022, February 1). WMO certifies two megaflash lightning records. Retrieved August 12, 2022, from World Meteorological Organization: https://public.wmo.int/en/media/press-release/wmo-certifies-two-megaflash-lightning-records