Cyberethics

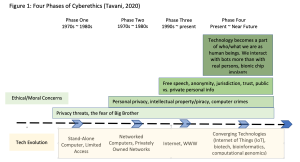

Concerns about ethical and moral issues involving technology have been raised since the late 1940s, when the first operational computer was invented (Tavani, 2016), and have evolved alongside technology itself. Scholars, researchers, and practitioners have used a variety of terms to capture different aspects of these concerns. Terms widely used in the literature include “computer ethics,” “machine ethics,” “Internet ethics,” “information ethics,” “technology ethics,” “robot ethics,” “AI ethics,” “machine learning ethics,” “blockchain ethics,” and “cyberethics,” to name just a few. Cyberethics, defined “as the field of applied ethics that examines moral, legal, and social issues in the development and use of cybertechnology” (Spinello, 2020, p. 1), seems broad enough to encompass the ethical and moral issues that most of the other terms try to address. According to Tavani (2016), our understanding of the ethical and moral issues involving technology, which we now refer to as cyberethics, has evolved through four phases that parallel the history of the technology revolution (see Figure 1).

The lesson of this history is that although today’s technologies look nothing like those of earlier decades, the ethical and moral issues raised at the beginning still exist, and new ones join them as new technologies are developed. Therefore, digital leaders who want to lead successful organizational Dx need to stay on top of cyberethics.